Every cloud budget has a few black holes—S3 backups are one of them. You configure versioning, add Object Lock for compliance, and expect smooth sailing. But then the bill arrives, and suddenly you're asking: Why are we paying so much for backup storage?

The answer usually lies in hidden behaviors and overlooked defaults that silently drive costs. This guide breaks down exactly where those charges come from and how to fix them. And for even more on this topic, join our upcoming live session on How to Cut Cloud Data Retention Costs.

4 Hidden S3 Backup Costs (and How to Fix Them)

1. S3 Versioning and Object Lock

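Versioning keeps a full copy of every overwrite, and a delete only adds a delete marker while the earlier versions stay in the bucket. Object Lock blocks deletion of protected versions until their retention period ends. Combined, they create a compounding cost problem: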

- Old versions pile up.

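- Locked versions stay billable until their retention period expires, and lifecycle rules cannot remove them sooner.

- Lifecycle rules often miss noncurrent versions, delete markers, or whole prefixes.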

Real Talk: Lifecycle policies are great in theory, but in practice, they’re often misapplied or forgotten across siloed teams.
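To see how quickly versions compound, here is a back-of-the-envelope sketch. The function and its parameters are hypothetical, and it assumes every overwrite stores a full new copy of the object and that a lifecycle rule, if present, expires noncurrent versions after a fixed number of days.

```python
def versioned_storage_gb(object_gb, overwrites_per_day, days, keep_noncurrent_days=None):
    """Estimate GB stored for one key in a versioned bucket after `days`.

    Assumes each overwrite stores a full copy. If keep_noncurrent_days is
    given, noncurrent versions older than that are assumed to be expired
    by a lifecycle rule; otherwise every version is kept.
    """
    noncurrent_versions = overwrites_per_day * days
    if keep_noncurrent_days is not None:
        # Only overwrites from the most recent retention window survive.
        noncurrent_versions = overwrites_per_day * min(days, keep_noncurrent_days)
    # One current version plus whatever noncurrent versions remain.
    return object_gb * (1 + noncurrent_versions)


# A 1 GB backup file overwritten once a day for a year.
print(versioned_storage_gb(1, 1, 365))                           # 366
print(versioned_storage_gb(1, 1, 365, keep_noncurrent_days=30))  # 31
```

The estimate ignores Object Lock: a locked version stays in the total until its retention period ends, whatever the lifecycle rule says.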

2. Multipart Upload Leftovers

Parts from abandoned multipart uploads are billed as storage even though they never appear in a normal object listing. They persist until the upload is completed or aborted, or until a lifecycle rule aborts it for you.

Why it happens: Developers initiate large uploads, and when an upload fails or is interrupted, the parts already sent linger in the bucket.
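One common fix is a lifecycle rule that aborts uploads left incomplete for too long. The sketch below only builds the rule as a Python dict; the helper name, rule ID, and 7-day window are illustrative choices, not recommendations. The dict follows the shape that boto3's `put_bucket_lifecycle_configuration` accepts as `LifecycleConfiguration`, and the call itself is left out so the example runs offline.

```python
import json


def abort_incomplete_uploads_rule(days_after_initiation=7, prefix=""):
    """Build one lifecycle rule that aborts stale multipart uploads.

    Hypothetical helper: the ID format and default window are assumptions.
    """
    return {
        "ID": f"abort-incomplete-mpu-{days_after_initiation}d",
        "Status": "Enabled",
        "Filter": {"Prefix": prefix},  # empty prefix applies to the whole bucket
        "AbortIncompleteMultipartUpload": {
            "DaysAfterInitiation": days_after_initiation
        },
    }


lifecycle_configuration = {"Rules": [abort_incomplete_uploads_rule(7)]}
print(json.dumps(lifecycle_configuration, indent=2))
```

Applying a lifecycle configuration replaces the bucket's existing one, so in practice this rule would be merged into the current rule list rather than sent alone.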

3. Request Costs (GET, PUT, LIST, etc.)

Each API call costs fractions of a cent. However, with millions of objects, especially in log-heavy or analytics workloads, these costs add up quickly.

Common mistake: Frequent listing or PUT operations from automated jobs or pipelines that don’t batch effectively.
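A rough sketch of the arithmetic shows why this matters. The prices below are placeholders to be replaced with your region's current rates, and the workload sizes are invented for illustration. The one fixed fact used is that an S3 list call returns at most 1,000 keys.

```python
import math

# Placeholder prices in USD per 1,000 requests; substitute real rates.
LIST_PRICE_PER_1000 = 0.005
PUT_PRICE_PER_1000 = 0.005


def list_calls_needed(object_count, keys_per_call=1000):
    """Number of list calls needed to enumerate object_count keys."""
    return math.ceil(object_count / keys_per_call)


def request_cost(request_count, price_per_1000):
    """Cost in USD of request_count requests at a per-1,000 price."""
    return request_count / 1000 * price_per_1000


# A job that enumerates 50 million objects once an hour for 30 days.
monthly_list_calls = list_calls_needed(50_000_000) * 24 * 30
list_cost = request_cost(monthly_list_calls, LIST_PRICE_PER_1000)
print(f"LIST: {monthly_list_calls:,} calls, ${list_cost:,.2f}")

# Writing 100 million records as one PUT each vs. 1,000 records per object.
unbatched = request_cost(100_000_000, PUT_PRICE_PER_1000)
batched = request_cost(100_000_000 // 1000, PUT_PRICE_PER_1000)
print(f"PUT: ${unbatched:,.2f} unbatched vs ${batched:,.2f} batched")
```

S3 has no batch PUT call, so "batching" here means packing many records into fewer, larger objects before upload.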

4. Cross-Region Replication and Data Transfer

Replicating backups across regions adds inter-region data transfer charges, replication request charges, and a second copy's worth of storage, often without clear visibility.

Red flag: Teams often configure replication once and then forget about it, even when workloads no longer require it.

Related Article: How to Cut Your AWS S3 Costs: Smart Lifecycle Policies and Versioning

S3 Storage Management Tips That Actually Save Money

Use Lifecycle Policies, But Be Sure to Track Them

Automated expiration and transition rules reduce costs only if they're applied correctly. Review them regularly and validate that they cover every prefix, noncurrent versions, and incomplete multipart uploads.
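A review like this can be partly automated. The following sketch checks a lifecycle configuration for a few common gaps. It assumes the configuration is a dict in the shape returned by boto3's `get_bucket_lifecycle_configuration`; the function names and the choice of checks are hypothetical, and the prefix check is a simple heuristic rather than a full coverage analysis.

```python
def rule_prefix(rule):
    """Return the prefix a rule is scoped to, or "" if it is bucket-wide."""
    rule_filter = rule.get("Filter", {})
    return (
        rule_filter.get("Prefix")
        or rule_filter.get("And", {}).get("Prefix")
        or rule.get("Prefix")  # legacy top-level prefix
        or ""
    )


def lifecycle_gaps(config):
    """List likely cost gaps in a lifecycle configuration dict."""
    rules = [r for r in config.get("Rules", []) if r.get("Status") == "Enabled"]
    gaps = []
    if not any("NoncurrentVersionExpiration" in r for r in rules):
        gaps.append("no rule expires noncurrent versions")
    if not any("AbortIncompleteMultipartUpload" in r for r in rules):
        gaps.append("no rule aborts incomplete multipart uploads")
    if not any(r.get("Expiration", {}).get("ExpiredObjectDeleteMarker") for r in rules):
        gaps.append("no rule removes expired delete markers")
    if rules and all(rule_prefix(r) for r in rules):
        gaps.append("every enabled rule is prefix-scoped; other prefixes may be uncovered")
    return gaps


# Illustrative configuration: one rule that only expires current objects under logs/.
example = {
    "Rules": [
        {
            "ID": "expire-logs",
            "Status": "Enabled",
            "Filter": {"Prefix": "logs/"},
            "Expiration": {"Days": 90},
        }
    ]
}
for gap in lifecycle_gaps(example):
    print("-", gap)
```

Run across every bucket on a schedule, a check like this turns "review regularly" into something that does not depend on anyone remembering to do it.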

Choose the Right Storage Tier

Archive Carefully

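Glacier sounds cheap until you retrieve too often or delete early. Retrievals can incur per-GB and per-request fees, and the Glacier storage classes carry minimum storage durations (90 days for Glacier Instant Retrieval and Glacier Flexible Retrieval, 180 days for Glacier Deep Archive), so objects deleted sooner are still billed for the remaining days. Many teams misjudge their actual access patterns.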

⚠️ Only archive what you’re sure won’t be needed soon.
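The cost of deleting early can be estimated with a short sketch. The minimum durations below are the ones S3 documents for the Glacier storage classes. The function name, the price, and the 30-day month are assumptions made for illustration.

```python
# Minimum storage duration in days, keyed by S3 storage class name.
MINIMUM_STORAGE_DAYS = {
    "GLACIER_IR": 90,     # Glacier Instant Retrieval
    "GLACIER": 90,        # Glacier Flexible Retrieval
    "DEEP_ARCHIVE": 180,  # Glacier Deep Archive
}


def early_deletion_charge(size_gb, days_stored, storage_class, price_per_gb_month):
    """Estimate the prorated charge for deleting before the minimum duration.

    price_per_gb_month is a placeholder rate; a month is treated as 30 days.
    """
    minimum_days = MINIMUM_STORAGE_DAYS[storage_class]
    remaining_days = max(0, minimum_days - days_stored)
    return size_gb * price_per_gb_month * remaining_days / 30


# 1,000 GB deleted after 30 days in Glacier Flexible Retrieval,
# at a placeholder price of $0.004 per GB-month.
charge = early_deletion_charge(1000, 30, "GLACIER", 0.004)
print(f"Estimated early deletion charge: ${charge:.2f}")
```

The same data kept past its minimum duration incurs no such charge, which is why short-lived backups are usually a poor fit for archive tiers.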

Monitor and Alert Proactively

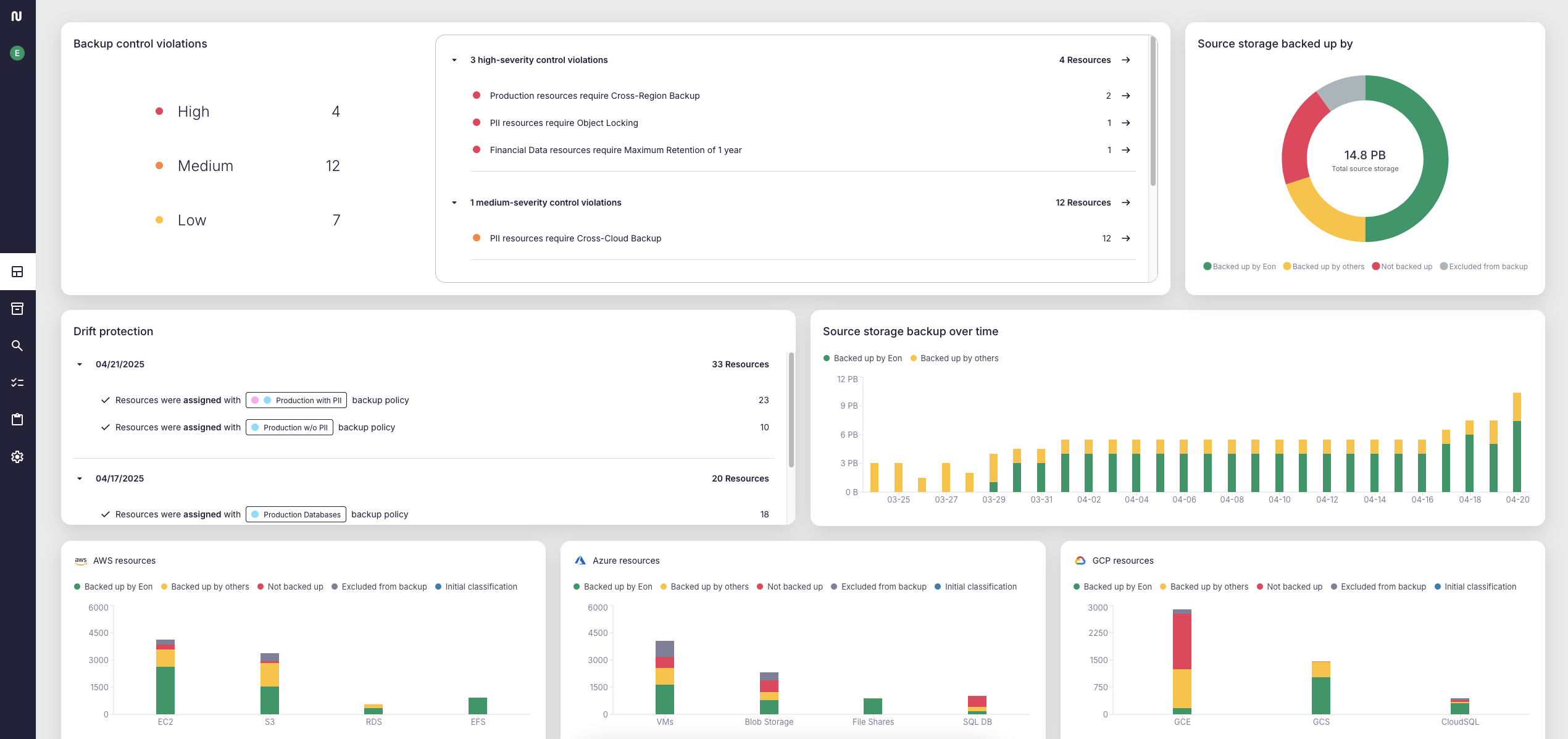

Use AWS Cost Explorer or Eon’s analytics to:

- Track cost spikes

- Spot anomalies across buckets

- Forecast future spend based on current behavior

Related Article: How to Protect Your S3 Backups: Advice from an AWS Storage Expert

S3 Backup Mistakes That Drive Up Costs

Misusing Glacier

Accessing cold storage too often? You’re negating the savings.

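Misconfigured Intelligent-Tiering

Without accurate access patterns, automatic transitions can backfire. Intelligent-Tiering charges a per-object monitoring and automation fee for objects of 128 KB or larger, and smaller objects are never auto-tiered, so a bucket full of small objects may see little benefit. Review access logs and test on a sample before enabling it broadly.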

Small Files, Big Costs

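Millions of small files mean millions of API calls, and some storage classes bill small objects at a minimum size (128 KB in S3 Standard-IA, for example). Bundle data when possible. For tabular or log data, consider columnar formats such as Parquet or ORC instead of many small CSV or JSON files.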
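Bundling can be as simple as packing many small records into one archive before upload. The sketch below uses Python's standard `tarfile` module and keeps everything in memory; the function names and sample data are hypothetical, and the upload step is omitted.

```python
import io
import tarfile


def bundle_small_files(files):
    """Pack a {name: bytes} mapping into one gzip-compressed tar archive."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w:gz") as archive:
        for name, payload in files.items():
            info = tarfile.TarInfo(name=name)
            info.size = len(payload)
            archive.addfile(info, io.BytesIO(payload))
    return buffer.getvalue()


def unbundle(blob):
    """Read an archive produced by bundle_small_files back into a dict."""
    with tarfile.open(fileobj=io.BytesIO(blob), mode="r:gz") as archive:
        return {
            member.name: archive.extractfile(member).read()
            for member in archive.getmembers()
            if member.isfile()
        }


# 1,000 tiny JSON records become a single object, and so a single PUT.
records = {f"events/{i:04d}.json": b'{"id": %d}' % i for i in range(1000)}
bundle = bundle_small_files(records)
print(len(records), "records ->", len(bundle), "bytes in one object")
```

The trade-off is restore granularity: recovering one record means fetching and unpacking the whole bundle, so bundle size should follow how the data is actually restored.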

Missed VPC Endpoints

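Routing S3 traffic through a NAT gateway or over the public internet adds avoidable costs. Use S3 gateway VPC endpoints where possible, since they carry no additional charge. Interface endpoints (AWS PrivateLink) are the ones that incur hourly and per-GB data processing fees.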

How Eon Reduces S3 Backup Costs Automatically

S3 gives you the pieces. Eon gives you the system. We bring visibility, automation, and intelligence to your backup cost strategy:

- Real-time analytics across all buckets and storage classes

- Automated enforcement of lifecycle policies and usage guardrails

- Incremental deduplication to reduce versioning bloat

- Tier-aware retention controls based on real access behavior

- Integrated reporting to help FinOps and engineering align on cost drivers

- Delta Lake and Parquet support for analytics-ready backups that reduce small object overhead

Eon vs. Native S3 Versioning

S3 Backup Cost Control: Final Takeaways

Optimizing your S3 backups isn’t about guesswork or manual audits. It’s about control.

With Eon, you reduce cost, increase visibility, and keep backups secure and recoverable without lifting a finger.

Want to see how? Schedule a demo and we’ll show you.