Resources

All the latest, all in one place. Discover Eon’s breakthroughs, updates, and ideas driving the future of cloud backup.

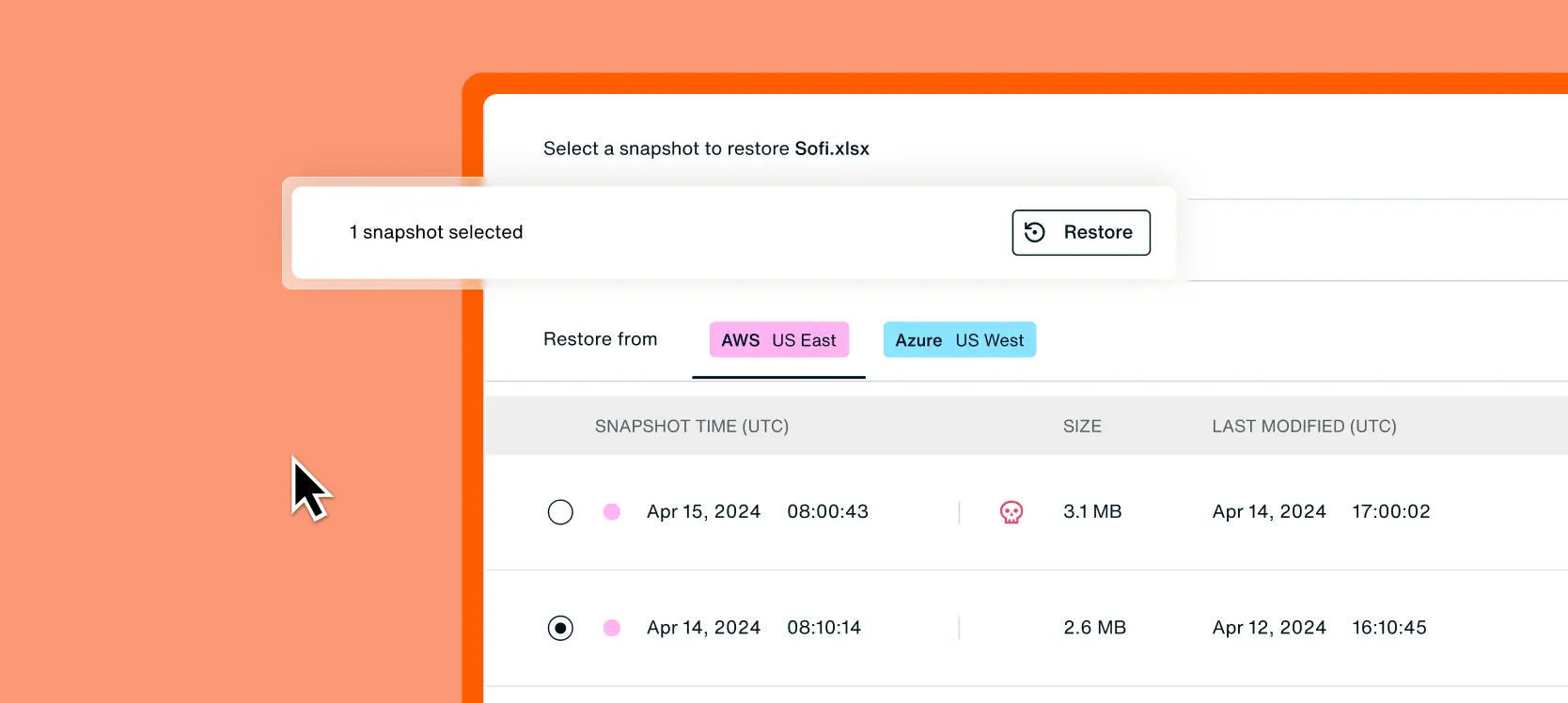

How SoFi Achieved Multi-Region Resilience and >100% ROI in the First Year with Eon

“We save more with Eon than we spend. I never thought I’d say that about a backup provider.”

—CJ Keefe, Director, Corporate Infrastructure, DevOps & SRE, SoFi

About SoFi

SoFi (NASDAQ: SOFI) is a cloud-native financial institution that operates entirely on AWS, running a large multi-region environment designed to support business users and ensure high availability (HA) and disaster recovery (DR), all while meeting strict data residency and compliance requirements.

The Challenge

Before Eon, SoFi’s teams were running into issues that slowed recovery and complicated compliance across five AWS regions:

- Fragmented backups across regions: Manual updates and native snapshots created gaps and inconsistencies.

- Slow compliance updates: Retention changes, including those tied to student-loan servicing workloads, could take hours or days to apply.

- Unpredictable recovery: A prior firewall outage exposed snapshot limitations and resulted in a full-day recovery delay.

- Limited visibility: Teams couldn’t easily see what was protected or validate backup posture at scale.

With a multi-region architecture designed for HA, DR, and business performance, these gaps made it difficult for a regulated financial institution to maintain consistent resilience and compliance across regions.

Why Eon

Eon aligned perfectly with SoFi’s strategy: cloud-native, agentless, and built for automation at scale. The differentiators that mattered most, though, came directly from SoFi’s experience:

Agentless, zero-infrastructure deployment

No agents, no scripts, no regional setup. Everything ran through cloud-native APIs.

Auto-discovery and data classification across all AWS regions

Eon instantly mapped SoFi’s resources across five regions, making deployment nearly invisible.

Instant compliance agility

Retention and policy updates were applied in seconds, rather than hours or days.

“We had to update a retention policy for a new student-loan servicing requirement. By the time I hung up, it was done.”

Reliable, fast recovery

Eon removed SoFi’s dependency on fragile native snapshots, taking recovery time from a full day to minutes.

Fast time to value

Full multi-region rollout completed in a single sprint cycle.

“Most vendors disappear after the deal. Eon stayed in lockstep—every request and compliance tweak handled live.”

The Solution

Eon replaced SoFi’s snapshot-heavy setup with an agentless, automated backup layer that worked across all five AWS regions without scripts, tagging, or regional configuration.

Using auto-discovery, the Eon platform mapped SoFi’s AWS environment in minutes and applied policy-driven protection across accounts and workloads—immutability, encryption, region-specific retention, and logical air-gapping by default.

Backups now live in an immutable, logically air-gapped vault, separate from native snapshots and instantly accessible for search, querying, and restores. SoFi’s previous tool required tickets and waiting. Now, with the Eon platform, everything is fast, self-service, and fully reliable.

Eon also delivered deep data classification and ransomware detection, giving SoFi’s security team new levels of visibility and control.

“My security team’s obsessed with the classification and ransomware protection. It’s the control they’ve wanted for years.”

The Results

Cost Efficiency

- Over 100% ROI versus the previous solution.

- Expanded protection across the entire AWS environment without extra overhead.

Speed & Simplicity

- Full multi-region deployment in under four weeks.

- A recovery process that once took a full day now finishes in under five minutes.

- Engineers can self-serve restores—no AWS tickets, no waiting.

Compliance & Security

- Stronger adherence to PCI and other regulatory retention policies.

- Audit logs and immutability are built into every backup vault.

- Cyber teams rely on Eon’s ransomware protection and data classification to stay ahead of risk.

“We’re always looking at how to make data more intelligent and policies more contextual. Eon is helping us get there.”

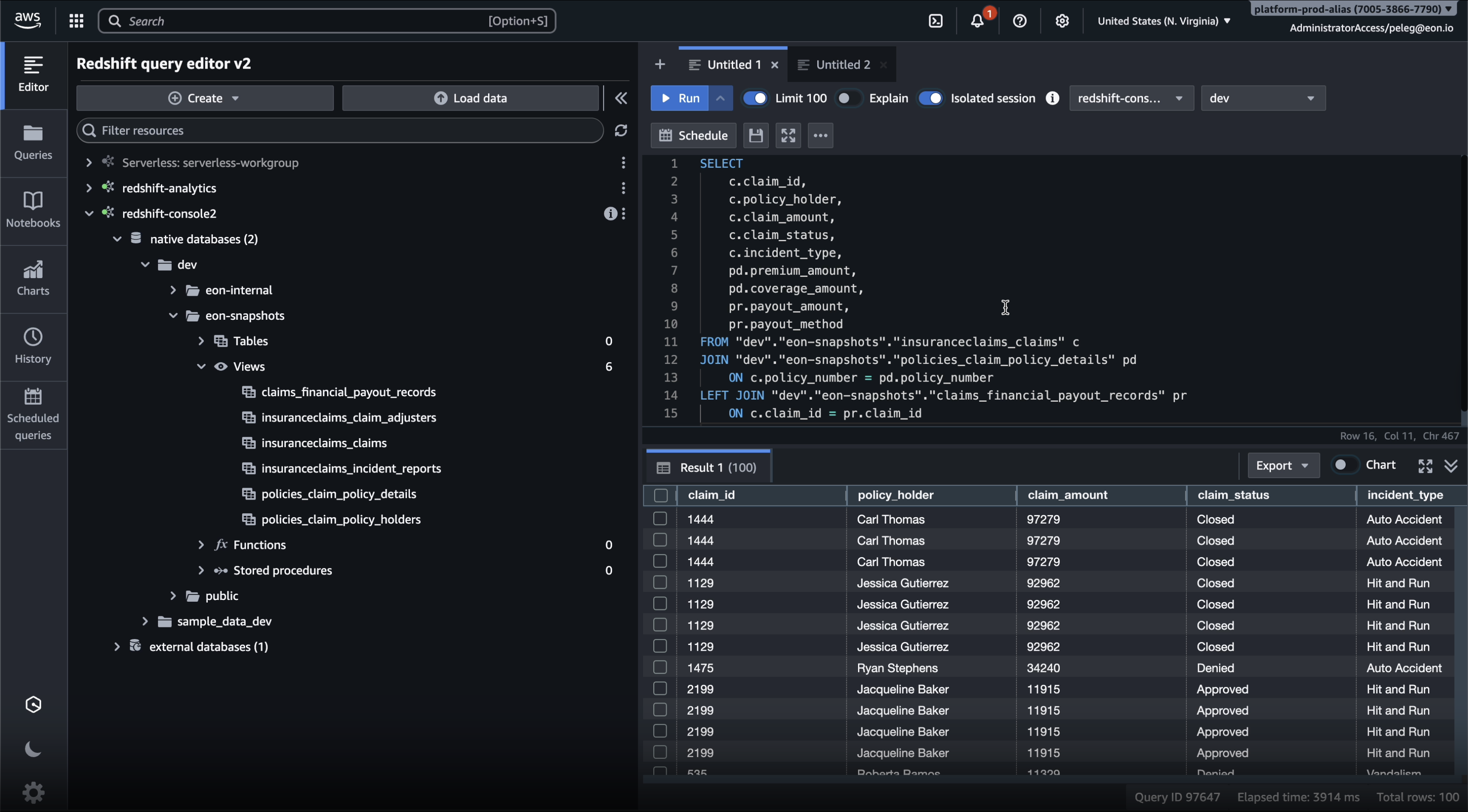

How to Activate Backup Data in Amazon Redshift Without Restores or ETL

Eon integrates with Amazon Redshift, allowing you to query database backup data in place.

Why use backup data for analytics?

Because backups already hold critical data from across the organization.

They include long-range context and consistent point-in-time versions that production systems rarely preserve. When that data becomes usable:

- You answer historical questions without rebuilding environments.

- You cut out redundant analytics copies and pipelines.

- You work from a single governed source of truth.

- You reuse the same datasets for investigations, validation, compliance, and BI.

What does it mean to query backups in Amazon Redshift?

It means backups stop being an expensive copy that sits unused until a restore. Instead, they form a rich data lake that already holds critical data, instantly ready for analytics or AI/ML workflows.

Eon stores database backups as deduplicated tables in Amazon S3. With the Redshift integration, those tables appear directly in Redshift as point-in-time datasets you can query immediately.

If you want to see how a table looked last week, validate a change, or compare before-and-after states, you query the backup itself rather than a rehydrated environment.
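In Redshift, a before-and-after comparison like this would typically be a SQL join between two point-in-time tables on the primary key. The minimal Python sketch below shows the same comparison logic on in-memory rows. The `id` key, the `balance` column, and the sample rows are hypothetical and not part of Eon's schema.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def diff_snapshots(before: List[Row], after: List[Row], key: str) -> Dict[str, List[Any]]:
    """Compare two point-in-time versions of a table by primary key."""
    old = {row[key]: row for row in before}
    new = {row[key]: row for row in after}
    return {
        # Keys present only in the newer snapshot
        "added": sorted(k for k in new if k not in old),
        # Keys present only in the older snapshot
        "removed": sorted(k for k in old if k not in new),
        # Keys in both snapshots whose row contents differ
        "changed": sorted(k for k in new if k in old and new[k] != old[k]),
    }


# Hypothetical sample data: the same table last week and today
last_week = [{"id": 1, "balance": 100}, {"id": 2, "balance": 50}]
today = [{"id": 1, "balance": 120}, {"id": 3, "balance": 10}]
print(diff_snapshots(last_week, today, key="id"))
# {'added': [3], 'removed': [2], 'changed': [1]}
```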

Key benefits of querying backups in Redshift

- Instant point-in-time analytics without waiting for restores

- No ETL pipelines or duplicate analytics clusters

- Trusted baselines for audits, investigations, and debugging

- Lower cost by eliminating redundant environments and copies

- Cross-cloud context via Eon’s unified catalog when needed

How the Redshift integration works

A simple, AWS-native flow:

- Eon continuously stores backups as deduplicated Hive-partitioned tables. Eon introduces a modern backup format that consists of Hive-partitioned tables stored in Amazon S3.

- You choose which backups to share with Redshift. Eon grants read-only access while preserving immutability and governance controls.

- Redshift queries in place. Amazon Redshift discovers and queries the tables directly in S3.

The result: Redshift treats your backups as point-in-time datasets ready for immediate analysis.
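Hive-style partitioning encodes partition values in the object path as `key=value` directory segments, which lets a query engine skip partitions that a filter rules out. The sketch below illustrates picking the newest point-in-time partition on or before a given date. The `backup_date` partition key, the bucket name, and the path layout are hypothetical, not Eon's documented format.

```python
from datetime import date
from typing import Dict, List, Optional


def parse_hive_partitions(path: str) -> Dict[str, str]:
    """Extract key=value partition segments from an object path."""
    parts: Dict[str, str] = {}
    for segment in path.split("/"):
        if "=" in segment:
            key, _, value = segment.partition("=")
            parts[key] = value
    return parts


def latest_as_of(paths: List[str], as_of: date, key: str = "backup_date") -> Optional[str]:
    """Return the newest partition value on or before as_of, or None."""
    candidates = set()
    for path in paths:
        value = parse_hive_partitions(path).get(key)
        if value is not None and date.fromisoformat(value) <= as_of:
            candidates.add(value)
    return max(candidates, key=date.fromisoformat, default=None)


# Hypothetical layout: one partition per daily backup of an "orders" table
paths = [
    "s3://example-backups/orders/backup_date=2025-01-01/part-0000.parquet",
    "s3://example-backups/orders/backup_date=2025-01-08/part-0000.parquet",
    "s3://example-backups/orders/backup_date=2025-01-15/part-0000.parquet",
]
print(latest_as_of(paths, date(2025, 1, 10)))  # 2025-01-08
```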

What teams do with Redshift-queryable backups

Analytics and BI without rebuilds

Run Redshift queries on historical snapshots to validate changes, investigate incidents, or analyze trends without restoring anything first.

Faster audits and compliance checks

Search and query long-retained point-in-time data directly, instead of waiting on exports or restore jobs.

Operational insight and investigations

Backups provide a clean historical truth for debugging, root-cause analysis, and validating system behavior over time.

How Eon keeps backups usable and secure

Making backups queryable does not mean making them risky.

Baseline protections stay on by default:

- Immutable backups

- Logical air gaps

- Read-only analytics access

- RBAC and audit logs

- Autonomous Cloud Backup Posture Management (CBPM)

Analysts get governed access to historical truth. Security teams stay in control.

Want to see this live?

Get a demo, and we’ll walk you through Redshift-queryable backups end to end.


NETGEAR Cuts Backup Costs 35% and Accelerates 10TB Recovery by 88% with Eon

“Switching to Eon gave us the cloud visibility and recovery speed we’d been missing for years.”

—Satish Nair, Sr. Manager IT, NETGEAR

About NETGEAR

NETGEAR is a global provider of networking and connectivity solutions with a growing AWS footprint across EC2 workloads and large SQL Server databases. As cloud adoption accelerated and new acquisitions expanded their environments, NETGEAR needed a unified, cloud-native approach to control costs, improve visibility, and shorten recovery times across the business.

The Challenge

After nearly eight years of using a legacy provider in a traditional data center environment, the shift to AWS exposed several limitations:

- Limited visibility into backup spend: Previous tools provided little transparency into actual backup costs or usage.

- Slow recovery: Instances larger than 10TB could take up to 24 hours to recover, significantly impacting recovery objectives.

- Operational overhead: Multiple components and moving parts increased both cost and management burden.

- Delayed improvements: Requests for critical features often went unanswered, slowing modernization efforts.

- Corporate cost pressure: Leadership mandated meaningful infrastructure savings and greater control over cloud spend.

Their previous solution wasn’t built for cloud scale, and visibility gaps made cost control and DR planning increasingly difficult.

Why Eon

Cloud-native from day one

NETGEAR chose Eon because it was purpose-built for cloud workloads rather than retrofitted from on-premises architecture. NETGEAR also wanted to use its backup data as a backend data lake for generating insights with AI technologies.

“Eon enables backups that reflect the way our cloud truly works: faster, more transparent, and easier to operate.”

Real-time cost clarity

Eon’s Cost Explorer gave NETGEAR instant insight into spend by resource and application, eliminating the delays and inaccuracies of manual reporting.

“Eon heard our requirements and mobilized the right team to deploy the solution, adding NETGEAR-requested features in days, flawlessly and without errors.”

A simple, fast deployment

Eon integrated cleanly into their AWS environment. The same team that managed their previous tool deployed Eon in under a week with virtually no retraining.

The Solution

NETGEAR deployed Eon across its AWS workloads as part of a broader shift to a cloud-first backup strategy. With Eon, NETGEAR now benefits from:

- A cloud-native, agentless architecture aligned with AWS best practices

- Optimized recovery for large, business-critical databases

- Real-time spend visibility through Cost Explorer

- Automated coverage and posture monitoring aligned to Cloud Backup Posture Management (CBPM) principles

- Monthly cadence reviews covering usage, cost, and roadmap updates

The Results

35% reduction in backup storage costs

Eon’s storage model and automated policy management immediately reduced NETGEAR’s backup spend, helping them meet a company-wide cost mandate.

88% faster recovery for a 10TB SQL Server database

Recovery dropped from 24 hours to under three hours, strengthening their disaster recovery posture and reducing operational risk.

“Cutting 10TB recovery from almost a full day to a few hours strengthened our confidence in our disaster recovery strategy.”

Accurate, real-time cost visibility

Cost Explorer eliminated manual reporting and enabled chargeback by instance, application, and team.

Operational simplicity from day one

Deployment was quick, onboarding was minimal, and the team immediately gained clearer visibility and easier day-to-day management.

A partnership that accelerates innovation

The speed, transparency, and collaboration continued beyond deployment, giving NETGEAR confidence in both the platform and the team behind it.


How to Prepare for the Next Cloud Outage

Cloud outages were up by nearly one-fifth in 2024, according to a Parametrix report, and every one of them was a reminder that anything can fail—even the most trusted platforms. Outages disrupt operations, revenue, and customer trust across every industry. Whether you’re running healthcare systems, employee payroll, or SaaS infrastructure, downtime can have real business and human impact.

This article shares practical, cost-aware steps cloud teams can take to strengthen their cloud outage preparedness and stay online when the next outage hits.

Why do traditional backup and DR plans fail during cloud outages?

Because most backups live in the same cloud and depend on the same control plane, they go offline when the provider does.

Most teams understand outages are inevitable—but the real problem isn’t data loss, it’s data inaccessibility. Even with multi-AZ or cross-region replication, teams often face Insufficient Capacity Errors (ICE) when everyone rushes to restore at once.

During major outages, even teams with cross-region DR plans often hesitate to act. Many assume the provider will recover faster than they can rebuild, given the complexity of redeploying full environments—networks, compute, and permissions—just to reach their backups. Others worry the secondary region won’t have enough capacity to handle the surge of recoveries, creating a ripple effect that stalls recovery altogether.

So, the real question isn’t “How fast can we recover?”—it’s “Can we access our data when we can’t access the cloud?”

What does real cloud outage preparedness look like?

True cloud outage preparedness means your data remains accessible, portable, and recoverable, without waiting for your provider to come back online.

Being “ready” isn’t just about a DR plan on paper. It’s about being able to:

- Query your backup data even if a cloud console or API is down

- Recover individual tables, objects, or files instantly

- Restore to another region—or another cloud—without rewriting your architecture

If those actions aren’t possible today, your backups still depend on your provider’s availability and aren’t built for continuity.

Why mindset matters as much as architecture

Technology isn’t the only reason outages hurt. In critical moments, organizations freeze.

Engineers know what should happen—spin up the secondary region, validate access—but approval chains, budget freezes, and risk aversion often slow everything down.

That’s why outage planning isn’t only about infrastructure. It’s about designing processes that remove hesitation, giving teams safe, automated ways to access data when everything else feels risky.

How can you build cloud outage-ready backups?

Start by focusing on data accessibility in addition to full environment recovery.

Step 1: Rethink where your backups live

Don’t rely solely on local copies in the same region as production. Ensure at least one copy exists in another region—and ideally, it’s written directly to that region rather than duplicated locally first.

Step 2: Make accessibility the goal

You shouldn’t need to rebuild infrastructure just to view data. Backups should be searchable, queryable, and immediately usable.

Step 3: Extend your recovery surface

Cross-region isn’t enough if both regions belong to the same provider. Add cross-cloud options (AWS ⇆ Azure ⇆ GCP) to stay reachable even during wider disruptions.

These practices form the backbone of a strong cloud resilience strategy—one designed for real-world workloads, not ideal scenarios.
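One way to test Steps 1 and 3 against your own inventory is to check, per resource, whether any backup copy lives outside the production region and outside the production cloud. This is a minimal sketch over a hypothetical inventory format (`Resource`, `BackupCopy`); in practice the inventory would come from your backup tooling.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Resource:
    resource_id: str
    cloud: str   # e.g. "aws", "azure", "gcp"
    region: str  # production region


@dataclass(frozen=True)
class BackupCopy:
    resource_id: str
    cloud: str
    region: str


def coverage_gaps(resource: Resource, copies: Iterable[BackupCopy]) -> List[str]:
    """Report which isolation levels a resource's backups are missing."""
    mine = [c for c in copies if c.resource_id == resource.resource_id]
    gaps = []
    # Step 1: at least one copy outside the production region (any cloud counts)
    if not any(c.cloud != resource.cloud or c.region != resource.region for c in mine):
        gaps.append("no copy outside the production region")
    # Step 3: at least one copy with a different provider
    if not any(c.cloud != resource.cloud for c in mine):
        gaps.append("no copy outside the production cloud")
    return gaps


db = Resource("orders-db", "aws", "us-east-1")
copies = [
    BackupCopy("orders-db", "aws", "us-east-1"),
    BackupCopy("orders-db", "aws", "us-west-2"),
]
print(coverage_gaps(db, copies))  # ['no copy outside the production cloud']
```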

Step 4: Keep your continuity plan practical

Resilience doesn’t need to double your infrastructure cost. Traditional cross-region backups require keeping two full copies—one local, one remote—doubling storage and network costs. Eon avoids that by writing directly to the remote region, eliminating redundant local storage. Combined with incremental snapshots and compression, this architecture reduces cross-region spend without sacrificing restore performance.
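The storage side of that comparison is simple arithmetic. The sketch below compares keeping a local plus a remote copy against writing only to the remote region. The per-GB prices are made-up placeholders, not real provider pricing, and cross-region transfer is assumed equal in both cases so that only the storage difference shows up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prices:
    storage_per_gb_month: float  # placeholder, not a real provider rate
    transfer_per_gb: float       # placeholder cross-region transfer rate


def monthly_cost(stored_gb: float, changed_gb: float, copies: int, p: Prices) -> float:
    """Storage for every retained copy plus shipping changed data across regions once."""
    storage = copies * stored_gb * p.storage_per_gb_month
    transfer = changed_gb * p.transfer_per_gb
    return storage + transfer


prices = Prices(storage_per_gb_month=0.05, transfer_per_gb=0.02)
local_plus_remote = monthly_cost(10_000, 500, copies=2, p=prices)
remote_only = monthly_cost(10_000, 500, copies=1, p=prices)
print(round(local_plus_remote, 2), round(remote_only, 2))
```

Incremental snapshots and compression would shrink `stored_gb` and `changed_gb` in both scenarios; dropping the redundant local copy saves storage on top of that.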

How do native cloud tools handle this today—and why is it so costly?

Each major provider (AWS, Azure, Google Cloud) offers native snapshot and replication tools, but they all share the same limitation: backups are stored and managed inside the same control plane that can fail.

To maintain true region isolation, you need to replicate snapshots or objects across regions manually—or automate the process with scripts. That means managing multiple policies, storage accounts, IAM roles, and egress costs.
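For EBS volumes, the scripted approach usually means calling the EC2 `CopySnapshot` API from the destination region for each snapshot that has not been copied yet. The sketch below only builds the request parameters (`SourceRegion`, `SourceSnapshotId`, `Description`) as plain dicts. How you track `already_copied` is left as an assumption, and the snapshot IDs are made up.

```python
from typing import Dict, Iterable, List, Set


def plan_snapshot_copies(
    source_region: str,
    snapshot_ids: Iterable[str],
    already_copied: Set[str],
) -> List[Dict[str, str]]:
    """Build one CopySnapshot request per snapshot not yet in the DR region."""
    requests = []
    for snap_id in sorted(snapshot_ids):
        if snap_id in already_copied:
            continue  # skip snapshots that already exist in the destination
        requests.append({
            "SourceRegion": source_region,
            "SourceSnapshotId": snap_id,
            "Description": f"DR copy of {snap_id} from {source_region}",
        })
    return requests


plan = plan_snapshot_copies(
    "us-east-1",
    ["snap-0aaa", "snap-0bbb", "snap-0ccc"],  # hypothetical IDs
    already_copied={"snap-0bbb"},
)
for req in plan:
    print(req["SourceSnapshotId"])
# A real run would then call, per request, something like:
# boto3.client("ec2", region_name="us-west-2").copy_snapshot(**req)
```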

It works, but it’s expensive and hard to maintain. Teams pay for duplicate data copies, cross-region transfer fees, and the ongoing management of complex retention policies and IAM roles.

Many teams accept the risk—not because they want to, but because true multi-region disaster recovery has been hard to achieve without major cost or complexity.

Eon removes those constraints by automating region- and cloud-level redundancy while reducing data transfer and storage duplication.

How does Eon simplify cloud outage readiness?

Most tools stop at replication. Eon goes further—turning backups into live, portable data assets that stay accessible across clouds and regions.

Here’s how Eon makes outage readiness simple, efficient, and accessible without changing how you already back up data.

- Independent control plane: Manage and restore data even when a cloud provider’s console is offline.

- Cross-region and cross-cloud backups: Stored in open, queryable formats (Apache Parquet) with metadata cataloging via Delta Lake and Apache Iceberg.

- Continuous data access: Query backups instantly—no rehydration, no provisioning, no waiting.

- Cost efficiency: Backups can be stored once and accessed anywhere, reducing duplicate storage without sacrificing coverage. Eon supports both single- and multi-copy approaches to meet compliance or performance needs.

Together, these capabilities simplify even complex multi-region disaster recovery and give teams control over their data—no matter what happens behind the scenes.

A global fast-food chain used Eon to keep analytics and billing systems online during a regional cloud disruption.

What to Do Before the Next Cloud Outage: Your Cloud Outage Recovery Plan

Test now, not during the incident.

- Map your backup dependencies: Where does your control plane actually live?

- Test accessibility: Can you query or recover if your main region goes down?

- Add a cross-region or cross-cloud target: One remote copy dramatically cuts risk.

- Define “outage mode”: Decide who acts first and how you’ll access data.

- Run outage drills: Measure time to access data, not just restore.

- Review cost and coverage quarterly: Keep your continuity plan sustainable.
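A drill can be as simple as timing how long it takes to read a sample of backup data, falling back through locations in order. In this sketch the read functions are hypothetical stand-ins. In a real drill, each would fetch a known record from a specific region or cloud.

```python
import time
from typing import Callable, Dict, List, Tuple


def run_access_drill(targets: List[Tuple[str, Callable[[], object]]]) -> Dict[str, object]:
    """Try each backup location in order and time the first successful read."""
    start = time.perf_counter()
    attempts: List[Tuple[str, str]] = []
    for name, read_sample in targets:
        try:
            read_sample()
        except Exception as exc:  # a drill records any failure and moves on
            attempts.append((name, f"failed: {exc}"))
            continue
        attempts.append((name, "ok"))
        return {"served_by": name, "seconds": time.perf_counter() - start, "attempts": attempts}
    return {"served_by": None, "seconds": time.perf_counter() - start, "attempts": attempts}


def primary_read():
    raise ConnectionError("us-east-1 unavailable")  # simulated outage


def secondary_read():
    return [{"order_id": 42}]  # simulated successful read


result = run_access_drill([("primary", primary_read), ("secondary", secondary_read)])
print(result["served_by"], f"{result['seconds']:.4f}s", result["attempts"])
```

Recording the time to the first successful read, rather than the time to a full restore, matches the drill goal of measuring data access.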

Whether you’re multi-region, multi-cloud, or just getting started, the most important step is testing. Outage resilience isn’t theory—it’s practice.

Eon keeps your data continuously accessible across clouds and regions, so you can keep working without waiting for recovery.

Your backups stay live, searchable, and ready to restore—no matter what happens in the cloud.

Request a demo to see how teams stay online when the unexpected hits.

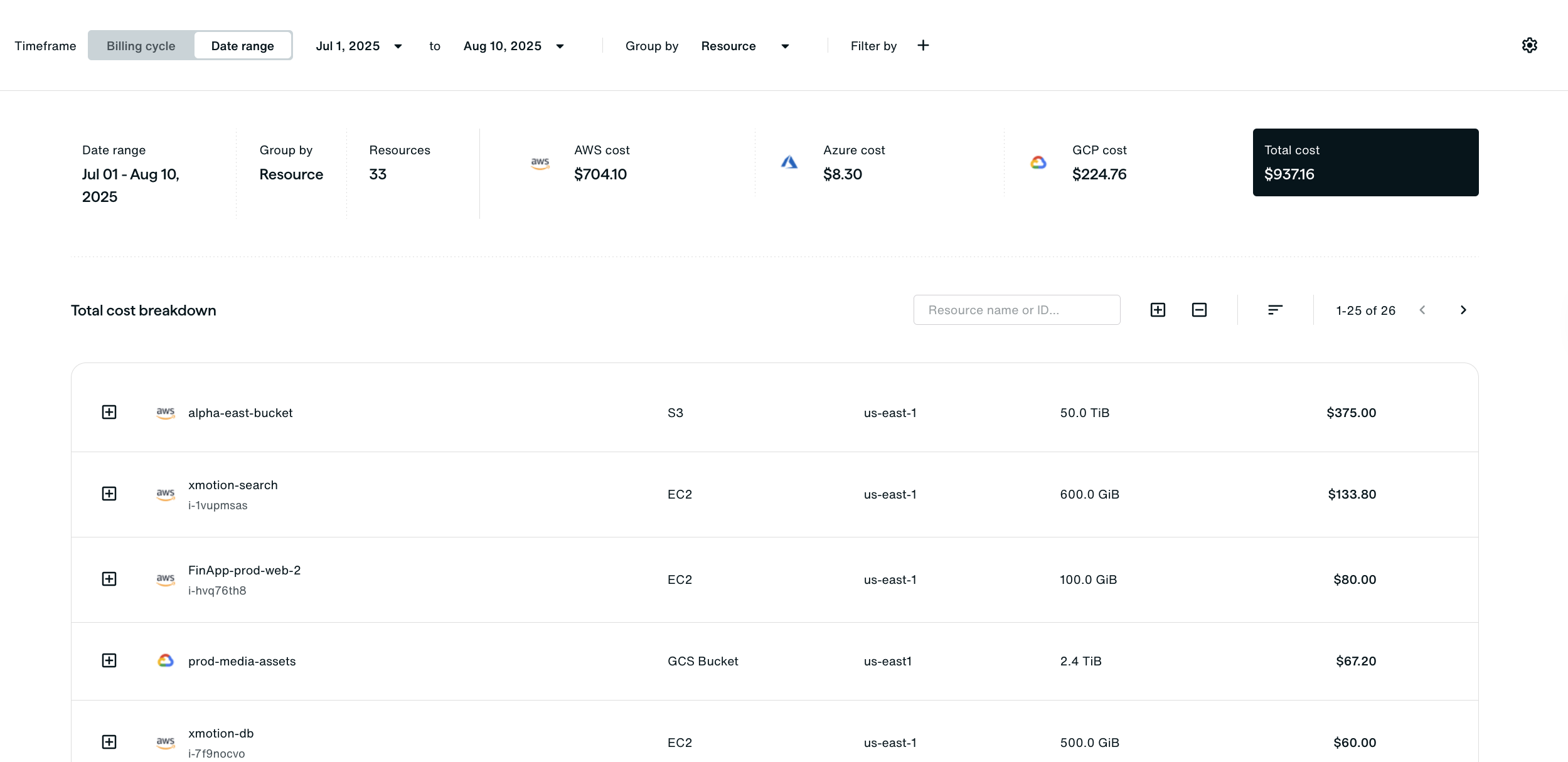

Eon Cost Explorer: Instant Visibility Into Backup Spend Across Clouds

The Problem: Cloud Backup Bills Are Hard to Read

Cloud provider bills are massive: thousands of line items, multiple accounts or subscriptions, and native cost explorers that lump backup costs in with everything else. And all that spend often grows unexpectedly due to backup sprawl or outdated snapshots. Multi‑cloud teams often end up juggling:

- Multiple logins and dashboards

- Endless CSV exports

- Homegrown spreadsheets that still leave questions unanswered

Eon’s Solution: Cost Explorer API and Dashboard

Eon’s new Cost Explorer API and Dashboard empower you to see exactly who’s driving backup costs, spot anomalies in minutes, and allocate spend to the right teams, all without spreadsheets or manual reporting. Drill down with the API, or get at‑a‑glance trends in the Dashboard for fast, confident chargeback and cost control.

One customer told us that reconciling a 5,000‑line Azure bill to a single backup server used to be almost impossible. With Eon, they can now roll up that insight to management in minutes and even spot opportunities to archive or optimize storage.

Because Eon is a fully managed SaaS backup solution, your Cost Explorer view reflects the entire cost of your backups, including storage and compute, without hidden charges buried in provider invoices. You’re not running backup jobs in your own accounts, so nothing is missing from the bill.

How to Track Backup Costs by Account, Resource, and Cloud

Eon Cost Explorer was built for CloudOps and FinOps teams who need clear answers fast. Here’s how it works.

Step 1: See Backup Spend at a Glance

Open the Cost Dashboard, and you immediately see:

- Which accounts or business units are spending the most

- Which resource types (VMs, storage, databases) drive that spend

- Trends over your billing cycle, so spikes are obvious

Step 2: Drill into High‑Spending Accounts

From the dashboard, you can jump into Cost Explorer to investigate further. Grouping by account quickly confirms which team or business unit is responsible for the bulk of the spend.

A common use case is chargeback: Central IT wants to bill each team for the backup resources they control. Before Eon, that meant weeks of manual reporting; now, teams can generate an account-level backup report in minutes and send it straight to the owner.
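The chargeback roll-up itself is a group-by over cost records. This sketch assumes a hypothetical export with `account` and `cost_usd` fields. It does not reflect the Cost Explorer's actual schema.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping


def chargeback_by_account(records: Iterable[Mapping]) -> Dict[str, float]:
    """Sum backup cost per account, highest spender first."""
    totals: Dict[str, float] = defaultdict(float)
    for record in records:
        totals[record["account"]] += record["cost_usd"]
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return {account: round(total, 2) for account, total in ranked}


records = [
    {"account": "payments", "cost_usd": 120.0},
    {"account": "analytics", "cost_usd": 30.5},
    {"account": "payments", "cost_usd": 45.5},
]
print(chargeback_by_account(records))  # {'payments': 165.5, 'analytics': 30.5}
```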

Step 3: Identify High‑Cost Backup Resources and Anomalies

The real power comes when you drill down to individual resources.

You might notice that one Azure subscription is spending far more on backups than expected. By grouping by resource type or even resource ID, you can pinpoint the culprit—maybe a restore environment with multiple 1 TB disks or a forgotten bucket eating up storage costs.

One team we worked with uncovered exactly that. They identified a single oversized VM as the primary cause of a significant spike in Azure backup spend. Archiving unnecessary data and adjusting the backup policy saved them thousands.
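A simple way to surface spikes like that is to compare each resource's cost with the previous period. The thresholds (`ratio`, `min_increase`) and the resource names are hypothetical. This is an illustrative heuristic, not Eon's anomaly detection.

```python
from typing import Dict, List, Tuple


def flag_spikes(
    previous: Dict[str, float],
    current: Dict[str, float],
    ratio: float = 1.5,
    min_increase: float = 100.0,
) -> List[Tuple[str, float, float]]:
    """Flag resources whose cost jumped by both a relative and an absolute margin."""
    flagged = []
    for resource, cost in current.items():
        prior = previous.get(resource, 0.0)
        increase = cost - prior
        # New resources (prior == 0) only need to clear the absolute threshold
        if increase >= min_increase and (prior == 0 or cost / prior >= ratio):
            flagged.append((resource, prior, cost))
    # Largest absolute increase first
    return sorted(flagged, key=lambda item: item[2] - item[1], reverse=True)


last_month = {"vm-a": 200.0, "vm-b": 50.0, "disk-c": 80.0}
this_month = {"vm-a": 900.0, "vm-b": 60.0, "disk-c": 85.0, "restore-env": 300.0}
for resource, before, after in flag_spikes(last_month, this_month):
    print(f"{resource}: {before:.2f} -> {after:.2f}")
```

Requiring both a relative and an absolute increase keeps small resources with noisy percentages from flooding the list.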

Step 4: Automate and Integrate with the Cost Explorer API

For teams who need more than an at-a-glance view, use the Cost Explorer API to:

- Pull backup cost data in multiple formats and views

- Integrate with FinOps platforms or homegrown cloud management tools

- Schedule exports of custom reports for finance or leadership

- Filter by tag, resource, account, or cloud to drill into specific cost drivers

Access is controlled via Eon’s RBAC Data Access Control, so you can create users who only see costs for their part of the environment.
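The filters listed above amount to narrowing cost records by cloud, account, or tag, within whatever scope a user is allowed to see. The sketch below applies those filters client-side to records already pulled. The field names and the `allowed_accounts` parameter (standing in for an RBAC scope) are assumptions. This is not the Cost Explorer API itself, where Eon enforces access control rather than the caller.

```python
from typing import Iterable, List, Mapping, Optional, Set, Tuple


def filter_costs(
    records: Iterable[Mapping],
    *,
    cloud: Optional[str] = None,
    account: Optional[str] = None,
    tag: Optional[Tuple[str, str]] = None,
    allowed_accounts: Optional[Set[str]] = None,
) -> List[Mapping]:
    """Return records matching every filter that was supplied."""
    matches = []
    for record in records:
        # Hypothetical RBAC-style scope: drop accounts the user may not see
        if allowed_accounts is not None and record["account"] not in allowed_accounts:
            continue
        if cloud is not None and record["cloud"] != cloud:
            continue
        if account is not None and record["account"] != account:
            continue
        if tag is not None:
            key, value = tag
            if record.get("tags", {}).get(key) != value:
                continue
        matches.append(record)
    return matches


records = [
    {"account": "payments", "cloud": "aws", "cost_usd": 120.0, "tags": {"team": "core"}},
    {"account": "analytics", "cloud": "azure", "cost_usd": 30.5, "tags": {"team": "data"}},
    {"account": "payments", "cloud": "azure", "cost_usd": 45.5, "tags": {"team": "core"}},
]
scoped = filter_costs(records, tag=("team", "core"), allowed_accounts={"payments"})
print(sum(r["cost_usd"] for r in scoped))  # 165.5
```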

How Eon Makes Backup Cost Management Simple

With Eon Cost Explorer API & Dashboard, you get:

- Instant cost visibility across all clouds

- Accurate chargeback/showback reporting

- Early detection of waste or anomalies

Your backup bill stops being a mystery and starts driving action.

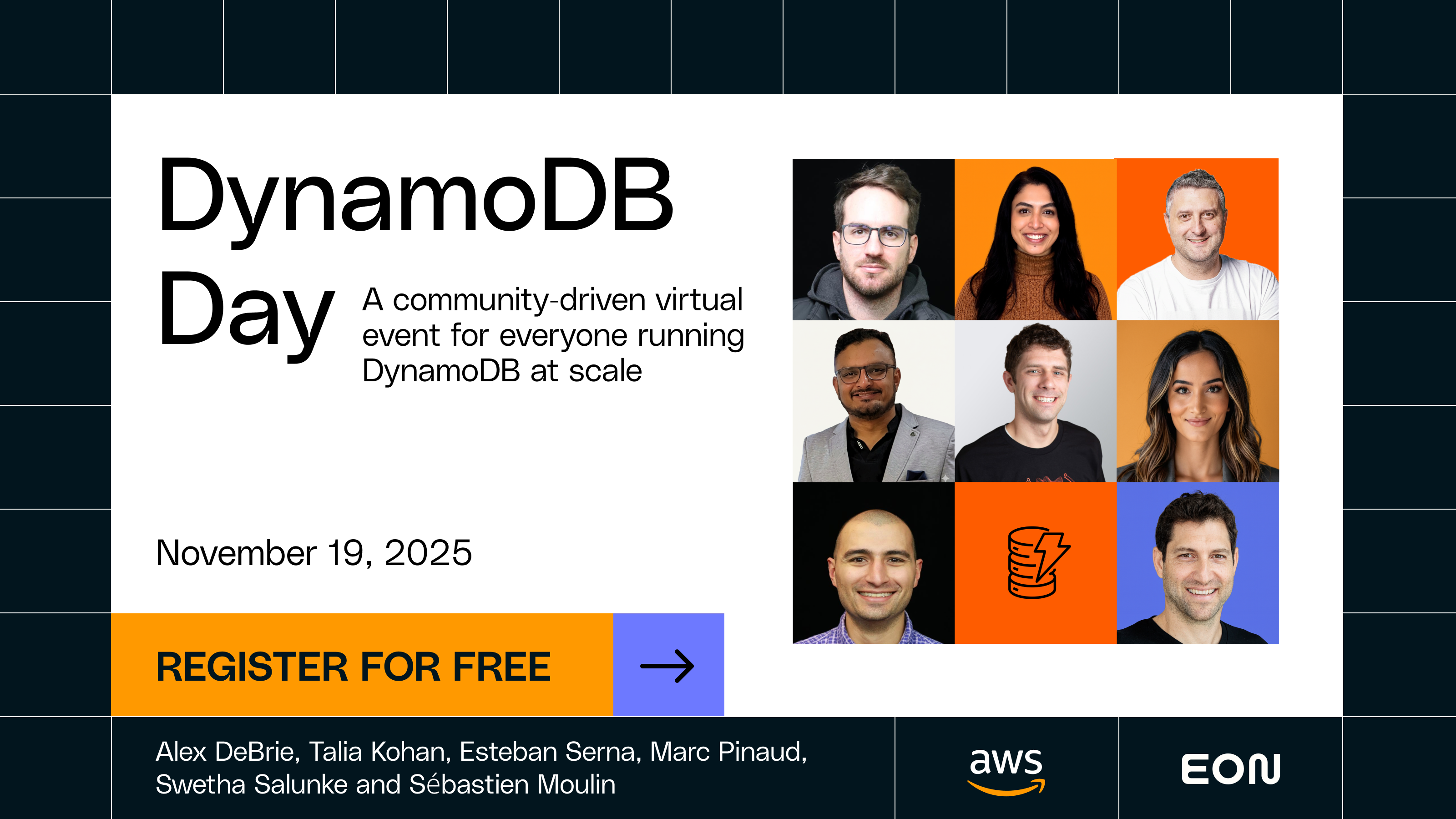

Join DynamoDB Day 2025: Real-World Lessons from AWS and the DynamoDB Community

What Is DynamoDB Day 2025?

DynamoDB Day 2025 is a community-first, free virtual event for the builders and operators behind today’s most scalable applications.

Happening November 19, it brings together AWS experts, DynamoDB practitioners, and engineers running massive workloads to share real-world lessons on performance, cost, and resilience.

Who Should Attend DynamoDB Day?

Anyone building, operating, or optimizing workloads on DynamoDB, including:

- Database Engineers and Backend Developers

- SREs, DBAs, and Platform Engineers

- Cloud Architects and DevOps professionals focused on performance, cost, and resilience

Even if you can’t join live, everyone who registers gets access to the full session recordings.

What You’ll Learn at DynamoDB Day

1. What’s New in DynamoDB (Marc Pinaud, AWS)

Explore the latest DynamoDB features, real-world use cases, and adoption patterns shaping how modern teams build and scale applications.

2. Cost-Aware Data Modeling (Alex DeBrie, DynamoDB Book Author)

Learn how to model data for predictable performance and billing—and avoid the design mistakes that quietly drive up RCUs and WCUs.

3. Migrating to DynamoDB Using AI-Guided Workflows (Esteban Serna, AWS)

See how to migrate a live application from MySQL to DynamoDB with AI-guided workflows for modeling, refactoring, and data movement.

4. How Ubisoft Scales DynamoDB for Millions of Players (Sébastien Moulin, Ubisoft Online Services)

Learn how Ubisoft migrated 20 billion records with zero downtime using AWS CDK, Lambda, and DMS—plus how their teams continue to optimize and scale for millions of players worldwide.

5. Protecting and Recovering DynamoDB Data (Ron Kimchi, Eon)

Discover what actually works—and what doesn’t—for keeping DynamoDB data resilient, secure, and recoverable at enterprise scale.

6. Running DynamoDB at Scale (Panel Discussion)

Hear from Alex DeBrie, Esteban Serna, Swetha Salunke (AWS), Talia Kohan (Postman), Liore Shai (Eon), and Devarpi Sheth (Capital One) on how real teams monitor, tune, and protect DynamoDB in production—and how AI is shaping the next generation of operations.

Why Attend DynamoDB Day 2025

Because it’s one day of real, hard-won lessons from AWS and the DynamoDB community.

You’ll walk away knowing:

- How to model data for predictable performance and cost

- How teams handle scaling and incident response in production

- How to modernize from relational to DynamoDB with AI-guided tooling

- How to keep DynamoDB resilient, compliant, and recoverable

- How global enterprises like Ubisoft and Capital One design for scale, reliability, and performance

How Eon Supports DynamoDB Teams

DynamoDB teams rely on Eon to protect and unlock the value of their backup data.

Eon’s cloud backup and recovery platform automates DynamoDB backups, keeping them immutable, queryable, and cost-efficient—turning backup data into a usable resource for analytics, compliance, and resilience.

We’re proud to partner with AWS and the DynamoDB community to make resilience, recovery, and data access simpler for everyone.

Learn more about Eon’s Fast, Flexible Amazon DynamoDB Backups.

How to Register for DynamoDB Day

- Event Date: November 19, 2025

- Cost: Free

- Location: Virtual (live + on-demand recordings)

- Register here

Even if you can’t make it live, registering ensures you’ll receive all the session recordings afterward.

DynamoDB Day FAQs

Is DynamoDB Day really free?

Yes. Registration and recordings are 100% free.

Do I need to attend live?

No. Register and you’ll automatically get all the session recordings.

Who’s hosting DynamoDB Day?

It’s a community-driven event featuring AWS, DynamoDB experts, and partners like Eon.

Will there be Q&A or networking?

Yes. Each session includes live Q&A, and attendees can interact with speakers throughout the event.

How do I join?

Just register here — we’ll send your access details and reminders before the event.

Over-Backing Up Your Cloud Data? Cloud Backup Posture Management (CBPM) Is the Fix

Why Over-Backing Up Cloud Data Is So Common

Redundant cloud backups are surprisingly common, and they’re draining more than just your budget.

Cloud storage makes it easy to back up everything. Sometimes too easy. Without guardrails, manual tagging mistakes, broad retention policies, and “just in case” habits pile up into mountains of duplicate data.

The fallout? Budgets balloon, recovery slows, compliance confidence drops, and FinOps reporting turns into a guessing game.

The fix isn’t more copies; it’s smarter backup posture management. That’s where Cloud Backup Posture Management (CBPM) comes in, automating policy enforcement and keeping only the backups that matter.

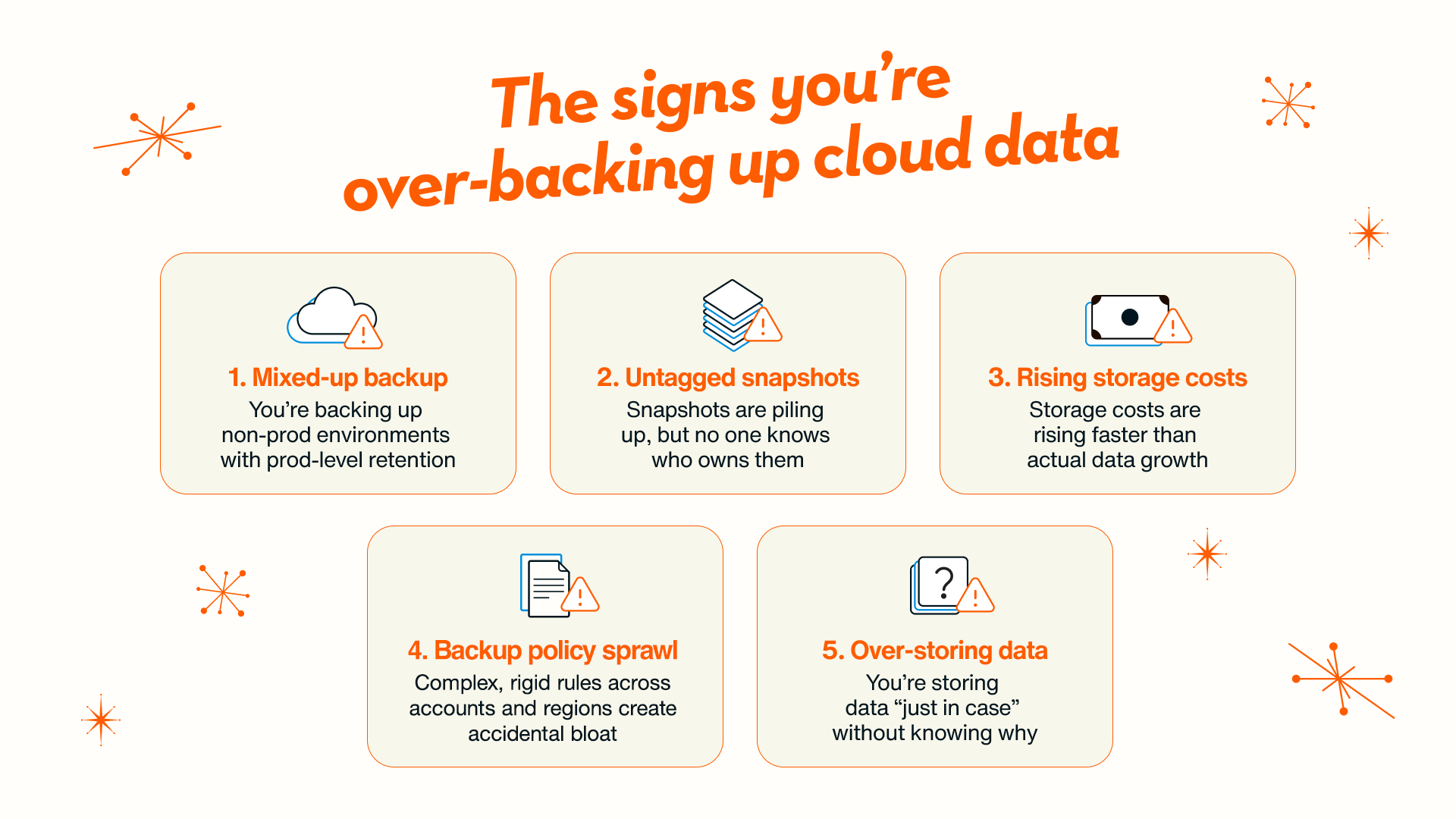

5 Signs You’re Over-Backing Up Cloud Data

No one sets out to over-back up, but in fast-moving cloud environments, it happens faster than you think. Tagging slips, policies misfire, retention drags on too long, and visibility drops to zero. Before long, costs and complexity spiral.

1) Mixed-Up Backup Types

One forgotten checkbox, and suddenly your dev environment is treated like mission-critical prod—and you don’t notice until the bill lands. Tags drift out of date, policies follow suit, and costs spike.

2) Untagged Snapshots

Snapshots pile up, but no one knows who owns them. Without accurate ownership, they stay in storage indefinitely, quietly adding to your bill.

3) Rising Storage Costs

Your storage bill is climbing faster than your actual data growth, often because you’re paying for redundant or stale backups you’ll never use.

Related webinar: Demystify the hidden costs of cloud data retention

4) Backup Policy Sprawl

Juggling multiple accounts, regions, services, and teams is a recipe for chaos. Policies become overly rigid or endlessly complex, with overrides and legacy rules no one dares touch because “they might be important.” One bad setting, like retaining hourly snapshots of staging for a year, can triple your storage footprint.
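A quick count shows why that setting matters: an hourly schedule kept for a year retains 24 times as many snapshots as a daily one. Snapshot count is only a rough proxy for cost, since incremental snapshots store changed blocks and actual storage depends on how much data changes. The schedules below are examples.

```python
HOURS_PER_DAY = 24


def retained_snapshots(interval_hours: int, retention_days: int) -> int:
    """Number of snapshots alive at steady state for a fixed schedule."""
    return (retention_days * HOURS_PER_DAY) // interval_hours


print(retained_snapshots(interval_hours=1, retention_days=365))   # 8760
print(retained_snapshots(interval_hours=24, retention_days=365))  # 365
print(retained_snapshots(interval_hours=24, retention_days=7))    # 7
```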

5) Over-Storing Data

Fear of losing data keeps backups around far longer than they should. That 180-day retention policy for last year’s sprint? Still sitting on your bill, quietly eating budget. Without a central view, zombie backups pile up like digital junk drawers, and traditional tools won’t even alert you when you’re backing up the same volume across three different policies.
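Detecting that last case is mostly bookkeeping: index policy assignments by resource and flag any resource covered more than once. The `(policy, resource)` pairs below are a hypothetical inventory format.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def overlapping_policies(assignments: Iterable[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Map each resource covered by more than one policy to those policies."""
    by_resource: Dict[str, Set[str]] = defaultdict(set)
    for policy, resource in assignments:
        by_resource[resource].add(policy)
    return {res: sorted(pols) for res, pols in by_resource.items() if len(pols) > 1}


assignments = [
    ("daily-prod", "vol-1"),
    ("hourly-legacy", "vol-1"),
    ("compliance-7y", "vol-1"),
    ("daily-prod", "vol-2"),
]
print(overlapping_policies(assignments))
# {'vol-1': ['compliance-7y', 'daily-prod', 'hourly-legacy']}
```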

What Are the Hidden Costs of Redundant Backups?

Extra backups do more than inflate storage — they slow operations, muddy compliance, and bog down recovery.

- Skyrocketing Storage Bills: Over-retention can boost costs by 50% or more, often hidden inside aggregated bills that make it impossible to pinpoint waste.

- More Complexity for DevOps: Every extra backup is another policy to maintain. The more you add, the harder it gets to untangle.

- Compliance Confusion: Retaining logs and snapshots for seven years when the actual mandate is only 18 months inflates cloud backup compliance costs and doesn’t guarantee audit readiness.

- Slower Recoveries: Which of the 15 daily backups is the “good” one? More copies aren’t better…they’re just…more. And when the clock’s ticking, that means delays.
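Over-retention against a known mandate can be checked mechanically once each data class has a documented requirement. In this sketch the data classes and month values mirror the seven-years-versus-18-months example above and are illustrative, not regulatory guidance.

```python
from typing import Dict


def over_retained(kept_months: Dict[str, int], required_months: Dict[str, int]) -> Dict[str, int]:
    """Return months of retention beyond the mandate, per data class."""
    excess = {}
    for data_class, kept in kept_months.items():
        required = required_months.get(data_class)
        # Skip classes with no documented mandate rather than guessing one
        if required is not None and kept > required:
            excess[data_class] = kept - required
    return excess


kept = {"audit-logs": 84, "prod-snapshots": 12, "staging-snapshots": 6}
required = {"audit-logs": 18, "prod-snapshots": 12}
print(over_retained(kept, required))  # {'audit-logs': 66}
```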

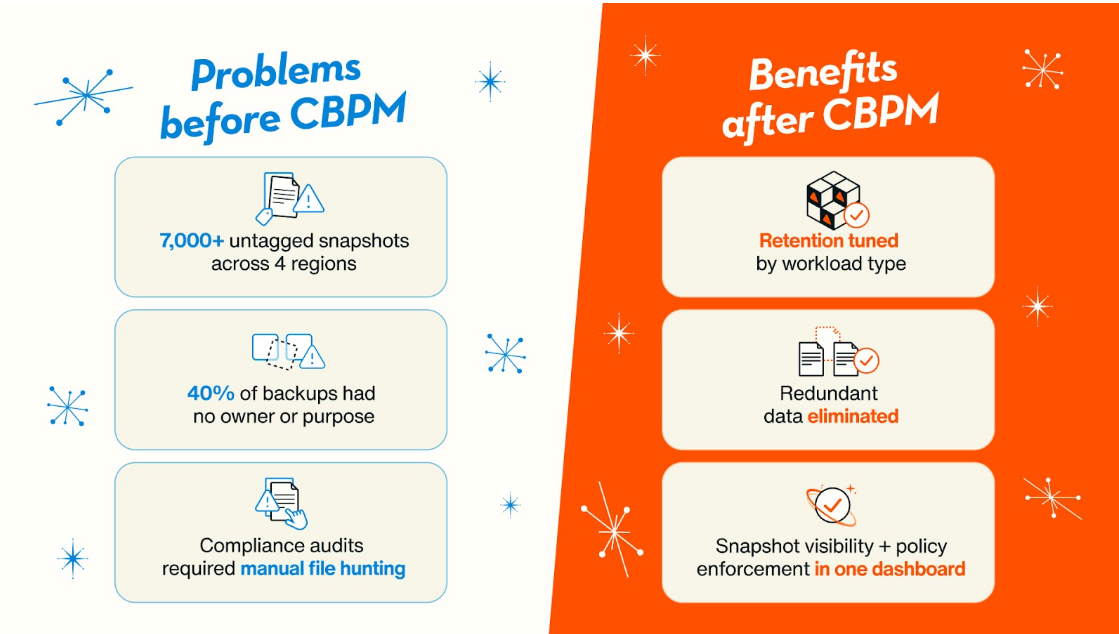

How Does Cloud Backup Posture Management (CBPM) Help?

CBPM replaces guesswork with automation. It continuously monitors your environment, applies the right retention, and stops unnecessary duplicates before they ever hit your bill.

Key CBPM benefits:

- Keep the right copies and delete the rest.

- Automate tagging and policy enforcement with no manual upkeep.

- See exactly what’s backed up, where, and why.

- Attribute costs per backup so FinOps teams can make fact-based retention and duplication decisions.

- Give you the visibility and alerts traditional tools lack, including spotting redundant policies before they cost you money.

For a step-by-step look at how to do Cloud Backup Posture Management, see our full guide.

Example:

A dev environment spins up in us-west-2 without tags. Traditional tools either miss it or apply the wrong retention. CBPM spots it instantly, tags it by workload type, applies a short retention policy, and prevents the surprise bill.
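The example above can be sketched as a classify-then-apply step. The name-token heuristic, the `env` tag key, and the retention values are hypothetical choices for illustration, not how Eon classifies workloads. Anything the heuristic cannot place is flagged for review instead of getting a default policy.

```python
import re
from typing import Dict, Mapping

# Hypothetical retention targets per workload type, in days
RETENTION_DAYS = {"prod": 35, "staging": 7, "dev": 3}


def classify_workload(name: str, tags: Mapping[str, str]) -> str:
    """Prefer an explicit env tag; otherwise look for an env token in the name."""
    if "env" in tags:
        return tags["env"]
    tokens = re.split(r"[-_.\s]+", name.lower())
    for env in ("prod", "staging", "dev"):
        if env in tokens:
            return env
    return "unknown"


def apply_posture(resource: Mapping) -> Dict:
    """Tag the resource and pick a retention period, or flag it for review."""
    tags = dict(resource.get("tags", {}))
    env = classify_workload(resource["name"], tags)
    if env != "unknown":
        tags.setdefault("env", env)
    retention = RETENTION_DAYS.get(env)
    return {"tags": tags, "retention_days": retention, "needs_review": retention is None}


untagged = {"name": "dev-api-us-west-2", "region": "us-west-2", "tags": {}}
print(apply_posture(untagged))
# {'tags': {'env': 'dev'}, 'retention_days': 3, 'needs_review': False}
```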

The Bottom Line: Stop Over-Backing Up and Start Optimizing

Backup should protect your business, not drain it. CBPM keeps what’s important, cuts what’s not, and makes recovery faster.

Example in action:

StructuredWeb, a channel marketing SaaS serving IBM, Zoom, and others, used Eon’s CBPM to cut through backup clutter and lower costs:

“Eon has completely revolutionized our cloud backup strategy, providing the efficiency and scalability needed to support our growing list of enterprise customers. By eliminating complexity and reducing costs, Eon enables us to allocate more resources to innovation and business growth.”

— Daniel Nissan, CEO, StructuredWeb

🚀 Take Control of Your Cloud Data Retention

Over-backing up your data doesn’t protect your business—it drains it. The fastest way to fix it?

Learn how to right-size retention policies, eliminate waste, and stay audit-ready in our Cloud Data Retention session.

Save your spot for the Cloud Data Retention webinar and start cutting costs without risking compliance.
