
What Cloud Backup Should Look Like When Built for Multi-Cloud Resilience

Cloud storage is simple until it’s time to back it all up across AWS, Azure, and Google Cloud. Yet single-cloud strategies leave gaps, create compliance risks, and lock you in.

This guide shows you how to design, implement, and manage multi-cloud backups that actually work, without drowning in manual effort or runaway costs.

Before you start designing, it helps to understand what multi-cloud backup means and why it matters for enterprise teams. Learn the fundamentals in our multi-cloud backup basics guide or read on.

What Is a Multi-Cloud Backup Strategy?

A multi‑cloud backup strategy stores copies of your data across multiple cloud providers while enforcing centralized policies for security, retention, and compliance.

Why it matters:

- If one provider goes down, your data remains safe elsewhere.

- Orchestration is required to avoid missed backups, untagged resources, and unnecessary egress costs.

- Native tools, like AWS Backup, Azure Backup, and Google Cloud’s Storage Transfer Service, don’t coordinate cross-cloud backups on their own.

- Eon acts as the multi-cloud control plane, automating retention, policies, and compliance alignment.

Benefits of multi-cloud backup include:

- Redundancy and availability: Multiple locations reduce the risk of total data loss.

- Compliance and sovereignty: Choose storage regions that align with regulations like HIPAA, PCI-DSS, and GDPR. Explore how to stay audit-ready and reduce compliance costs.

- Vendor lock-in mitigation: Keep flexibility instead of being tied to a single ecosystem.

- Cost optimization: Mix storage tiers and providers for better cost control.

- Best-of-breed access: Let each team use the provider that fits their workloads without creating backup chaos.

Knowing the “why” is only half the story. Next comes the “how.” Here’s how to structure a real-world multi-cloud backup architecture.

How Should You Design a Multi-Cloud Backup Architecture?

Design a multi‑cloud architecture by defining each provider’s role, setting retention and recovery goals, and mapping how backups flow across clouds.

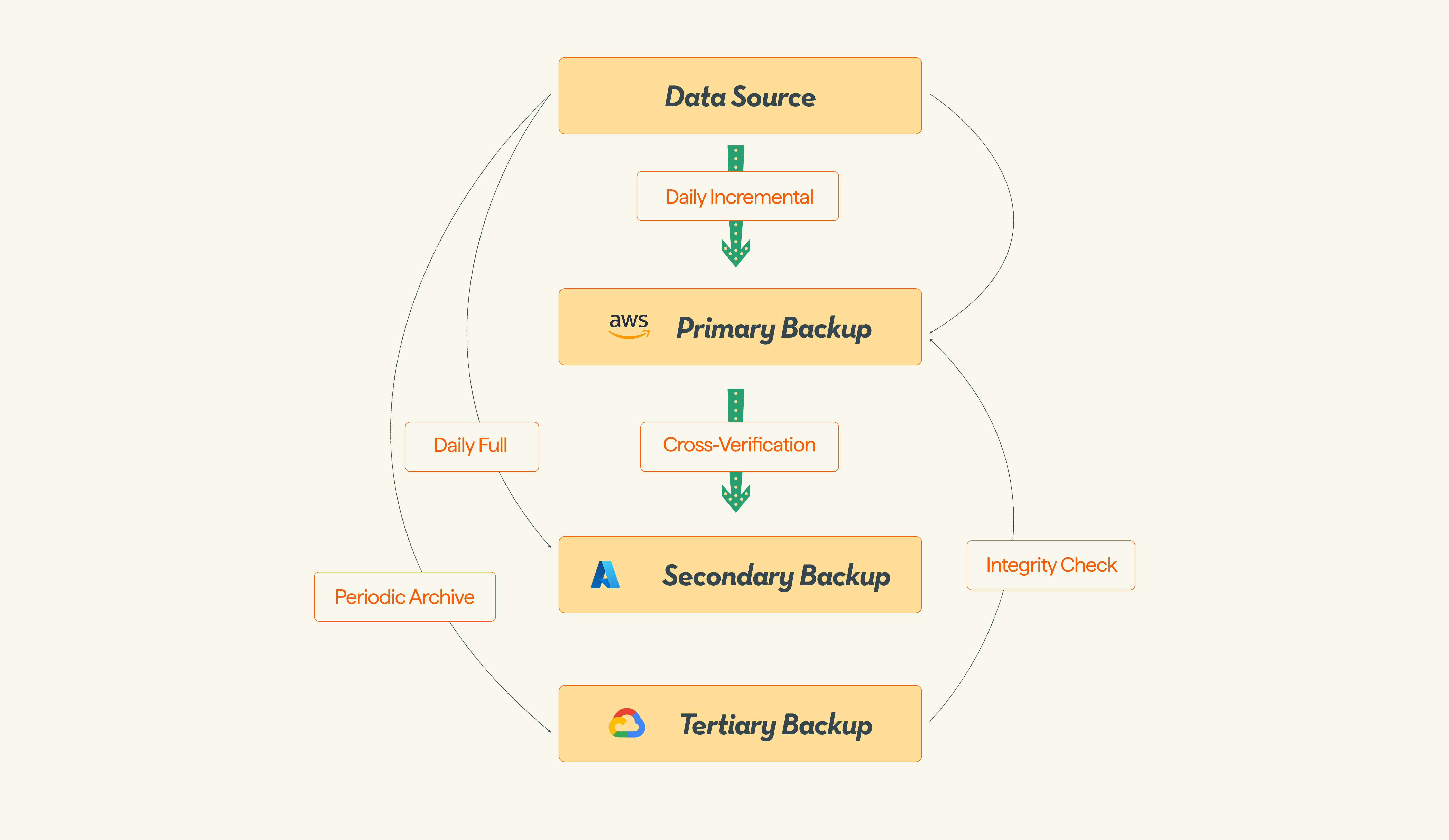

Imagine you're implementing a tri-cloud model: AWS as your primary, Azure as your secondary, and GCP as your tertiary backup target. This setup isn't arbitrary. It's designed to play to each provider's strengths, optimize costs, and diversify risk.

Let’s take a look at the roles and benefits of each cloud provider. The table below lets you see the bigger picture at a glance:

Note: While Azure may appear to run full backups on schedule, it uses incremental mechanisms behind the scenes for storage efficiency. This ensures that only changed data is transferred after the first full backup.

With your architecture mapped out, the next step is making sure it actually works in practice without gaps, missed workloads, or unexpected costs.

Related: See how Innago cut 40% of backup costs on AWS with Eon

How Do You Ensure Multi-Cloud Backups Work?

You can ensure multi‑cloud backups work by mapping data flows, automating policies, and using centralized orchestration to prevent gaps or drift.

1) Map Data Flow

Track how data moves between origin and backup to uncover bottlenecks and meet recovery objectives.

2) Set Backup Cadence and Retention

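Set backup frequency from your RPO (recovery point objective), the maximum amount of data loss you can tolerate: a 4-hour RPO means backing up at least every 4 hours. Set retention from business and compliance requirements, and confirm that restores can finish within your RTO (recovery time objective).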
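To make the RPO and RTO checks concrete, here is a minimal Python sketch that tests one workload's backup interval against its RPO and its last measured restore time against its RTO. The `BackupTarget` fields, the workload name, and the numbers are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass
class BackupTarget:
    """Hypothetical description of one workload's backup settings."""
    name: str
    backup_interval: timedelta   # time between consecutive backups
    rpo: timedelta               # maximum tolerable data loss
    rto: timedelta               # maximum tolerable downtime
    measured_restore: timedelta  # duration observed in the last test restore


def check_target(t: BackupTarget) -> list[str]:
    """Return human-readable problems; an empty list means the target passes."""
    problems = []
    # A failure just before the next scheduled backup loses roughly one full
    # interval of data, so the interval must not exceed the RPO.
    if t.backup_interval > t.rpo:
        problems.append(f"{t.name}: backup interval {t.backup_interval} exceeds RPO {t.rpo}")
    # A restore that takes longer than the RTO misses the recovery objective.
    if t.measured_restore > t.rto:
        problems.append(f"{t.name}: restore took {t.measured_restore}, RTO is {t.rto}")
    return problems


orders_db = BackupTarget(
    name="orders-db",
    backup_interval=timedelta(hours=24),
    rpo=timedelta(hours=4),
    rto=timedelta(hours=2),
    measured_restore=timedelta(minutes=45),
)
for problem in check_target(orders_db):
    print(problem)
```

Here the daily schedule fails the 4-hour RPO while the restore time fits the RTO, so the fix is a more frequent backup cadence, not longer retention.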

3) Verify Infrastructure Readiness

- Classify data: Identify critical vs. non-critical resources.

- Secure access & encryption: Map IAM roles and KMS keys across clouds to avoid unprotected workloads.

- Plan network & cost: Account for bandwidth, egress, and lifecycle tiering to control costs.

4) Implement and Orchestrate

Once you've mapped your data flow and set up your architecture, implementation comes down to straightforward, repeatable steps:

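- AWS (primary backup): Use AWS Backup to schedule daily incremental snapshots. Automate lifecycle rules to move older backups to cheaper storage: AWS Backup can transition recovery points to cold storage, and S3 Lifecycle rules can move backup objects from S3 Standard to S3 Standard-IA to S3 Glacier.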

- Azure (secondary backup): Configure Azure Backup for VMs and databases. Set up policies that balance frequent incremental backups with long-term retention.

- GCP (tertiary backup): Use the Storage Transfer Service for periodic archive backups. Choose storage classes (Nearline, Coldline, or Archive) that optimize for long-term, low-cost retention.

- Cross-cloud orchestration: Use a centralized platform like Eon to manage job scheduling, status monitoring, and recovery seamlessly across clouds without juggling separate third-party tools.
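As an illustration of the AWS step, here is a minimal sketch that builds a daily AWS Backup plan with a lifecycle rule and, optionally, submits it with boto3's `create_backup_plan`. The plan name, vault name, schedule, and day counts are hypothetical, and the cold-storage transition only applies to resource types that AWS Backup can tier to cold storage.

```python
"""Sketch: a daily AWS Backup plan with lifecycle tiering (names are hypothetical)."""

# AWS Backup keeps recovery points in cold storage for at least 90 days.
COLD_STORAGE_MINIMUM_DAYS = 90


def build_backup_plan(plan_name: str, vault_name: str,
                      cold_after_days: int, delete_after_days: int) -> dict:
    """Return a BackupPlan document in the shape boto3's create_backup_plan expects."""
    # Retention must exceed the cold-storage transition by at least 90 days.
    if delete_after_days < cold_after_days + COLD_STORAGE_MINIMUM_DAYS:
        raise ValueError("delete_after_days must be >= cold_after_days + 90")
    return {
        "BackupPlanName": plan_name,
        "Rules": [
            {
                "RuleName": "daily",
                "TargetBackupVaultName": vault_name,
                # AWS cron fields: minutes hours day-of-month month day-of-week year (UTC)
                "ScheduleExpression": "cron(0 5 ? * * *)",
                "StartWindowMinutes": 60,
                "Lifecycle": {
                    "MoveToColdStorageAfterDays": cold_after_days,
                    "DeleteAfterDays": delete_after_days,
                },
            }
        ],
    }


def submit(plan: dict) -> str:
    """Create the plan in the current AWS account and return its ID (needs credentials)."""
    import boto3  # imported lazily so the builder works without boto3 installed

    response = boto3.client("backup").create_backup_plan(BackupPlan=plan)
    return response["BackupPlanId"]


plan = build_backup_plan("primary-daily", "primary-vault",
                         cold_after_days=30, delete_after_days=365)
```

Calling `submit(plan)` needs AWS credentials and an existing vault, and resources are then attached to the plan with `create_backup_selection`. Azure Backup and Google Cloud each need their own equivalent configuration through their own APIs.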

Without a centralized solution, IT teams must manage backup jobs, IAM keys, encryption, and retention separately in each console.

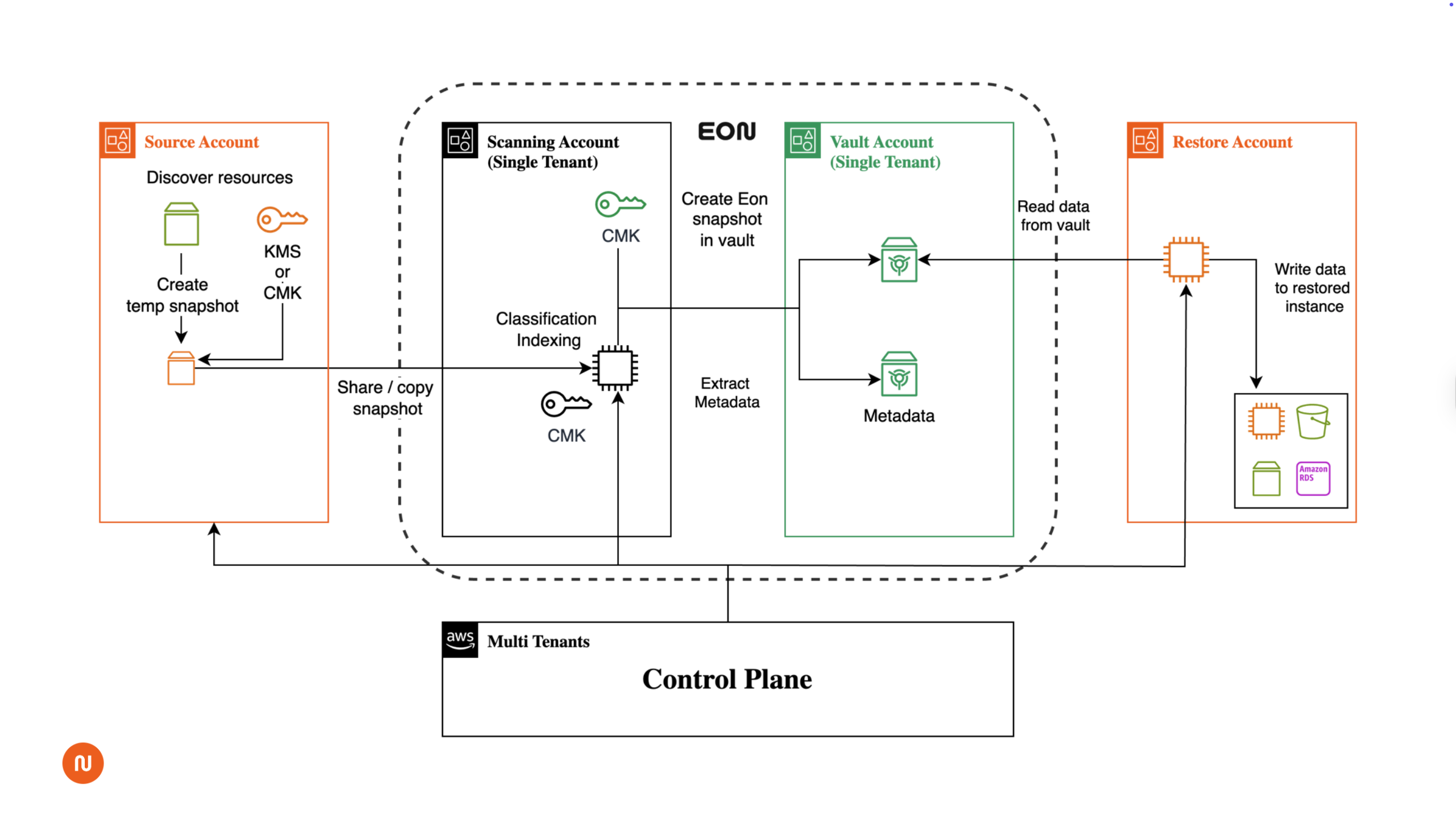

Eon automates this end-to-end:

- Policy drift detection: Identify unprotected workloads or expired retention rules.

- Cross-cloud policy enforcement: Keep RPO/RTO aligned across AWS, Azure, and Google Cloud.

- Chargeback and lifecycle analytics: Track costs by cloud, project, or department.

Learn how Cloud Backup Posture Management (CBPM) keeps backups aligned and compliant across all clouds.

Designing and implementing backups is only half the battle. The real test of your strategy is whether you can recover when it matters.

How Do You Test and Validate Recovery?

Validate recovery by running regular simulations and using automated test restores to confirm backups meet your RTO and RPO.

- Test restores regularly: Simulate failures yourself, or use Eon’s automated test restores to validate recovery quickly without manual intervention and generate audit-ready logs.

- Prioritize recovery tiers: Restore critical workloads first, then supporting systems.

- Maintain runbooks: Update them after every test so they reflect how recovery actually works.

- Keep approval where it matters: Production cutover may still need human sign-off, but automation keeps the process fast and consistent.
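A minimal sketch of tiered restore validation: it orders hypothetical test-restore results by recovery tier, then flags any workload whose restore exceeded its RTO or whose recovered data was older than its RPO allows. The `RestoreTest` data model is an assumption for illustration; in practice these durations would come from your test-restore logs.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass
class RestoreTest:
    """Hypothetical result of one automated test restore."""
    workload: str
    tier: int                # 1 = most critical, restored first
    restore_time: timedelta  # how long the restore took
    data_age: timedelta      # how far behind the failure point the recovered data was
    rto: timedelta
    rpo: timedelta


def validate(tests: list[RestoreTest]) -> list[str]:
    """Report failures in recovery order so tier-1 problems surface first."""
    report = []
    for r in sorted(tests, key=lambda x: (x.tier, x.workload)):
        if r.restore_time > r.rto:
            report.append(f"tier {r.tier} {r.workload}: RTO missed")
        if r.data_age > r.rpo:
            report.append(f"tier {r.tier} {r.workload}: RPO missed")
    return report


results = [
    RestoreTest("reporting", 3, timedelta(hours=5), timedelta(hours=20),
                rto=timedelta(hours=8), rpo=timedelta(hours=24)),
    RestoreTest("payments", 1, timedelta(minutes=90), timedelta(minutes=10),
                rto=timedelta(hours=1), rpo=timedelta(minutes=15)),
]
print(validate(results))
```

In this example only the tier-1 payments restore fails, because 90 minutes exceeds its one-hour RTO; that is the finding to fix before the next test cycle.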

Regular testing and tiered recovery workflows ensure you meet RTO and RPO when it counts.

Related: Watch how instant recovery works in our live demo.

Even the best backup plan can fail if it isn’t actively managed. Staying compliant and controlling costs requires continuous visibility and smart automation.

How Can You Keep Multi-Cloud Backups Cost-Effective and Compliant?

Manage cost and compliance by monitoring backups continuously, enforcing retention policies, and flagging backup sprawl before it drives up spend.

- Continuous monitoring: Native tools like Amazon CloudWatch, Azure Monitor, and Google Cloud Monitoring help, but Eon unifies alerts and job statuses in one dashboard, so you don’t have to jump between consoles.

- Alerting: Identify failed jobs or anomalies before they become incidents.

- Cost visibility: Eon’s built-in chargeback and lifecycle reporting tracks storage, egress, and retention spend across all clouds automatically.

- Backup sprawl management: Eon flags redundant or expired backups that inflate costs.

- Analytics-ready backup lake: Store backups in Parquet or Delta Lake format to run compliance checks and cost analysis without restoring the data.
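Flagging sprawl usually comes down to two checks: backups kept past their retention window, and duplicate copies of identical content within the same cloud (cross-cloud copies are intentional redundancy, so they are not flagged). The sketch below applies both checks to a hypothetical inventory; the record fields and the content-hash approach are assumptions for illustration, not a description of how Eon detects sprawl.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Backup:
    """Hypothetical inventory record for one stored backup."""
    backup_id: str
    cloud: str
    created: datetime
    retention: timedelta
    content_hash: str  # digest of the backed-up content


def flag_sprawl(backups: list[Backup], now: datetime) -> dict[str, str]:
    """Map backup_id -> reason for every backup that looks like waste."""
    flagged = {}
    kept: dict[tuple[str, str], str] = {}  # (cloud, content_hash) -> first kept backup_id
    # Oldest first, so the earliest live copy of identical content is the one kept.
    for b in sorted(backups, key=lambda x: x.created):
        key = (b.cloud, b.content_hash)
        if now - b.created > b.retention:
            flagged[b.backup_id] = "expired"
        elif key in kept:
            flagged[b.backup_id] = f"duplicate of {kept[key]}"
        else:
            kept[key] = b.backup_id
    return flagged


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
inventory = [
    Backup("aws-1", "aws", now - timedelta(days=400), timedelta(days=365), "a"),
    Backup("aws-2", "aws", now - timedelta(days=20), timedelta(days=90), "b"),
    Backup("aws-3", "aws", now - timedelta(days=10), timedelta(days=90), "b"),
    Backup("gcp-1", "gcp", now - timedelta(days=10), timedelta(days=90), "b"),
]
print(flag_sprawl(inventory, now))
```

The GCP copy of content "b" survives because it lives in a different cloud, while the second AWS copy is flagged as redundant.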

Automated monitoring, drift detection, and lifecycle analytics prevent costly sprawl and compliance gaps.

As your environment scales, juggling policies and dashboards across multiple clouds becomes impractical. That’s where centralized backup management comes in.

How Can You Centralize Backup Management Across Clouds?

Centralized management lets you monitor, enforce, and recover backups across AWS, Azure, and Google Cloud from a single platform like Eon.

Eon provides a unified view of your backup environment across AWS, Azure, and GCP, simplifying management and reducing risk through:

- A unified dashboard: Monitor and manage backups across providers in one interface—no more jumping between cloud consoles.

- Automated policy enforcement: Ensure consistent backup frequency, retention rules, and encryption settings.

- Easy recovery: Use one-click, granular restore options to recover individual files or full systems across clouds.

- Compliance and audit trails: Maintain tamper-evident logs and detailed reporting to support both industry regulations and internal governance.

By combining these practices—architecture planning, automated orchestration, testing, and central management—you create a multi-cloud backup strategy that’s both resilient and easy to manage.

Conclusion and Next Step

A robust multi-cloud backup solution protects you from data loss, downtime, and compliance risk without drowning your team in manual effort.

Remember:

- Plan your architecture around RTO, RPO, and cost visibility.

- Automate policy enforcement and drift detection to avoid gaps.

- Use Eon to centralize management and turn your backups into an audit-ready, analytics-friendly asset.

Ready to simplify your multi-cloud backups?

Schedule a demo with Eon to see how to cut recovery times, eliminate backup sprawl, and stay audit-ready.

Eon Now Offers Fast, Flexible Amazon DynamoDB Backups

Backing up Amazon DynamoDB can be a pain. Eon’s new Amazon DynamoDB support brings cost-efficient and intelligent protection to one of AWS’s most widely used NoSQL services.

Whether you're dealing with terabytes of real-time order data or high-velocity microservice logs, Eon now gives you control, speed, and visibility across your backups, making recovery faster.

Why is backing up Amazon DynamoDB so difficult?

Backing up Amazon DynamoDB can be surprisingly hard, especially at scale. Native options like AWS Backup and Point-in-Time Recovery (PITR) come with serious limitations:

- You can’t just recover one record—you have to restore the whole table.

- PITR only lets you restore to points within the last 35 days, which won’t cut it for audits or compliance.

- PITR backups stay in the source table’s region: you can restore into another region, but you don’t get an independent backup copy there.

- Every scheduled backup is a full copy, whether anything changed or not. That’s hours of restore time and terabytes of duplicated data.

- You can’t query backup data without restoring it first. Even then, DynamoDB tables can only be queried efficiently on their partition and sort keys, or on Global Secondary Indexes (GSIs) defined in advance.

It’s no wonder many teams end up skipping backups or settling for brittle manual workflows.

What You Can Do with Eon for Amazon DynamoDB

Eon is built to solve the real challenges of backing up Amazon DynamoDB without the usual complexity.

1. True Incremental Backups

Eon only backs up what’s changed, so you’re not storing the same data over and over again. That means:

- No over-backing up: Only store what you need based on business and compliance requirements.

- Lower backup storage and recovery costs: Eon’s efficient, low-cost storage tier helps you cut costs enough that backup becomes a self-funding project.

- Achieve compliance fast: Eon’s autonomous backup platform automatically applies the right retention policy.

Whether you're backing up once a day or once an hour, Eon keeps Amazon DynamoDB backup storage costs predictable and efficient.
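The idea behind incremental backup fits in a few lines: compare the table's current contents with digests recorded at the last backup, keyed by primary key, and store only new or changed items plus deletions. This is a generic sketch using item hashes with hypothetical items; it is not Eon's implementation.

```python
import hashlib
import json


def item_digest(item: dict) -> str:
    """Stable hash of an item, independent of attribute order (string values assumed)."""
    return hashlib.sha256(json.dumps(item, sort_keys=True).encode()).hexdigest()


def incremental_delta(previous: dict[str, str], current: dict[str, dict]) -> dict:
    """
    previous: primary key -> digest recorded at the last backup
    current:  primary key -> full item as it exists now
    Returns only what changed, plus the digests to record for the next run.
    """
    # New or modified items: no previous digest, or a different one.
    puts = {k: item for k, item in current.items()
            if previous.get(k) != item_digest(item)}
    # Items present at the last backup but gone now.
    deletes = sorted(k for k in previous if k not in current)
    digests = {k: item_digest(item) for k, item in current.items()}
    return {"puts": puts, "deletes": deletes, "digests": digests}


last_backup = {
    "order#1": item_digest({"id": "order#1", "status": "completed"}),
    "order#2": item_digest({"id": "order#2", "status": "pending"}),
    "order#4": item_digest({"id": "order#4", "status": "completed"}),
}
table_now = {
    "order#2": {"id": "order#2", "status": "shipped"},    # modified
    "order#3": {"id": "order#3", "status": "pending"},    # new
    "order#4": {"id": "order#4", "status": "completed"},  # unchanged, skipped
}
delta = incremental_delta(last_backup, table_now)
```

A production DynamoDB implementation would more likely read changes from DynamoDB Streams than rescan the table, and would need to serialize types such as `Decimal` before hashing.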

2. Record-Level Restore

No more restoring 10TB of data to fix one mistake. Eon lets you rewind to any point in time and recover exactly the record you need.

You can even send that record to a different table, region, or AWS account without touching the rest. That makes Amazon DynamoDB recovery faster, cheaper, and more targeted. From disaster recovery to compliance fixes, or those “oops” moments when you just need a single item back, Eon lets you instantly access your backups.
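Record-level restore reduces to picking the right version of a single item. The sketch below assumes a hypothetical version history for one key (timestamped item images, with `None` marking a delete), selects the latest version at or before a target time, and can write it to another table or region with boto3's `put_item`. The history format, key, and table names are illustrative assumptions.

```python
from datetime import datetime, timezone
from typing import Optional

# Hypothetical history for one key: (timestamp, item image, or None if deleted).
History = list[tuple[datetime, Optional[dict]]]


def version_at(history: History, target: datetime) -> Optional[dict]:
    """Return the item as it existed at `target`, or None if it did not exist."""
    state = None
    for ts, image in sorted(history, key=lambda entry: entry[0]):
        if ts > target:
            break
        state = image  # a None image means the item was deleted at ts
    return state


def restore_item(item: dict, table_name: str, region: str) -> None:
    """Write one recovered item into another table/region (needs AWS credentials)."""
    import boto3  # lazy import: only needed for the actual write

    table = boto3.resource("dynamodb", region_name=region).Table(table_name)
    table.put_item(Item=item)


history = [
    (datetime(2025, 3, 1, 9, 0, tzinfo=timezone.utc), {"pk": "cust#42", "tier": "gold"}),
    (datetime(2025, 3, 2, 14, 0, tzinfo=timezone.utc), {"pk": "cust#42", "tier": "silver"}),
    (datetime(2025, 3, 2, 14, 5, tzinfo=timezone.utc), None),  # accidental delete
]
before_mistake = version_at(history, datetime(2025, 3, 2, 14, 1, tzinfo=timezone.utc))
```

Rewinding to 14:01 recovers the "silver" version that existed just before the accidental delete, and only that one item needs to be written back.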

3. Query Backup Snapshots Like a Database

Amazon DynamoDB doesn’t support rich querying unless you’ve built the perfect schema and GSIs up front. But most developers can’t predict every future use case.

Eon turns your Amazon DynamoDB backup snapshots into queryable datasets—automatically. Run SQL-like queries on your backup data to:

- Analyze trends (e.g., find all orders with status != completed).

- Investigate anomalies or deletions.

- Extract custom datasets for reporting or compliance.

Note: This query capability is different from restoring specific records. Querying lets you explore or analyze your data—even if it wasn’t originally built for that kind of access—without relying on GSIs.
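To illustrate querying snapshot data on a non-key attribute, the sketch below loads a few hypothetical exported items into an in-memory SQLite table with Python's standard `sqlite3` module and runs the status != completed query mentioned above. It demonstrates the idea only; Eon's own query interface is not shown here.

```python
import sqlite3

# Hypothetical items exported from a backup snapshot (attribute names are illustrative).
snapshot_items = [
    {"order_id": "o-1", "customer": "c-7", "status": "completed", "total": 120.0},
    {"order_id": "o-2", "customer": "c-9", "status": "pending", "total": 35.5},
    {"order_id": "o-3", "customer": "c-7", "status": "cancelled", "total": 80.0},
]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id TEXT, customer TEXT, status TEXT, total REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (:order_id, :customer, :status, :total)", snapshot_items
)

# Filtering on a non-key attribute: in DynamoDB this needs a GSI or a full Scan.
open_orders = conn.execute(
    "SELECT order_id, status FROM orders WHERE status != 'completed' ORDER BY order_id"
).fetchall()
print(open_orders)
```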

4. API-Powered Recovery Workflows

Need to recover recent records to a table in another region? With Eon’s API, you can automate the recovery and pull just what you need to get back to business fast.

Here’s how customers are using it:

- Start by authenticating securely with Eon’s API.

- Run a query to pull only the records they need, such as last month’s data.

- Automatically insert those records into a new table in another region or account.

- Bring production back online in minutes—not days.

These workflows make Eon ideal for Amazon DynamoDB disaster recovery scenarios where speed, scale, and precision matter.
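The selection and insert steps of that workflow can be sketched as follows. Authentication and the query itself go through Eon's API, which is not reproduced here, so the sketch simply assumes the query returned a list of items carrying an ISO-8601 `created_at` attribute (a hypothetical field). It keeps last month's records and writes them to an existing table in another region using boto3's `batch_writer`.

```python
from datetime import date, datetime


def last_month_bounds(today: date) -> tuple[date, date]:
    """Return [start, end) covering the calendar month before `today`."""
    first_this_month = today.replace(day=1)
    if first_this_month.month == 1:
        start = first_this_month.replace(year=first_this_month.year - 1, month=12)
    else:
        start = first_this_month.replace(month=first_this_month.month - 1)
    return start, first_this_month


def select_last_month(items: list[dict], today: date) -> list[dict]:
    """Keep items whose hypothetical `created_at` falls in the previous month."""
    start, end = last_month_bounds(today)
    return [i for i in items
            if start <= datetime.fromisoformat(i["created_at"]).date() < end]


def load_into_table(items: list[dict], table_name: str, region: str) -> None:
    """Bulk-insert items into an existing table in another region (needs credentials)."""
    import boto3  # lazy import keeps the selection logic testable offline

    table = boto3.resource("dynamodb", region_name=region).Table(table_name)
    with table.batch_writer() as batch:  # buffers writes and resends unprocessed items
        for item in items:
            batch.put_item(Item=item)


recovered = [
    {"pk": "o-1", "created_at": "2025-02-14T10:00:00"},
    {"pk": "o-2", "created_at": "2025-03-03T08:30:00"},
    {"pk": "o-3", "created_at": "2025-01-31T23:59:00"},
]
to_restore = select_last_month(recovered, today=date(2025, 3, 10))
```

If the target is a brand-new table, it has to be created (for example with `create_table`) before `load_into_table` runs.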

All of this sits on a compliance-grade foundation with immutability, long-term retention, RBAC, audit logs, and cross-region recovery. The basics are covered, so you can focus on the hard parts: precision restore, queryable backups, and automation.

How to Back Up Amazon DynamoDB (Without Losing Your Mind)

No more duct-taped scripts or crossed fingers. Here’s how we help you skip the clutter and stay in control:

- Only back up what’s changed—no full snapshots every time.

- Restore just the records you need in seconds.

- Search backups like a database without GSIs or extra setup.

- Keep costs low with efficient storage and fewer restore steps.

- Set backup rules once and apply them across accounts, tables, and regions.

- Keep backups for as long as you need across regions, with no 35-day cap.

Whether you're preparing for an audit or bouncing back from a failure, Eon helps you stay ready, recover fast, and move on.

Back Up Amazon DynamoDB with Eon

Already using Eon? You’re covered. Just define your Amazon DynamoDB backup policy, and we’ll handle the rest—incremental snapshots, recovery options, and even schema indexing for analysis.

Here’s how Eon makes backup simple from day one:

- Discover all Amazon DynamoDB tables across your environments.

- Apply smart backup policies automatically.

- Restore what you want, when you need it—without vendor lock-in.

Tip: For data that needs a near-zero RPO, some teams pair AWS PITR (which can restore to any second within its window, kept short, like 7 days) with Eon for long-term Amazon DynamoDB backups, cost optimization, and flexibility.

Amazon DynamoDB Backups That Work Like Your Team Does

It’s how backup should work: fast, flexible, and built for how real teams operate. And the foundations are non-negotiable: immutability, long-term retention, RBAC, audit-ready logs, and cross-region/account recovery—built in.

Want to see it in action? Let’s talk. We’ll show you how Eon makes backing up Amazon DynamoDB simple, fast, and finally frustration-free.

Frequently Asked Questions (FAQ)

What is the best way to back up Amazon DynamoDB?

The best way to back up Amazon DynamoDB is to use a solution like Eon that supports incremental backups, lets you recover specific records, and gives you full query access. This reduces cost, speeds up recovery, and enables richer data use cases beyond what AWS PITR and scheduled backups offer.

How long can I retain Amazon DynamoDB backups?

Amazon DynamoDB Point-in-Time Recovery only lets you restore to points within the last 35 days. With Eon, you can retain Amazon DynamoDB backups for as long as your compliance, analytics, or operational needs require.

Does Eon also cover compliance and resilience requirements for Amazon DynamoDB?

Absolutely. Eon includes immutability, unlimited retention, RBAC, audit-ready logging, and cross-region/account recovery as standard. Those are table stakes—we just go further with record-level restore, queryable backups, and API-driven workflows.

Multi-Cloud Backup Challenges (and How to Fix Them Without More Tools)

What Makes Multi-Cloud Backup So Hard?

The challenge isn’t whether backups exist; it’s whether you can manage and recover them with confidence. Cloud architects and SREs feel this most, because they’re the ones on the hook for recovery.

For example, one team running workloads across AWS and GCP thought they were covered until a compliance review revealed sensitive data with no encryption and a misconfigured 7-day retention policy. The backups were there, but without centralized visibility, the blind spot went unnoticed.

This pattern repeats across enterprises. Complexity hides flaws until recovery, audit, or security events expose them. To avoid surprises, you need posture: a single source of truth to classify, audit, and enforce backup policies across clouds.

1. Why does versioning fail across clouds?

Manual snapshot management across AWS, GCP, and Azure almost guarantees version sprawl and gaps.

2. Why are backup policies inconsistent?

Each provider has its own retention and backup rules—enforcing a standard schedule across all of them is nearly impossible without central control.

3. Why is recovery unreliable?

Scattered backups mean scattered recovery workflows. Without a unified dashboard, restores are slower, riskier, and harder to test—leaving IT managers and disaster recovery leads unable to validate restores when it really counts.

4. Where’s the single source of truth?

If you can’t search or audit backup data globally, compliance and governance grind to a halt.

5. How do security gaps creep in?

Ransomware increasingly targets backups themselves, not just production systems.

6. Why is compliance harder in multi-cloud?

Retention, residency, and encryption settings vary across providers, creating hidden governance risks.

7. Why do costs spiral?

Cross-cloud transfers, retention mismanagement, and versioning waste add up fast.

8. Why are logical air-gaps so complex?

Isolating backups from production with software-defined separation adds operational overhead and requires strict discipline.

Takeaway: These issues map directly to the three pain points we see most often at Eon:

- Discovery: Blind spots from manual tagging or siloed infra.

- Management: Misaligned retention, waste, and compliance risk.

- Restoration: Slow, expensive, or failed recoveries.

What Is Cloud Backup Posture Management (CBPM)?

Cloud Backup Posture Management (CBPM) is a new approach to backup management that ensures your backups aren’t just there—they’re protected, compliant, and recoverable.

CBPM delivers:

- Automated classification of sensitive data

- Policy enforcement for retention, encryption, and access

- Centralized visibility across clouds

Think of it as backup with context—turning storage into posture.

How Does CBPM Fix Multi-Cloud Backup Failures?

You don’t need to abandon your multi-cloud ambitions. You just need a backup posture that works across clouds without manual tagging, scripting, or guesswork.

CBPM enforces the right backup behaviors automatically, and at scale. Here’s how it solves the biggest posture breakdowns we see.

1. Automate Tagging

Many teams try to control multi-cloud backups using infrastructure-as-code (IaC) tools like Terraform or Ansible. Others stitch together cron jobs or CI/CD pipelines. These approaches seem efficient until they scale.

The problem? Every cloud provider has different APIs, metadata tags, and retention tools. That makes posture enforcement fragile, error-prone, and time-consuming to audit.

With Eon, automation is built in:

- No cloud-specific code or custom scripting required

- Pre-built policy templates for retention, tagging, and encryption

- Posture automatically enforced across AWS, GCP, and Azure

Takeaway: Skip the scripts. Eon gives you plug-and-play backup posture without config drift.

2. Auto-Classify Data at Scale

Manual tagging doesn’t scale. And when tagging fails, backups fail with it, leaving sensitive workloads unprotected or over-backed-up.

Eon replaces manual tagging with:

- Agentless, metadata-driven classification

- Auto-detection of sensitive or regulated workloads

- Policy mapping by workload type, compliance tag, or business unit

Whether it’s internal IP or customer data, Eon ensures it’s properly protected without scripting or tagging guesswork. This is how we free up your DevOps teams from babysitting backups.

Takeaway: Auto-classify what matters. Protect the right data, in the right way, every time.

3. Make Backups Ransomware-Ready with Built-In Isolation

Today’s ransomware campaigns increasingly target backups themselves—a threat CISOs and security engineers know all too well. Posture means nothing if attackers can encrypt or delete your recovery data.

Eon solves this with logical air gaps and native immutability without hardware vaults or complex network overlays.

Built-in defenses include:

- Immutable storage: Amazon S3 Object Lock, Google Cloud Storage Bucket Lock

- Access controls: Role-based permissions and time-bound keys

- Network-level isolation: Dedicated VLANs, firewall rules, and zero external access paths

Eon’s vaulting architecture separates backup data from production environments using software-defined isolation, not DR-style failover infrastructure.
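For the AWS side of immutable storage, here is a minimal sketch that builds a default-retention rule for Amazon S3 Object Lock and applies it with boto3's `put_object_lock_configuration`. The bucket name and 30-day period are hypothetical, and Object Lock requires S3 Versioning on the bucket. This shows the native building block only, not Eon's vaulting architecture.

```python
VALID_MODES = {"GOVERNANCE", "COMPLIANCE"}


def object_lock_config(mode: str, days: int) -> dict:
    """Build an ObjectLockConfiguration with a default retention period."""
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {sorted(VALID_MODES)}")
    if days < 1:
        raise ValueError("retention must be at least one day")
    return {
        "ObjectLockEnabled": "Enabled",
        # COMPLIANCE: no user, including root, can delete locked versions or shorten retention.
        # GOVERNANCE: users with s3:BypassGovernanceRetention can override the lock.
        "Rule": {"DefaultRetention": {"Mode": mode, "Days": days}},
    }


def apply_lock(bucket: str, config: dict) -> None:
    """Apply the default retention rule (needs credentials and a versioned bucket)."""
    import boto3  # lazy import so the builder runs without boto3

    boto3.client("s3").put_object_lock_configuration(
        Bucket=bucket, ObjectLockConfiguration=config
    )


config = object_lock_config("COMPLIANCE", days=30)
```

On Google Cloud, a bucket retention policy locked with Bucket Lock plays the same role.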

Takeaway: Don’t bolt on air gaps. Eon builds them in so backups stay recoverable even when everything else goes down.

Curious how modern ransomware attacks target backups directly? Check out our cloud ransomware guide for real-world examples and protection strategies.

Should You Still Follow the 3-2-1 Rule?

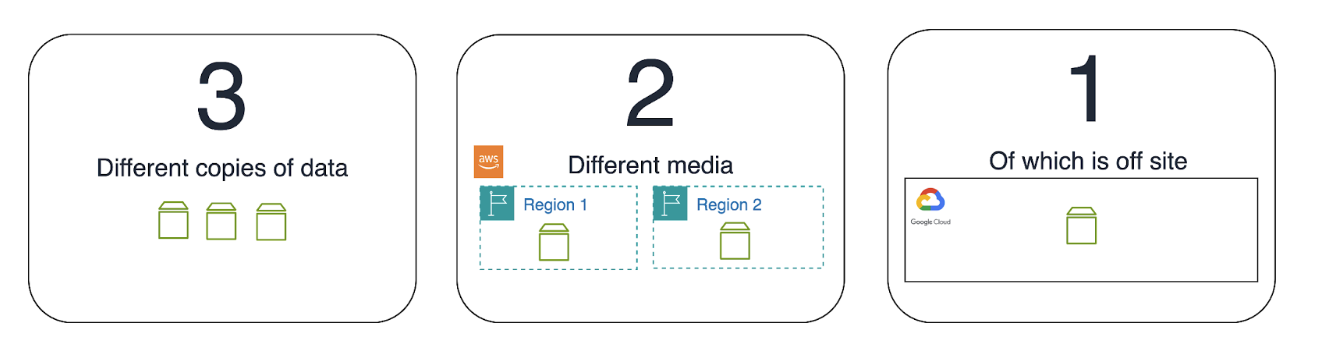

Coined by photographer Peter Krogh in “The DAM Book: Digital Asset Management for Photographers,” the 3-2-1 backup rule essentially means:

- Maintain a minimum of three identical copies of your data.

- Store a minimum of two in separate regions of your cloud service provider (CSP).

- Keep at least one copy off-site.

This simple yet powerful strategy helps protect backup data from ransomware, misconfigurations, and cloud-specific disruptions.

But as ransomware threats escalate and cloud environments dominate, modern teams are upgrading to 3‑2‑1‑1‑0, which adds:

- 1 immutable or air-gapped copy to protect backups from deletion or encryption

- 0 errors, meaning verified, testable restore assurance through validation and recovery testing

Organizations are moving beyond the classic rule to strengthen their cyber resilience and avoid surprise failure during recovery.

Treat 3‑2‑1 as your baseline. Adopt 3‑2‑1‑1‑0—immutable copies + validated restores—for modern cloud and ransomware-ready backup posture.
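The 3-2-1-1-0 rule translates naturally into an automated check. The sketch below evaluates a hypothetical set of copies, counting the production copy toward the three and using distinct regions for the "two" as described above; the `Copy` fields are assumptions you would map to your own inventory.

```python
from dataclasses import dataclass


@dataclass
class Copy:
    """One copy of a dataset (fields are illustrative)."""
    name: str
    region: str                     # e.g. "aws:us-east-1"
    offsite: bool                   # outside the primary provider or account
    immutable: bool = False         # object lock, bucket lock, or air-gapped vault
    is_backup: bool = True          # False for the production copy
    restore_verified: bool = False  # last test restore completed without errors


def check_32110(copies: list[Copy]) -> dict[str, bool]:
    """Return one pass/fail flag per digit of 3-2-1-1-0."""
    backups = [c for c in copies if c.is_backup]
    return {
        "3 copies": len(copies) >= 3,
        "2 regions": len({c.region for c in copies}) >= 2,
        "1 off-site": any(c.offsite for c in copies),
        "1 immutable": any(c.immutable for c in backups),
        # Zero errors: every backup copy has passed a test restore.
        "0 errors": bool(backups) and all(c.restore_verified for c in backups),
    }


copies = [
    Copy("prod", "aws:us-east-1", offsite=False, is_backup=False),
    Copy("aws-backup", "aws:us-west-2", offsite=False, restore_verified=True),
    Copy("gcp-archive", "gcp:europe-west1", offsite=True, immutable=True),
]
failed = [rule for rule, ok in check_32110(copies).items() if not ok]
print(failed)
```

Here every digit passes except the final "0", because the immutable GCP copy has never been restore-tested.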

How Do You Manage Access to Backup Data Across Clouds?

Implementing role-based access control (RBAC) and identity and access management (IAM) is no easy task. Managing access policies across clouds is challenging, as each provider has its own APIs and security frameworks—a daily headache for cloud security architects and IAM admins trying to enforce consistent backup access and governance.

With Eon, RBAC is:

- Mapped to data classification: So sensitive workloads automatically get tighter access controls.

- Cloud-agnostic: Works across AWS, GCP, and Azure without rewriting IAM policies.

- Built for governance: Centralized audit trails and permission controls.

Other CBPM Best Practices to Keep in Mind

Other best practices are not specific to multi-cloud, but they’re still important when implementing CBPM:

- Encrypt backup data at rest and in transit.

- Implement KMS key rotation.

- Follow the principle of least privilege (PoLP) to control access to backup data.

- Utilize CBPM tools’ monitoring and logging systems to identify and respond to threats quickly.

4. Control Backup Costs Without Losing Coverage

Backup costs can spiral quickly—especially across clouds. Version sprawl, cross-region transfers, and over-retention quietly inflate spend without improving protection—leaving FinOps teams and cloud cost managers scrambling to explain surprise bills.

Eon helps you reduce costs while tightening posture.

Versioning Waste

- Noncurrent versions of deleted objects still incur storage fees

- Old versions stick around past compliance windows

- Manual expiration policies often fail

✅ Eon manages retention centrally—no versioning required. For more on how to cut AWS S3 costs, see how lifecycle policies and cold storage can reduce your footprint.
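If you do keep S3 Versioning enabled, the native guard against versioning waste is a lifecycle rule that expires noncurrent versions and removes expired delete markers. A minimal sketch using boto3's `put_bucket_lifecycle_configuration`; the bucket name and 30-day window are hypothetical.

```python
def noncurrent_cleanup_rule(noncurrent_days: int, prefix: str = "") -> dict:
    """Lifecycle configuration that expires old object versions in a versioned bucket."""
    return {
        "Rules": [
            {
                "ID": f"expire-noncurrent-{noncurrent_days}d",
                "Filter": {"Prefix": prefix},  # empty prefix applies to the whole bucket
                "Status": "Enabled",
                # Permanently delete versions this many days after they become noncurrent.
                "NoncurrentVersionExpiration": {"NoncurrentDays": noncurrent_days},
                # Clean up delete markers that no longer have any versions behind them.
                "Expiration": {"ExpiredObjectDeleteMarker": True},
            }
        ]
    }


def apply_rule(bucket: str, config: dict) -> None:
    """Install the lifecycle configuration (needs AWS credentials)."""
    import boto3  # lazy import so the builder runs without boto3

    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=config
    )


config = noncurrent_cleanup_rule(30)
```

Note that `put_bucket_lifecycle_configuration` replaces the bucket's entire lifecycle configuration, so merge this rule with any existing rules before applying it.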

Smarter Storage Tiering

- Automatically moves cold data to archive storage

- Expires unnecessary copies based on policy

- Shrinks storage footprint without impacting restore readiness

Hidden Egress Costs

- Keeps backup/restore ops region-local

- Reduces outbound traffic and transfer charges

- Minimizes cross-cloud restores through centralized access

Takeaway: You don’t have to choose between cost control and coverage. Eon gives you both—on autopilot.

Done right, cloud backup isn’t just a safety net—it’s a strategic advantage. Explore how teams are turning backups into a business asset that fuels resilience, insight, and ROI.

How Does Eon Help You Unify and Simplify Multi-Cloud Backups?

If you’re juggling multiple backup tools, cloud-native policies, or compliance requirements across providers, Eon helps unify it all. With continuous, automatic classification, secure vaulting, and a single pane of glass across cloud environments, Eon makes it easy to standardize your backup posture without vendor lock-in or overhead.

Looking to simplify your multi-cloud backup strategy while improving visibility, security, and cost control?

Don’t let multi-cloud backups become your blind spot. Download the white paper to see how teams are enforcing backup posture across clouds without scripts, silos, or surprises.

How to Cut Cloud Backup Retention Costs Without Risking Data

Last week, I joined AWS’s Anthony Fiore for a live webinar where we tackled the messy realities of cloud backup retention and how to fix them without sacrificing safety. If you missed it, you can watch the full recording here or read the recap below.

What problems do teams run into with backup retention?

- Retention drift: A team ships an EC2 app with snapshots set to “forever.” Two years later, you’re paying for hundreds nobody dares delete.

- Protection gaps: Migrations happen, backups get forgotten. Some workloads have no protection at all.

- S3 confusion: Versioning and replication aren’t time travel. A valid delete wipes data; durability won’t save you.

- Inconsistent rules: One team keeps prod for 3 years, another for 45 days. Finance wants 7 years. It’s chaos.

Amazon S3’s 11 nines of durability (99.999999999%) protects against infrastructure failure, not against a developer mistake, ransomware, or an accidental or malicious delete.

What does posture-aware automation mean (vs. tagging)?

Tags (env, BU, cost center) help, but they’re incomplete and drift over time. This is why many teams are moving toward Cloud Backup Posture Management.

Posture-aware automation:

- Finds all persistent data (S3, EC2/EBS, RDS, etc.)—tagged or not.

- Classifies by context (prod vs. dev, sensitivity like PII or financial data).

- Applies policies to the workload’s posture, not its tags (e.g., Prod + PII ⇒ 1-year retention with immutability).

- Enforces org-wide rules with guardrails (Backup Posture Controls) so teams can self-serve without breaking policy.

Automate based on the workload, not what someone remembered to tag.
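Here is a minimal sketch of posture-aware assignment: the policy follows from what the workload is (environment and data sensitivity), not from its tags. The classification inputs are assumed to come from a discovery and classification step; apart from the article's Prod + PII example, the policy values are hypothetical picks from the retention ranges discussed below.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    retention_days: int
    immutable: bool
    cross_region_copy: bool


@dataclass
class Resource:
    """A discovered resource; fields are assumed to come from classification, not tags."""
    resource_id: str
    environment: str         # "prod" or "dev"
    data_classes: frozenset  # e.g. frozenset({"pii", "financial"})


def policy_for(r: Resource) -> Policy:
    """Pick a policy from posture, checking the most specific rule first."""
    prod = r.environment == "prod"
    if prod and "financial" in r.data_classes:
        return Policy(retention_days=7 * 365, immutable=True, cross_region_copy=True)
    if prod and "pii" in r.data_classes:
        # The article's example: Prod + PII => 1-year retention with immutability.
        return Policy(retention_days=365, immutable=True, cross_region_copy=True)
    if prod:
        return Policy(retention_days=365, immutable=False, cross_region_copy=True)
    return Policy(retention_days=14, immutable=False, cross_region_copy=False)


exports = Resource("s3://customer-exports", "prod", frozenset({"pii"}))
print(policy_for(exports))
```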

How can you cut retention costs in AWS?

1) Classify before you retain

Build an inventory of S3 buckets, EBS volumes, RDS instances, and other persistent data. Group by business context, not account. Apply retention tiers: Dev/Test, Internal Prod, Customer Prod with PII, and Financial Systems.

2) Right-size retention windows

- Dev/Test: 7–30 days

- Typical Prod: 90–365 days

- Regulated/Financial: 5–7 years (with immutability windows, usually 30–90 days)

Start from RPO/RTO and cloud backup compliance requirements. Immutability is powerful—use it sparingly.

3) Automate enforcement

Set org-wide guardrails: “Any prod resource must have ≥ 8 months retention and cross-region copy.” When teams drift, auto-trigger tickets in ServiceNow/Jira.
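That guardrail can be expressed as a check over a resource inventory. This sketch is illustrative: the inventory fields are hypothetical, "8 months" is approximated as 240 days, and rather than calling ServiceNow or Jira APIs it returns ticket payloads you could hand to whichever integration you use.

```python
from dataclasses import dataclass

MIN_PROD_RETENTION_DAYS = 240  # "at least 8 months", approximated as 8 x 30 days


@dataclass
class ProtectedResource:
    """Hypothetical inventory entry."""
    resource_id: str
    owner_team: str
    environment: str
    retention_days: int  # 0 means no backup policy at all
    cross_region_copy: bool


def guardrail_violations(inventory: list[ProtectedResource]) -> list[dict]:
    """Return one ticket payload per prod resource that breaks the guardrail."""
    tickets = []
    for r in inventory:
        if r.environment != "prod":
            continue  # the guardrail only covers production
        reasons = []
        if r.retention_days < MIN_PROD_RETENTION_DAYS:
            reasons.append(f"retention {r.retention_days}d < {MIN_PROD_RETENTION_DAYS}d")
        if not r.cross_region_copy:
            reasons.append("no cross-region copy")
        if reasons:
            tickets.append({
                "assignee_team": r.owner_team,
                "summary": f"Backup guardrail violation: {r.resource_id}",
                "details": "; ".join(reasons),
            })
    return tickets


inventory = [
    ProtectedResource("rds:billing", "payments", "prod", 365, True),
    ProtectedResource("ebs:vol-123", "search", "prod", 30, False),
    ProtectedResource("s3:scratch", "data", "dev", 7, False),
]
tickets = guardrail_violations(inventory)
```

Only the under-retained production volume produces a ticket; the dev bucket is out of scope for this rule.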

4) Use AWS-native tools—but tie them to outcomes

S3 versioning, Object Lock, replication, lifecycle policies, and Storage Lens are helpful. But revisit quarterly—cost optimization is a program, not a one-off.

What does a simple retention matrix look like?

Where does Eon fit?

- Built on Amazon S3 durability, but adds time-based recovery and posture awareness across AWS.

- Auto-discovers resources, classifies data, and applies policy at scale—no per-account tweaking.

- Backup Posture Controls give you central guardrails plus team freedom.

- Cost optimizations are baked into every backup method. On average, Eon customers cut their cloud data protection costs by 40%—and with Eon’s Cost Explorer, teams can see exactly what’s driving spend and where to cut it further.

Try the Eon Backup Posture Assessment

We’re teaming with AWS, Google Cloud, and Microsoft Azure on an assessment that scans your org and reports:

- What persistent data you have (by service/account)

- Which protection features are enabled (versioning, replication, cross-region, immutability)

- Gaps, over-retention, and quick wins to cut costs without adding risk

Request your free AWS × Eon Backup Posture Assessment report or explore how peers like Innago cut 40% of backup costs.

Common questions about retention costs

Q: We already use lifecycle policies. Anything left to optimize?

A: Absolutely. Lifecycle policies are a good starting point, but they only move data between storage classes or expire objects. You’ll still want to regularly review how data is accessed, trim immutability windows to match business needs, and confirm retention rules align with workload tiers (dev/test vs. prod vs. regulated data). Think of cost optimization as a continuous process, not a one-time configuration.

Q: Can we group accounts under different rules?

A: Yes. With Backup Posture Controls, you can scope rules by account, OU, tags, or even metadata. That means one business unit can operate under stricter compliance requirements while another follows lighter retention rules—without losing central enforcement. It avoids the chaos of each team inventing their own retention policy.

Q: Is replication the same as backup?

A: No. Replication is useful, but it mirrors everything—including accidental deletes, misconfigurations, and ransomware events. A proper backup gives you point-in-time recovery, so you can roll back to a safe state before the error or attack occurred. Replication keeps data available, but backup keeps it recoverable.

Cloud Backup Posture Management: What It Is and Why You Need It

What is Cloud Backup Posture Management?

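In today's world of ever-expanding cloud infrastructure, where new resources pop up faster than you can say "multi-cloud," cloud backup management is becoming increasingly complicated. Cloud Backup Posture Management (CBPM) is the continuous process of discovering cloud resources, classifying the data they hold, and applying the right backup policy to each one.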

The cloud backup posture management process follows these basic steps:

- Identifying new resources or changes in cloud environments

- Tagging resources to indicate the type of data they hold (e.g., Personally Identifiable Information, Protected Health Information, Financial Information, and more)

- Applying the appropriate backup policy based on data type

- Backing up accordingly

- [Hit repeat]

Why is CBPM so important?

Many enterprises have long treated cloud backups as a mere insurance policy for when disaster strikes — a static repository of data that just… sits there.

Sure, that may have worked in the past. But those days are over.

Any cloud-reliant enterprise has most likely watched the complexity and scale of today’s cloud environments grow in front of their eyes. As such, CBPM is becoming a critical component of cloud backup, especially as more organizations migrate their applications to cloud providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

Some of the inefficiencies and risks of overlooking cloud backup strategy:

- Disorganization and Sprawl: Without proper posture management, backups become chaotic: multiple copies of some files may be stored unnecessarily, while other critical data is left unprotected. These duplicates waste significant space in backup stores and make it difficult to determine which version of a file is the most recent or accurate. It’s like having 5 copies of the same DVD and not being able to tell from the box alone which one has the fewest scratches.

- Slow and Inefficient Retrieval: In a crisis, the ability to quickly retrieve data can make or break a company. So, why do we settle for traditional backup systems, which often lack the organizational framework needed for efficient access? When backups are scattered across different storage solutions or poorly mapped, restoring data becomes a time-consuming process that can prolong downtime and disrupt operations.

- Increased Costs: Redundant and unoptimized backups result in higher storage costs. As data grows, so do the expenses associated with managing it. Without a clear strategy for reducing inefficiencies, businesses may find themselves paying for storage they don’t need.

- Evolving Threats: Traditional backup systems also fail to keep pace with the growing sophistication of security threats. Backed-up data can be an easy target for ransomware and other cyberattacks… and in a ransomware attack, the ability to seamlessly access backups can be the difference between a graceful recovery and a company-tanking disaster.

- Compliance Challenges: As regulations around data management become stricter, businesses must ensure their backups meet compliance standards. Without proper posture management, it’s easy for organizations to fall short of these requirements, possibly resulting in penalties and reputational damage.

There’s no question that cloud storage technology has gotten crazy good, with innovations and improvements arriving daily. Backup management, however, hasn’t kept pace.

Cloud Backup Posture Management (CBPM) bridges this gap, offering a comprehensive solution to the challenges posed by traditional backup approaches.


The Benefits of Cloud Backup Posture Management

In an era where organizations are increasingly reliant on cloud infrastructure, the stakes for how and where data is stored are higher than ever. A single misstep in backup posture can lead to significant consequences, from compliance penalties to diminished customer trust to the endless frustration of lost data triage.

Consider the financial and reputational damage caused by data breaches or prolonged system outages. In these moments, the effectiveness of a company’s backup strategy can make all the difference. CBPM not only ensures that backups are secure and accessible, but also aligns them with a company’s long-term goals, making them a critical component of a future-proof infrastructure.

Eon’s Approach: A New Era for Cloud Backups

At Eon, we’re leading the charge in reimagining cloud backup management for the digital age. With cutting-edge automation and intelligent mapping, our platform makes CBPM accessible, efficient, and indispensable.

- Eon’s one-of-a-kind platform scans and maps cloud environments, then uses that map to apply dynamic, automated backup policies. With these capabilities in place, companies never have to worry about manual tagging or about backing up too much or too little. Um… you’re welcome!

- Traditional backup snapshots are outdated — they place data in a black box that limits companies’ ability to access and strategically leverage the data they need. At Eon, we think data you can’t see is data wasted. So, we want to prioritize data you can actually use. That’s why we empower companies with the ability to query and recover exactly what they need — be it a single table or file — directly from backups.

- Thanks to our platform, cloud-reliant businesses can finally enjoy automated backup posture management — with autonomous scanning, mapping and classification of resources, without unnecessary manual effort. No pain no gain? Nope. Just no pain.

How does Eon’s Cloud Backup Posture Management work?

More than a mere tech solution, CBPM is a proactive, strategic approach to managing cloud backups. Instead of treating backups as a passive archive, CBPM makes backed-up data visible and automates how it is organized, optimized, and retrieved. It ensures that backups aren’t just accessible, but also aligned with an organization’s broader cloud infrastructure goals. Sounds like something worth celebrating and embracing!

CBPM allows businesses to:

- Map and Organize Resources: Automatically scans and classifies cloud resources, creating a clear map of what’s backed up, where, and why. This ensures backups are logically structured and easy to access when needed.

- Identify Gaps and Redundancies: Spots vulnerabilities such as unprotected data, and eliminates unnecessary duplicates to improve efficiency.

- Enhance Compliance and Security: Ensures backups meet data compliance standards and are protected against threats, safeguarding sensitive information from both internal and external risks.

- Improve Accessibility: Makes retrieving critical data faster and simpler, reducing downtime during emergencies and minimizing operational disruption.
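Gap and redundancy detection, the second capability in the list above, comes down to comparing what exists with what has been backed up. The sketch below is a hypothetical Python illustration. It assumes each backup record carries a resource ID, a content hash, and a timestamp. `BackupRecord`, `find_gaps`, and `find_redundant` are invented names, not part of any real backup API. Among copies with identical content for the same resource, it keeps the newest and flags the rest.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass(frozen=True)
class BackupRecord:
    backup_id: str
    resource_id: str
    content_hash: str  # hash of the backed-up data, assumed recorded at backup time
    taken_at: int      # e.g. a Unix timestamp


def find_gaps(inventory: Set[str], catalog: List[BackupRecord]) -> Set[str]:
    """Resources that exist in the environment but have no backup at all."""
    protected = {b.resource_id for b in catalog}
    return inventory - protected


def find_redundant(catalog: List[BackupRecord]) -> List[str]:
    """Backups duplicating identical content of the same resource.

    Within each (resource, content) group the newest copy is kept; older ones are flagged.
    """
    groups: Dict[Tuple[str, str], List[BackupRecord]] = defaultdict(list)
    for b in catalog:
        groups[(b.resource_id, b.content_hash)].append(b)
    redundant = []
    for copies in groups.values():
        copies.sort(key=lambda b: b.taken_at, reverse=True)
        redundant.extend(b.backup_id for b in copies[1:])
    return sorted(redundant)


catalog = [
    BackupRecord("b1", "db-users", "h1", 100),
    BackupRecord("b2", "db-users", "h1", 200),  # same content as b1, newer
    BackupRecord("b3", "db-users", "h2", 300),
    BackupRecord("b4", "bucket-logs", "h9", 150),
]
print(find_gaps({"db-users", "bucket-logs", "vm-reports"}, catalog))  # {'vm-reports'}
print(find_redundant(catalog))  # ['b1']
```

Flagged copies are only candidates. A real cleanup would also check retention rules and legal holds before deleting anything.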

By enabling these capabilities, CBPM transforms cloud backups from an overlooked necessity into a strategic asset, empowering greater confidence and resilience.

Cloud Backups: More than a Mere Backup Plan

Cloud technology has revolutionized how businesses operate, offering unparalleled opportunities for scalability, collaboration, and innovation. So, don’t cloud backups deserve more than a passive role in cloud strategies?

With CBPM, businesses can take control of their data, ensuring that backups are not only secure, but also an integral part of their cloud infrastructure.

Because when it comes to the cloud, your data is only as strong as your backup strategy.


StructuredWeb Reduces Cloud Backup Restore Time by 98% with Eon

As a leading provider of channel marketing automation SaaS to large enterprises such as IBM, ServiceNow, Google, and Zoom, StructuredWeb needed to streamline its cloud infrastructure backup process. Introducing cloud backup posture management (CBPM) would make its IT operations more efficient and let the team focus more on strategic data efforts.

Challenge

Before partnering with Eon, StructuredWeb faced challenges in managing its cloud infrastructure backups, including:

- Ongoing, manual classification and tagging of resources became time-consuming and increased the risk of human error.

- Retrieving critical data felt like searching for a needle in a haystack—time-consuming and frustrating.

“Our team is committed to continuously advancing our technology and infrastructure to support large technology enterprises. With Eon, we’ve invested in cutting-edge solutions that provide full visibility into our dynamic cloud resources. By streamlining backup management and optimizing efficiencies, we’ve reduced complexity and costs while enhancing data restoration speed and reliability. This ongoing investment reflects our dedication to delivering the best industry standards for our customers.”

— Daniel Nissan, CEO

Solution

- Full visibility: Thanks to Eon’s inventory view and dashboard, StructuredWeb now has complete visibility into their backed-up resources. No more chasing down vendor snapshots.

- Automated resource scanning and classification: Eon’s platform has simplified StructuredWeb’s manual tagging process, ensuring backups meet both business and compliance needs while cutting costs.

- Instant access to backups: Eon’s database explorer allowed the team to run SQL queries directly on backed-up databases without restoring full database clusters, sparing them the time and frustration of a lengthy restoration process.

Results

With Eon’s cloud backup solution, StructuredWeb experienced impressive results:

- 98% reduction in backup retrieval time.

- 20% less time spent by the IT team on manual classification and tagging.

- Full compliance with industry regulations within 30 days.

- Estimated 40% annual savings in storage and restoration costs.

What They Say

“Eon has completely revolutionized our cloud backup strategy, providing the efficiency and scalability needed to support our growing list of enterprise customers. By eliminating complexity and reducing costs, Eon enables us to allocate more resources to innovation and business growth, ensuring we stay ahead in a rapidly evolving technology landscape.”

— Daniel Nissan, CEO
