Resources

All the latest, all in one place. Discover Eon’s breakthroughs, updates, and ideas driving the future of cloud backup.

The Ultimate Guide to Cloud Backup Posture Management

Cloud Backup Posture Management (CBPM) helps you protect data, stay compliant, and control backup costs—without relying on manual checks or incomplete snapshots.

As cloud environments grow, backup responsibilities often slip through the cracks. A single missed tag or forgotten resource can result in months of unprotected data and significant compliance risk.

CBPM fixes this. It’s a continuous process of discovering resources, classifying data, implementing the right backup policies, and monitoring everything in real time. When done right, it reduces risk, strengthens resilience, and puts you back in control.

The 4 Phases of Cloud Backup Posture Management (CBPM)

We used to think of CBPM as a checklist of best practices. But in practice, these best practices fall into four clear, repeatable phases—each designed to bring order to your backup landscape, reduce compliance risk, and keep costs under control.

Whether you're managing AWS, Azure, Google Cloud, or all of the above, here’s what it takes to implement CBPM at scale.

Phase 1: Discover and Classify Cloud Resources

You can’t back up what you can’t see.

Start by continuously scanning your cloud environments to detect new or modified resources—whether they’re VMs, databases, object stores, or ephemeral services. Don’t rely solely on infrastructure-as-code (IaC)—untracked infrastructure and repurposed resources often live outside of it.

Once discovered, classify the data these resources hold. Is it PII? Healthcare data? Financial records? Each type has its own backup and compliance requirements. Use consistent tagging based on data type to lay the foundation for policy automation.
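
The tagging step can be sketched roughly as follows. This is a minimal illustration, not a real scanner: the `Resource` type, the `data-classification` tag key, the category names, and the sensitivity ordering are all assumptions made for the example.

```python
from dataclasses import dataclass, field

# Hypothetical priority order: when several categories are detected,
# the most sensitive one becomes the tag value.
SENSITIVITY_ORDER = ["phi", "pii", "financial", "internal", "public"]


@dataclass
class Resource:
    resource_id: str
    detected_categories: set[str]
    tags: dict[str, str] = field(default_factory=dict)


def classify(resource: Resource) -> Resource:
    """Attach one consistent data-classification tag to a resource."""
    for category in SENSITIVITY_ORDER:
        if category in resource.detected_categories:
            resource.tags["data-classification"] = category
            return resource
    # Nothing detected: flag for review instead of guessing.
    resource.tags["data-classification"] = "unclassified"
    return resource


inventory = [
    Resource("db-orders", {"pii", "financial"}),
    Resource("bucket-logs", {"internal"}),
    Resource("vm-scratch", set()),
]
tagged = {r.resource_id: classify(r).tags["data-classification"] for r in inventory}
print(tagged)
```

Using a single tag key with a fixed vocabulary keeps later policy lookups simple: every resource carries exactly one classification value.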

Phase 2: Define and Apply Backup Policies

Different data requires different protection levels. Once your resources are tagged, define backup policies based on those tags, including frequency, retention, encryption, replication, and immutability.

Then, apply them automatically. The key is consistency: policies should be enforced across all accounts and clouds, ensuring that nothing is missed and nothing is over-protected.
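
As a rough sketch of tag-driven policies, the mapping below resolves a resource's tags to a policy covering the five dimensions listed above. The tag key, the policy values, and the `policy_for` helper are hypothetical and only illustrate the lookup pattern.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BackupPolicy:
    frequency_hours: int
    retention_days: int
    encrypted: bool
    cross_region: bool
    immutable: bool


# Illustrative values only; real numbers come from your compliance requirements.
POLICIES = {
    "pii": BackupPolicy(24, 365, True, True, True),
    "financial": BackupPolicy(24, 2555, True, True, True),
    "internal": BackupPolicy(168, 30, True, False, False),
}


def policy_for(tags: dict) -> Optional[BackupPolicy]:
    """Return the policy for a resource, or None when it is untagged or unknown."""
    return POLICIES.get(tags.get("data-classification", ""))


print(policy_for({"data-classification": "pii"}))
print(policy_for({}))  # None: untagged resources need attention, not a silent default
```

Returning `None` for untagged resources makes gaps visible instead of hiding them behind a catch-all policy.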

Without automation, teams often default to backing up everything “just in case,” which drives up costs without actually improving compliance.

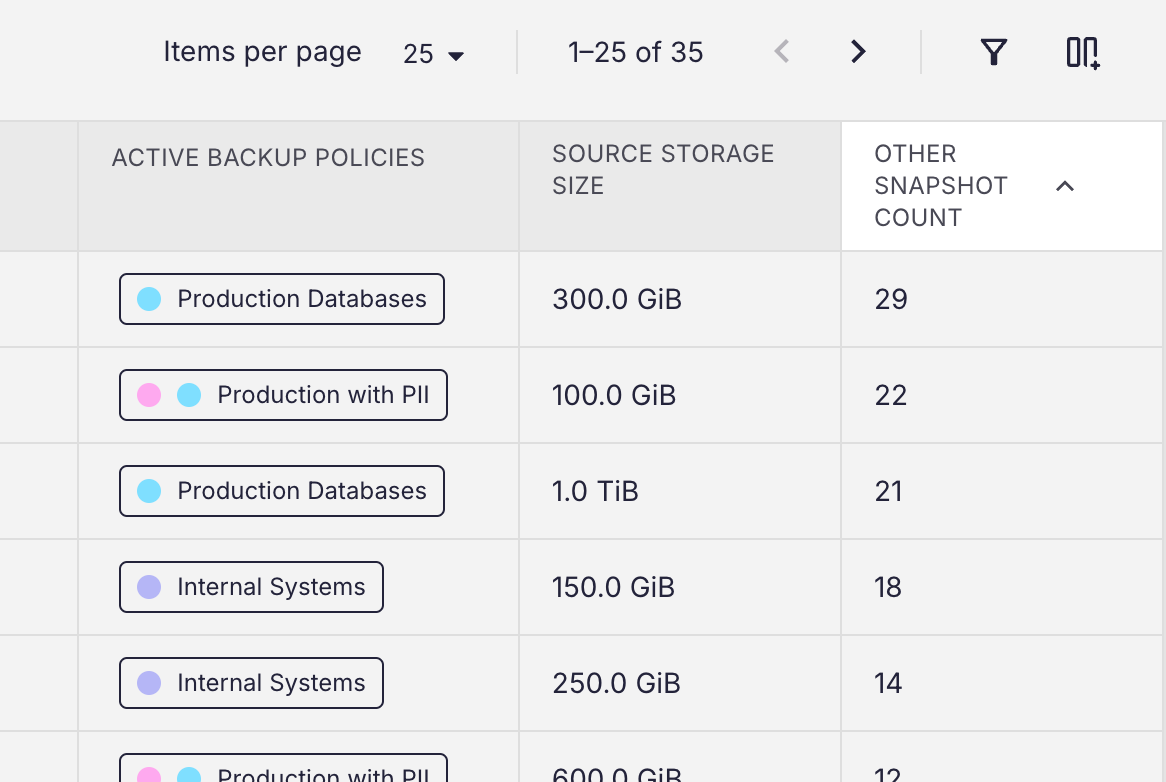

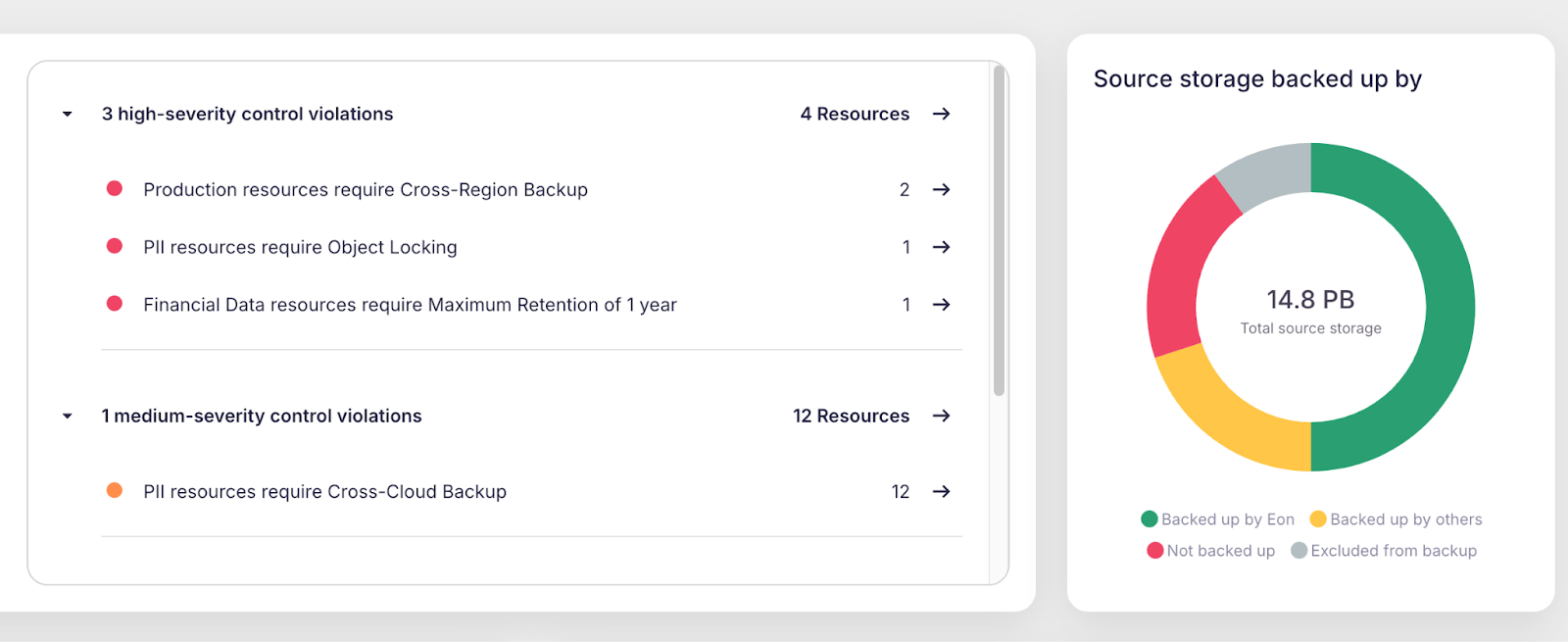

Phase 3: Monitor for Drift and Enforce Compliance

Cloud environments change constantly—resources move, tags disappear, policies drift. That’s why monitoring isn’t optional. Even a single missing tag or misaligned retention rule can result in compliance violations or data loss.

Track backup posture in real time, detect and remediate misconfigurations, and enforce required settings, such as cross-region replication or retention minimums. This is what prevents silent failures and surprise audit findings.
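
A drift check can be as simple as comparing required settings against what is actually configured. The sketch below assumes settings are plain dictionaries and that numeric settings (such as retention days) are minimums; both are simplifying assumptions for illustration.

```python
def find_drift(required: dict, actual: dict) -> dict:
    """Return settings whose actual value violates the required value.

    Numeric settings are treated as minimums; everything else must match exactly.
    """
    drift = {}
    for key, want in required.items():
        have = actual.get(key)
        if isinstance(want, bool):
            ok = have == want
        elif isinstance(want, (int, float)):
            is_number = isinstance(have, (int, float)) and not isinstance(have, bool)
            ok = is_number and have >= want
        else:
            ok = have == want
        if not ok:
            drift[key] = {"required": want, "actual": have}
    return drift


required = {"retention_days": 365, "cross_region": True, "encrypted": True}
actual = {"retention_days": 90, "cross_region": True}  # 'encrypted' is missing
report = find_drift(required, actual)
print(report)
```

A missing setting is reported as drift with `actual` set to `None`, which mirrors the "tags disappear" failure mode described above.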

Look for tooling that consolidates backup and restore alerts across providers and automatically generates audit-ready reports.

Phase 4: Track Costs and Drive Accountability

Cloud backups can quietly become a budget sink if not managed well.

Use cost attribution and chargeback models to map backup spend to the teams or business units responsible. This promotes accountability, helps right-size retention policies, and prevents unnecessary duplication of low-risk data.

When teams see their own backup bill, they’re more likely to optimize it.
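
A chargeback roll-up might look like the following sketch. The line-item shape and the `team` tag key are assumptions; real billing exports have their own schemas.

```python
from collections import defaultdict


def chargeback(line_items, tag_key="team"):
    """Sum backup spend per owning team; untagged spend is surfaced separately."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "unattributed")
        totals[owner] += item["cost_usd"]
    return dict(totals)


line_items = [
    {"resource": "db-orders-backup", "cost_usd": 120.0, "tags": {"team": "payments"}},
    {"resource": "logs-archive", "cost_usd": 40.5, "tags": {"team": "platform"}},
    {"resource": "old-snapshots", "cost_usd": 75.25, "tags": {}},
    {"resource": "db-ledger-backup", "cost_usd": 60.0, "tags": {"team": "payments"}},
]
totals = chargeback(line_items)
print(totals)
```

Keeping an explicit `unattributed` bucket is a deliberate choice: it shows how much spend still lacks an owner.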

Related: Learn how to cut cloud data retention costs in a live session with AWS storage specialists

1) Optimize Storage for Cost and Efficiency

Cloud storage costs can easily spiral out of control. This risk grows even worse when businesses take a "just in case" approach to their cloud backups. Without a savvy backup strategy, companies are liable to over-back up data or retain unneeded data for longer than necessary, leading to inflated costs without adding value.

So, how should teams approach their cloud backup strategy? Start by evaluating what data needs to be backed up and for how long. Data that’s critical to operations or tied to compliance regulations might require longer retention periods, but less critical data can have shorter lifespans. Additionally, eliminating redundant backups and optimizing storage policies can significantly reduce expenses.

By aligning backup retention policies with business needs, organizations not only save money but also make it easier to locate and restore data when necessary. Efficient storage management ensures that every dollar spent on cloud resources contributes directly to operational resilience.
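
To make the retention trade-off concrete, here is a back-of-the-envelope model. It assumes one full copy plus daily incrementals of a fixed size, and an illustrative price per GB-month; none of these figures come from a real bill.

```python
def steady_state_storage_gb(full_gb, daily_change_gb, retention_days):
    """Approximate stored volume: one full copy plus daily incrementals kept for retention_days."""
    return full_gb + daily_change_gb * retention_days


def monthly_cost_usd(stored_gb, price_per_gb_month):
    return stored_gb * price_per_gb_month


PRICE_PER_GB_MONTH = 0.023  # assumed price; check your provider's current pricing

one_year = monthly_cost_usd(steady_state_storage_gb(1000, 20, 365), PRICE_PER_GB_MONTH)
one_month = monthly_cost_usd(steady_state_storage_gb(1000, 20, 30), PRICE_PER_GB_MONTH)
print(f"365-day retention: ${one_year:.2f}/month")
print(f"30-day retention:  ${one_month:.2f}/month")
```

Under these assumptions, retention length dominates the bill far more than the size of the initial full copy.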

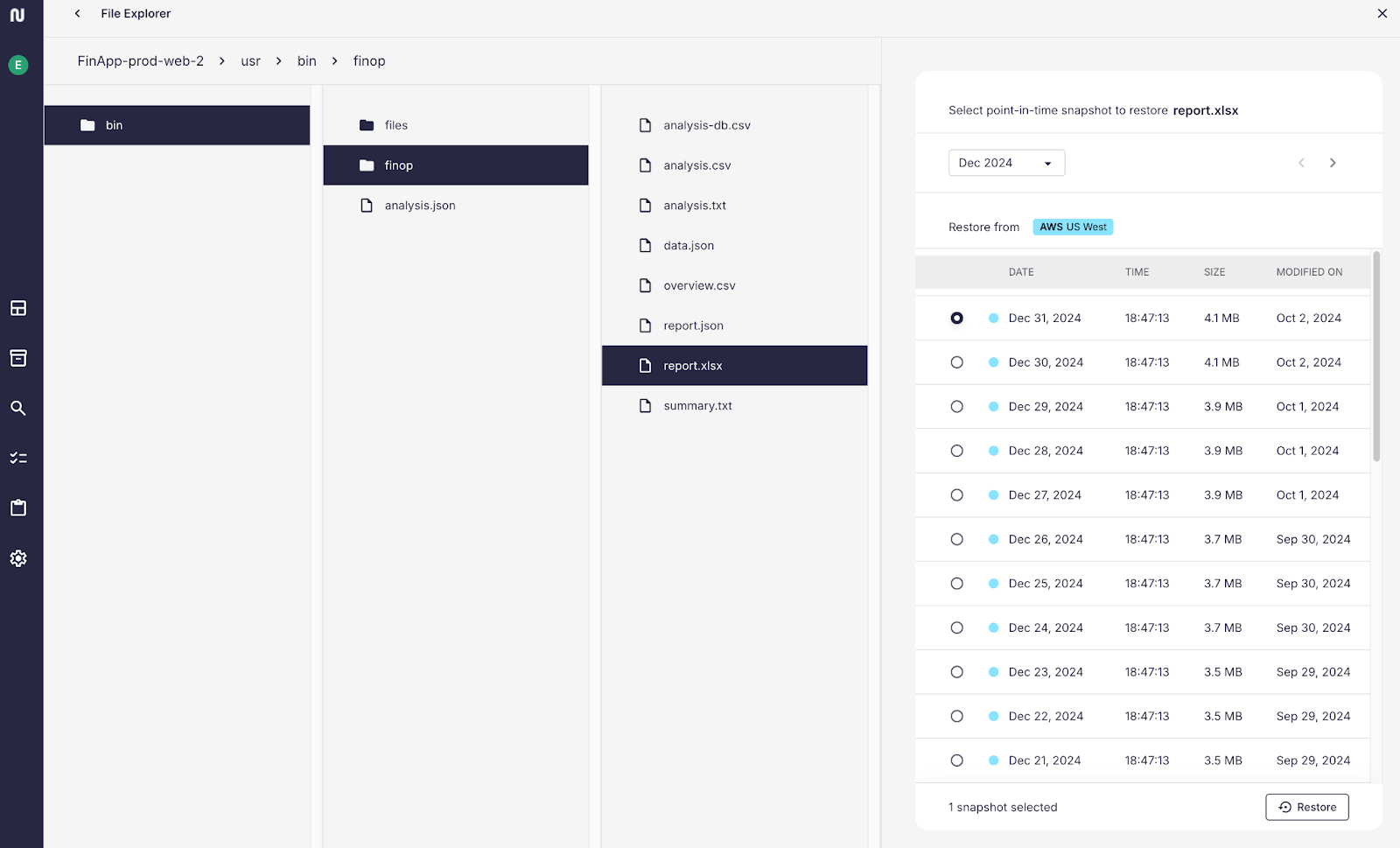

2) Granular Recovery for Rapid Access

When something goes wrong — anything from ransomware threats to data leakage to data center malfunctions — the speed at which a company can recover their data often determines the overall impact on business. Unfortunately, many traditional backup strategies require full-volume restores to access a single file or table — an inefficient and time-consuming triage solution, especially when issues only affect some but not all of a company’s data.

Granular recovery is revolutionizing the way businesses bounce back after a data challenge. With granular recovery capabilities in their tool chest, businesses can pinpoint the exact data they need and recover it without restoring unnecessary files or systems. This saves valuable time, reduces operational disruptions, and allows teams to quickly resume their work.

Imagine a compliance audit that requires the retrieval of specific records from months ago. Instead of sifting through entire databases, granular recovery enables IT teams to locate the relevant information in seconds. The ability to search across backups and recover data at a granular level transforms backups from a static archive into a dynamic tool for operational continuity.

3) Regularly Audit Your Backup Strategy

A "set it and forget it" approach to backups is a recipe for disaster. Indeed, as cloud environments evolve, backups are ever more likely to drift out of alignment with organizational needs.

Regular audits ensure that backup strategies remain effective and up to date.

These audits should include a full inventory of resources to verify that all critical systems and data are protected. It’s also important to assess whether backup policies are being applied correctly and continue to meet the latest regulatory compliance requirements. Testing restoration processes is another essential step — there’s no better way to ensure your backups are reliable than by simulating a real-world recovery scenario.
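
One simple way to check a test restore is to compare checksums of the original and restored data. The sketch below works on local files with hypothetical names; a real restore test would also cover application-level checks.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_restore(original: Path, restored: Path) -> bool:
    return sha256_of(original) == sha256_of(restored)


# Simulated drill: one faithful restore and one truncated restore.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "orders.db"
    good = Path(tmp) / "restored_ok.db"
    bad = Path(tmp) / "restored_truncated.db"
    src.write_bytes(b"order-records" * 1000)
    good.write_bytes(src.read_bytes())
    bad.write_bytes(src.read_bytes()[:-1])
    intact = verify_restore(src, good)
    corrupted = verify_restore(src, bad)

print(intact, corrupted)
```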

Frequent audits will help businesses catch potential gaps before they escalate into serious issues, providing peace of mind that data is securely stored but still accessible when needed most.

4) Strengthen Security to Protect Your Backups

Backups are a prime target for cyber threats like ransomware, making security a non-negotiable part of any cloud backup strategy. A multi-layered security approach can safeguard data against both external attacks and internal mishaps.

Key security measures include robust access controls to ensure that only authorized users can access or modify backups. Encryption — for data both at rest and in transit — is also critical for protecting sensitive information. Additionally, anomaly detection systems can alert security teams to unusual activity, such as unexpected spikes in data changes, which may indicate a potential security breach.
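
As an illustration of the "unexpected spike in data changes" signal, here is a deliberately simple z-score check. Production anomaly detection is usually more sophisticated; the threshold and the sample data are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history, today, threshold=3.0):
    """Flag today's value when it sits more than `threshold` standard deviations above the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today > mu
    return (today - mu) / sigma > threshold


daily_changed_gb = [10, 12, 11, 9, 13, 10, 11]
print(is_anomalous(daily_changed_gb, 12))  # ordinary day
print(is_anomalous(daily_changed_gb, 95))  # sudden mass change, worth an alert
```

A sudden jump in changed data is one pattern worth alerting on because mass encryption by ransomware rewrites large amounts of data at once.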

Integrating security into cloud backup strategy not only protects a company’s data, but also builds trust with customers and stakeholders. The result is a business that remains trusted, confident, and resilient in the face of evolving threats.

5) Automate to Reduce Complexity

Finally, managing backups manually in a cloud environment is a daunting task, so companies should also keep an eye out for solutions that allow them to automate management of their cloud backup infrastructure. Indeed, the dynamic nature of modern infrastructures — with resources being created, modified, and retired daily — makes manual tagging and configuration nearly impossible to maintain. Automation offers a solution for many of these hurdles.

By automating processes like resource discovery, backup policy enforcement, and real-time monitoring, businesses can eliminate the risk of human error and improve efficiency. For example, automated tools can classify resources and apply the correct backup policies based on predefined criteria, ensuring consistency and freeing up IT teams to spend more time driving innovation.

Automation also offers the benefit of real-time notifications, keeping administrators consistently informed about the health and status of their backup environment. With proactive alerts, potential issues can be addressed before they escalate, ensuring backups remain reliable and secure.

Move Forward with Better Backups

A better, future-proof cloud backup strategy doesn’t have to be overwhelming.

By automating processes, optimizing storage, enabling granular recovery, executing regular audits, and prioritizing security, businesses can transform their backups from a last resort safety net into a reliable and efficient asset that drives value and innovation across the enterprise.

At Eon, we’ve seen firsthand how these strategies can revolutionize backup management — and we’ve built the tools that allow companies to implement them. We’re always here to provide guidance and expertise to help businesses make the most of their cloud environments.

The bottom line? Backups shouldn’t just be an insurance policy — at their best, they can be a strategic tool for success. Make this the year you take your cloud backup strategy to the next level.

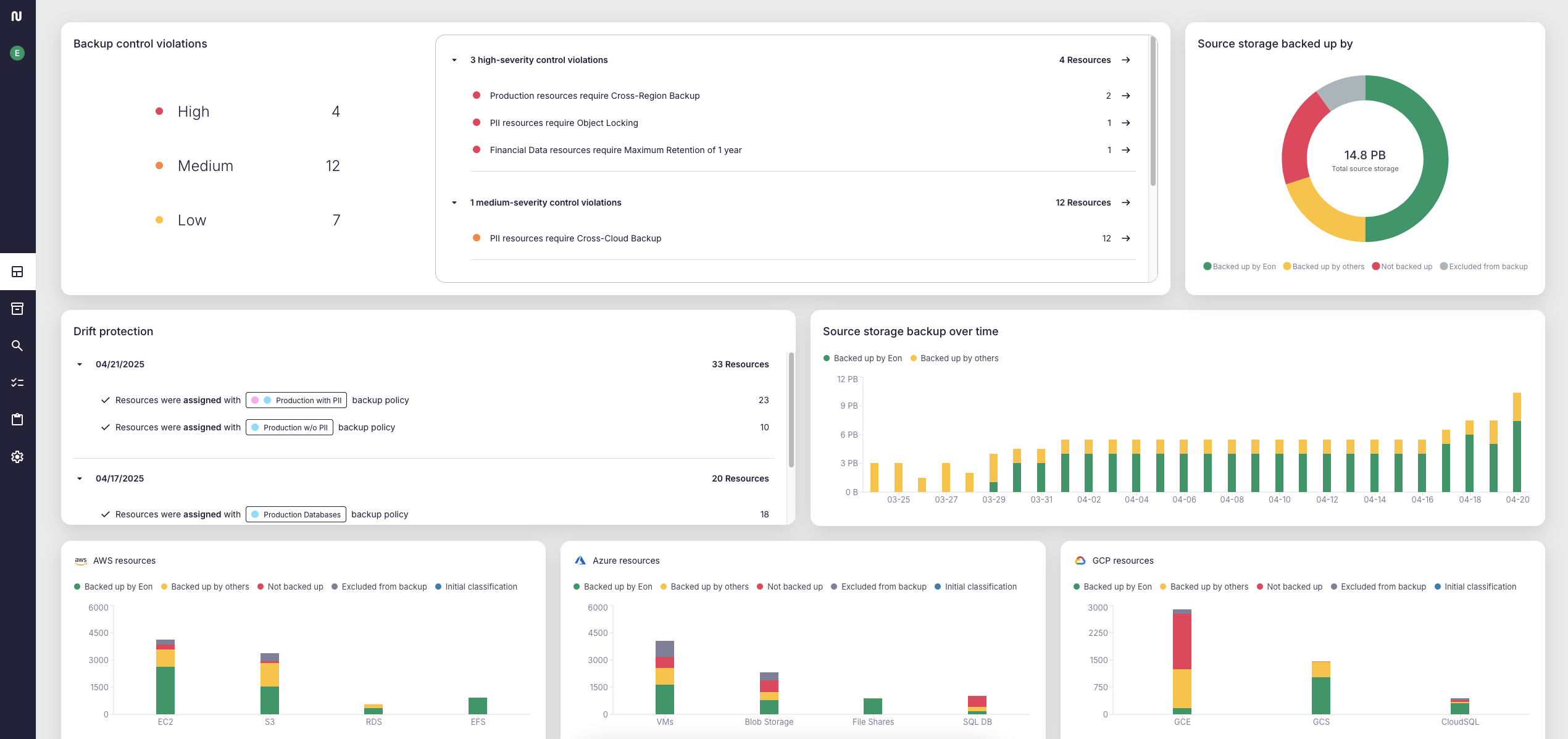

Why CBPM Needs to Be Automated

While the phases outlined above seem clear, putting them all together requires a lot of resources and attention. Tagging automation, policy enforcement, and reporting might sound simple, but stitching together AWS Config, Macie, Audit Manager, Lambda, and other cloud-native tools introduces both cost complexity and operational burden, especially at scale. And that's before accounting for the human time needed to monitor, troubleshoot, and maintain it all.

Automating CBPM with Eon

Building your own CBPM framework is possible, but it’s complex and costly to scale, even with cloud-native tooling. Supporting services like resource inventory, data classification, and audit logging come with their own cost layers before you even get to storage. Add in the time for writing scripts, chasing drift, and prepping reports, and the ‘DIY CBPM’ route quickly becomes expensive.

That’s where Eon comes in.

Eon is a fully integrated CBPM platform that automates the entire lifecycle:

- Continuous resource discovery across multi-cloud, multi-account setups.

- Automatic sensitive data and environment classification, removing manual error entirely.

- Backup policy orchestration maps directly to compliance frameworks like HIPAA, GDPR, and CCPA. For example, Eon automatically aligns PII-tagged backups with HIPAA retention rules and enables object lock to meet immutability standards.

- Centralized monitoring, audit reporting, and chargeback reporting across your cloud estate.

Eon replaces fragmented, costly workflows with a platform that automates backups and ingests them into an analytics-ready data lake. Your backup storage tier becomes queryable, visible, and easy to manage, so CBPM becomes the easiest part of your job.

Ready to check out Eon’s autonomous CBPM platform?

S3 Backup Cost Optimization: How to Reduce Your AWS Storage Bill

Every cloud budget has a few black holes—S3 backups are one of them. You configure versioning, add Object Lock for compliance, and expect smooth sailing. But then the bill arrives, and suddenly you're asking: Why are we paying so much for backup storage?

The answer usually lies in hidden behaviors and overlooked defaults that silently drive costs. This guide breaks down exactly where those charges come from and how to fix them. And for even more on this topic, join our upcoming live session on How to Cut Cloud Data Retention Costs.

4 Hidden S3 Backup Costs (and How to Fix Them)

1. S3 Versioning and Object Lock

Versioning saves every object change. Object Lock can prevent deletions. Combined, they create a compounding cost problem:

- Old versions pile up.

- Locked objects stay billable.

- Lifecycle rules rarely clean everything up consistently.

Real Talk: Lifecycle policies are great in theory, but in practice, they’re often misapplied or forgotten across siloed teams.
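
For reference, a lifecycle rule that trims old versions could be built like this. The dictionary follows the shape accepted by boto3's `put_bucket_lifecycle_configuration`; the helper name, the bucket name in the comment, and the day counts are assumptions. Note that versions still under an Object Lock retention period cannot be removed by lifecycle rules until that period ends.

```python
def noncurrent_version_rule(prefix: str, keep_days: int, keep_newest: int) -> dict:
    """Build one S3 lifecycle rule that expires old noncurrent versions."""
    return {
        "ID": f"expire-noncurrent-{prefix or 'all'}",
        "Status": "Enabled",
        "Filter": {"Prefix": prefix},  # empty prefix applies to the whole bucket
        "NoncurrentVersionExpiration": {
            "NoncurrentDays": keep_days,
            "NewerNoncurrentVersions": keep_newest,
        },
        # Clean up delete markers that no longer have any versions behind them.
        "Expiration": {"ExpiredObjectDeleteMarker": True},
    }


lifecycle = {"Rules": [noncurrent_version_rule("", keep_days=30, keep_newest=3)]}
print(lifecycle)

# With boto3, this would be applied roughly as:
#   s3.put_bucket_lifecycle_configuration(
#       Bucket="my-backup-bucket",  # hypothetical bucket name
#       LifecycleConfiguration=lifecycle,
#   )
```

Generating rules from one helper makes it easier to apply the same rule across buckets owned by different teams.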

2. Multipart Upload Leftovers

Abandoned multipart uploads accrue costs. They’re invisible in most dashboards and persist unless explicitly cleaned up.

Why it happens: Developers initiate large uploads, but if they fail or get interrupted, those parts linger.
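
A quick way to find leftovers is to filter in-progress uploads by age. The sketch below assumes entries shaped like the `Uploads` items returned by S3's ListMultipartUploads API (`Key`, `UploadId`, `Initiated`); the sample data and the seven-day cutoff are made up.

```python
from datetime import datetime, timedelta, timezone


def stale_uploads(uploads, now, max_age_days=7):
    """Return in-progress multipart uploads that were initiated before the cutoff."""
    cutoff = now - timedelta(days=max_age_days)
    return [u for u in uploads if u["Initiated"] < cutoff]


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
uploads = [
    {"Key": "backups/db.dump", "UploadId": "example-1",
     "Initiated": datetime(2025, 1, 2, tzinfo=timezone.utc)},
    {"Key": "backups/logs.tar", "UploadId": "example-2",
     "Initiated": datetime(2025, 1, 30, tzinfo=timezone.utc)},
]
to_abort = stale_uploads(uploads, now)
print([u["Key"] for u in to_abort])
```

For ongoing cleanup, S3 lifecycle rules also support an `AbortIncompleteMultipartUpload` action with a `DaysAfterInitiation` setting, which avoids running a script at all.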

3. Request Costs (GET, PUT, LIST, etc.)

Each API call costs fractions of a cent. However, with millions of objects, especially in log-heavy or analytics workloads, these costs add up quickly.

Common mistake: Frequent listing or PUT operations from automated jobs or pipelines that don’t batch effectively.
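
The effect of batching is easy to estimate. The per-request price below is an assumed figure for illustration only; check current pricing for your region and storage class.

```python
def request_cost_usd(num_requests, price_per_1000):
    """Cost of a number of API requests at a given price per 1,000 requests."""
    return num_requests / 1000 * price_per_1000


PUT_PRICE_PER_1000 = 0.005  # assumed USD per 1,000 PUT requests

objects = 50_000_000
unbatched = request_cost_usd(objects, PUT_PRICE_PER_1000)
batched = request_cost_usd(objects // 1000, PUT_PRICE_PER_1000)  # 1,000 records per object

print(f"one PUT per record:   ${unbatched:,.2f}")
print(f"1,000 records per PUT: ${batched:,.2f}")
```

Request charges scale with object count, not data volume, so bundling a thousand records per object cuts this line item by a factor of a thousand.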

4. Cross-Region Replication and Data Transfer

Replicating backups across regions adds egress and storage costs, often without clear visibility.

Red flag: Teams often configure replication once and then forget about it, even when workloads no longer require it.

Related Article: How to Cut Your AWS S3 Costs: Smart Lifecycle Policies and Versioning

S3 Storage Management Tips That Actually Save Money

Use Lifecycle Policies, But Be Sure to Track Them

Automated expiration and transition rules can reduce costs—if they’re applied correctly. Review regularly and validate they’re hitting all object prefixes and versions.

Choose the Right Storage Tier

Archive Carefully

Glacier sounds cheap—until you retrieve too often or delete early. Many teams miscalculate the actual access patterns.

⚠️ Only archive what you’re sure won’t be needed soon.
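
The "delete early" penalty comes from minimum storage durations: 90 days for S3 Glacier Instant Retrieval and Flexible Retrieval, 180 days for Glacier Deep Archive. A rough estimate of the pro-rated charge, using an assumed price per GB-month and a simplified 30-day month:

```python
# Minimum storage durations in days, keyed by S3 storage class name.
MIN_STORAGE_DAYS = {"GLACIER_IR": 90, "GLACIER": 90, "DEEP_ARCHIVE": 180}


def early_deletion_charge(size_gb, price_per_gb_month, storage_class, days_stored):
    """Estimate the charge for the unmet part of the minimum storage duration."""
    remaining_days = max(0, MIN_STORAGE_DAYS[storage_class] - days_stored)
    return size_gb * price_per_gb_month * remaining_days / 30


ASSUMED_PRICE = 0.0036  # illustrative USD per GB-month, not a quoted rate

print(early_deletion_charge(1000, ASSUMED_PRICE, "GLACIER", days_stored=30))
print(early_deletion_charge(1000, ASSUMED_PRICE, "GLACIER", days_stored=120))
```

Once the minimum duration has passed, the charge drops to zero, which is why short-lived data rarely belongs in an archive tier.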

Monitor and Alert Proactively

Use AWS Cost Explorer or Eon’s analytics to:

- Track cost spikes

- Spot anomalies across buckets

- Forecast future spend based on current behavior

Related Article: How to Protect Your S3 Backups: Advice from an AWS Storage Expert

S3 Backup Mistakes That Drive Up Costs

Misusing Glacier

Accessing cold storage too often? You’re negating the savings.

Misconfigured Intelligent Tiering

Without accurate access patterns, automatic transitions can backfire. Review logs, test setups.

Small Files, Big Costs

Millions of small files = millions of API calls. Bundle data when possible. Use Parquet or ORC instead of CSV or JSON for backups.

Missed VPC Endpoints

Transferring data over the public internet adds avoidable costs. Use S3 gateway VPC endpoints where possible; they carry no additional charge. Interface endpoints (AWS PrivateLink), by contrast, incur hourly and per-GB data processing fees.

How Eon Reduces S3 Backup Costs Automatically

S3 gives you the pieces. Eon gives you the system. We bring visibility, automation, and intelligence to your backup cost strategy:

- Real-time analytics across all buckets and storage classes

- Automated enforcement of lifecycle policies and usage guardrails

- Incremental deduplication to reduce versioning bloat

- Tier-aware retention controls based on real access behavior

- Integrated reporting to help FinOps and engineering align on cost drivers

- Delta Lake and Parquet support for analytics-ready backups that reduce small object overhead

Eon vs. Native S3 Versioning

S3 Backup Cost Control: Final Takeaways

Optimizing your S3 backups isn’t about guesswork or manual audits. It’s about control.

With Eon, you reduce cost, increase visibility, and keep backups secure and recoverable without lifting a finger.

Want to see how? Schedule a demo and we’ll show you.

Kubernetes Backups for Amazon EKS: How Eon Compares to Legacy Tools

Amazon Elastic Kubernetes Service (EKS) helps engineering teams scale containerized applications in the cloud without the burden of managing Kubernetes control plane infrastructure. But when it comes to backing up your stateful workloads, the complexity is very much still alive.

Backing up an EKS cluster, especially one running databases, internal tools, or persistent services, introduces new challenges. You’re dealing with namespace separation, persistent volumes, secrets, and tightly coupled configurations that traditional VM-based backup tools simply aren’t designed to handle.

This post explores why EKS backup is essential for modern cloud-native environments, the gaps in conventional solutions, and how Eon helps you protect your Kubernetes data without creating operational drag.

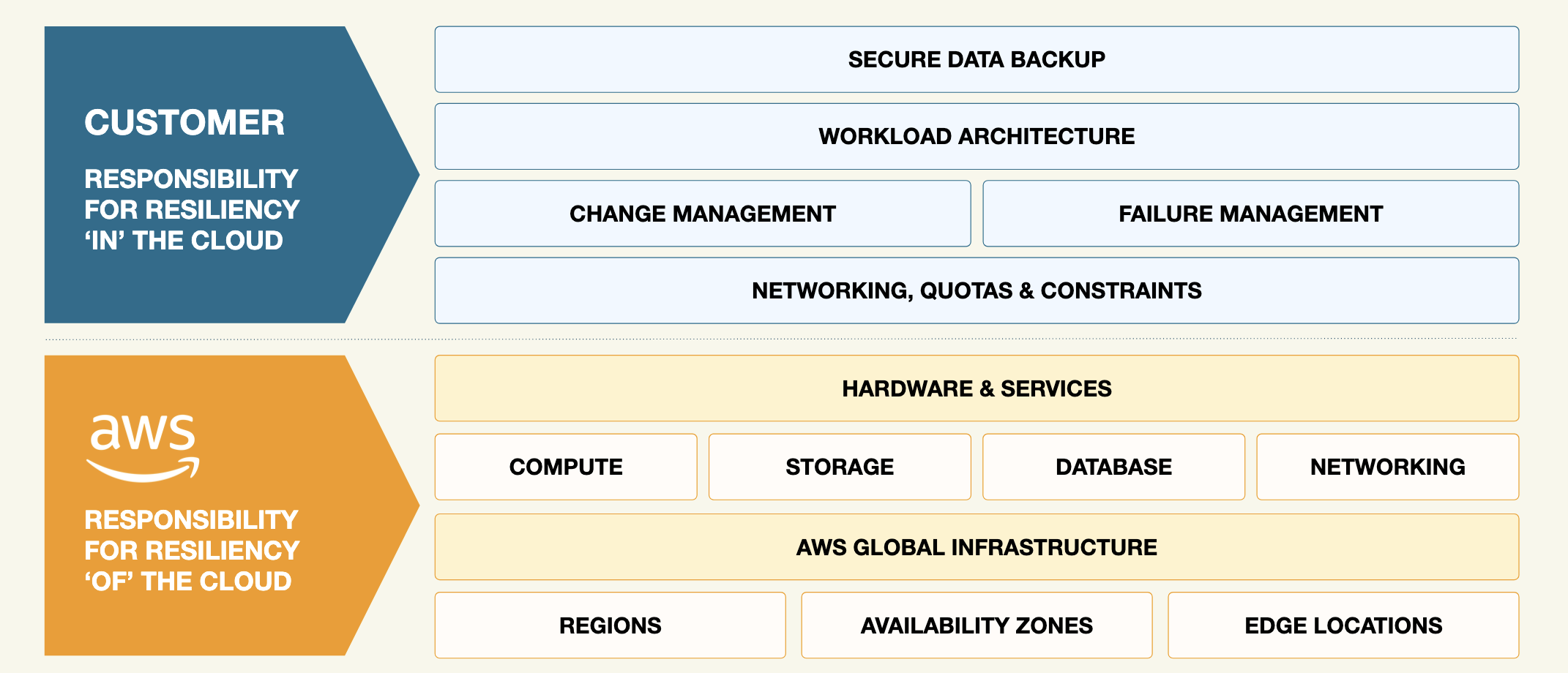

Why isn’t EKS backup handled automatically?

Amazon EKS takes the heavy lifting out of managing the Kubernetes control plane, but that doesn’t mean you're off the hook.

Under AWS’s shared responsibility model, you're still accountable for everything running on your worker nodes. That includes stateful workloads, persistent volumes, and cluster-specific configurations, all of which need a backup strategy that understands how Kubernetes actually works.

In these environments:

- Pods run containers, often with stateful storage

- Namespaces isolate teams/services

- Persistent Volumes (PVs) and Persistent Volume Claims (PVCs) handle durable storage, often backed by EBS

- Secrets store sensitive config like credentials and tokens

Without a purpose-built backup strategy, one wrong config or cluster hiccup can spiral into downtime, finger-pointing, and a lot of YAML you didn’t plan on touching today. That's why a robust Kubernetes cloud backup approach is mission-critical.

What problems do legacy backup tools cause in EKS?

Most legacy backup platforms were built for monolithic applications and virtual machines. They don’t understand Kubernetes concepts like namespaces, PVCs, or pod identity, which makes them a poor fit for Kubernetes backup and restore scenarios.

Here’s where they typically fail:

- Lack of context: Backups don’t capture relationships between resources (e.g., namespace-specific secrets tied to PVCs).

- Storage configuration mismatch: PVCs must be restored into matching environments. Legacy tools often treat volumes as static blobs.

- Limited discovery: They miss critical stateful resources scattered across namespaces and regions.

- Restoration friction: You can’t simply “lift and shift” a resource without realigning it to its original cluster and region.

How does Eon approach EKS backup differently?

Eon was purpose-built for Kubernetes cluster backup and restore, with deep integration for Amazon EKS environments. Our platform understands Kubernetes. We back up data the way your clusters are actually structured, not forcing you to translate them into legacy VM paradigms.

Here’s how Eon stacks up against traditional tools when it comes to managing EKS backup at scale:

Eon vs. Legacy Tools: Feature-by-Feature Comparison

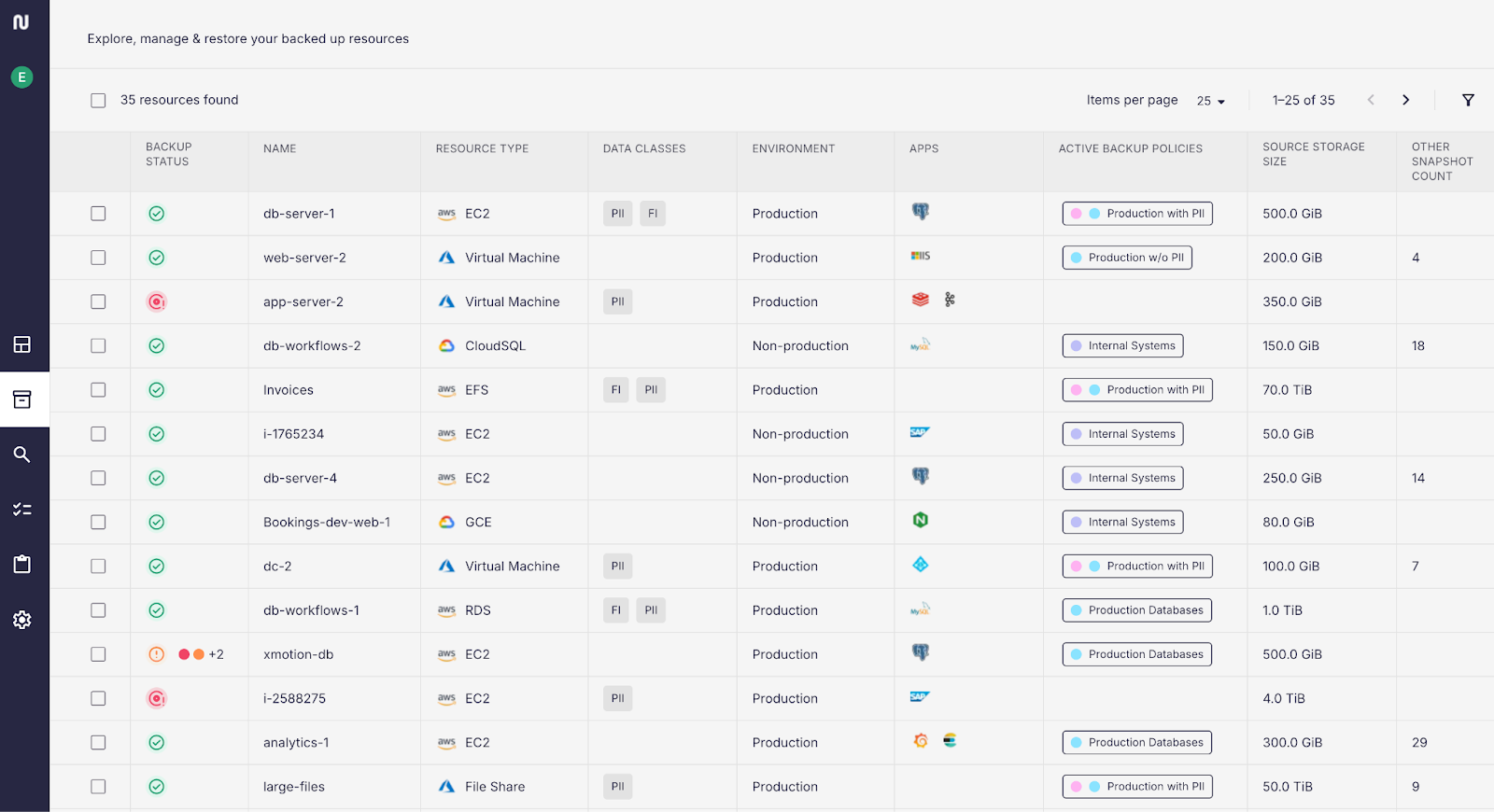

How does Eon discover and organize Kubernetes resources?

Once you grant Eon access to your EKS control plane, the platform automatically discovers backup-eligible resources across your clusters. Each Kubernetes namespace is treated as an independent unit—a practical and secure way to organize backup policies and ensure alignment with how your teams actually deploy and manage apps.

We back up:

- PVCs and PVs tied to stateful workloads

- Secrets for configuration and access

- Contextual metadata like cluster/region info
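
To illustrate the idea of a namespace as the backup unit, here is a hypothetical data model. It is not Eon's implementation: the `NamespaceBackupUnit` type and the input shape are assumptions, and only the Kubernetes kind names (`PersistentVolumeClaim`, `Secret`) are real.

```python
from dataclasses import dataclass, field


@dataclass
class NamespaceBackupUnit:
    cluster: str
    region: str
    namespace: str
    pvcs: list = field(default_factory=list)
    secrets: list = field(default_factory=list)


def group_by_namespace(cluster, region, objects):
    """Group backup-eligible objects (PVCs and Secrets) into one unit per namespace."""
    units = {}
    for obj in objects:
        kind = obj["kind"]
        if kind not in ("PersistentVolumeClaim", "Secret"):
            continue  # stateless objects such as Deployments are skipped
        ns = obj["namespace"]
        unit = units.setdefault(ns, NamespaceBackupUnit(cluster, region, ns))
        target = unit.pvcs if kind == "PersistentVolumeClaim" else unit.secrets
        target.append(obj["name"])
    return units


objects = [
    {"kind": "PersistentVolumeClaim", "namespace": "payments", "name": "postgres-data"},
    {"kind": "Secret", "namespace": "payments", "name": "postgres-credentials"},
    {"kind": "Deployment", "namespace": "payments", "name": "api"},
    {"kind": "Secret", "namespace": "web", "name": "tls-cert"},
]
units = group_by_namespace("prod-cluster", "us-east-1", objects)
print(units["payments"])
```

Carrying the cluster and region on every unit is what later allows a restore to land back in the right place.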

Can I control what gets backed up and how often?

Not every workload needs to be backed up. Our Cloud Backup Posture Management (CBPM) platform gives you full control without the overhead. You can:

- Automatically ignore stateless resources to reduce noise

- Configure backup frequency, retention, and exclusions per resource or namespace

- Manage policies across multiple clusters and environments from a single view

But Eon goes even further with intelligent data classification. When onboarding your EKS clusters, we analyze each namespace to identify:

- Which applications are running

- Whether it's a production or non-production environment

- If the PVCs contain sensitive data, like PII or credentials

This helps you prioritize what to back up and apply the right policy without guesswork.

💡 How does Eon help reduce storage costs and clutter? Eon helps teams manage cost and compliance by retaining what’s important and pruning what’s not. Backups are automatically classified, deduplicated, and optimized. Then, stale snapshots are cleaned up on your behalf.

And for data-rich environments, we also support granular database search and restore for workloads running on PVCs—the same powerful restore functionality we offer for EC2-based databases.

You stay in control of your backup posture, without babysitting jobs or writing brittle scripts to fill in tool gaps.

What does Eon’s restore process look like in real life?

The best backups are the ones you can restore fast.

Eon ensures that restoration happens in the right context, meaning:

- Resources go back to the correct namespace, region, and cluster

- Associated configurations, like Secrets, are redeployed seamlessly

- Your team spends less time reconfiguring and more time resolving

Whether recovering from a failed deployment or performing a disaster recovery operation, Eon ensures your Kubernetes backup strategy delivers real-world resilience, not just theoretical safety.

Real-World Example: A fintech company accidentally deleted a critical PVC tied to a Postgres database running in an EKS cluster. With Eon, the SRE team restored the PVC and associated Secrets within minutes, without needing to manually map volumes or redeploy services, avoiding customer downtime and an internal SLA breach.

Key Takeaways: Smarter EKS Backup, Done Right

Amazon EKS simplifies Kubernetes operations, but backing up stateful data remains a complex task.

Legacy backup tools aren't built for Kubernetes, leading to misconfigurations, slow recovery, and wasted effort.

Eon provides a Kubernetes backup platform that:

- Identifies and backs up PVCs, Secrets, and more at the namespace level

- Optimizes policies for speed, compliance, and efficiency

- Enables recovery with full context and minimal overhead

Ready to Simplify Your EKS Backup Strategy?

Eon makes it easy to protect your Kubernetes workloads without diving into endless YAML, scripting backup jobs, or managing third-party tooling.

Request a personalized demo and see how Eon can transform your EKS backup posture today.

EKS Backup FAQs: What Cloud Ops and SRE Teams Need to Know

Curious about how to back up Amazon EKS the right way? These frequently asked questions cover persistent volumes, Kubernetes backup strategies, and how Eon removes the usual complexity.

What’s the best way to back up EKS persistent volumes?

The most effective way to back up EKS persistent volumes is by using a platform that understands how stateful data is stored and accessed in EKS. Eon automatically discovers and protects Persistent Volumes (PVs) and Persistent Volume Claims (PVCs) at the namespace level so your backup policies stay efficient, accurate, and aligned with how your clusters actually run.

Do I need to back up stateless Kubernetes clusters?

Generally, stateless workloads in Kubernetes don’t need to be backed up. They’re easily redeployed and don’t retain critical data between restarts. But once your cluster includes databases, persistent storage, or configuration stored in Kubernetes Secrets, backup becomes essential. Eon intelligently filters out the noise and ensures only stateful, high-value resources are captured.

How does Eon differ from traditional Kubernetes backup tools?

Most legacy tools were built for VMs, not containers. They treat Kubernetes like just another layer of infrastructure. Eon is different:

- Namespace-level discovery

- Granular policies, automated stale snapshot cleanup, and seamless multi-cluster support

- Context-aware restore that gets data back into the right cluster, region, and namespace

In short, it means less effort, more control, and backups that are actually useful when you need them.

How to Protect Your S3 Backups: Advice from an AWS Storage Expert

In this episode of Cloud Cuts, Eon Co-founder Gonen Stein sits down with AWS Senior Storage Specialist Anthony Fiore to unpack the evolving S3 landscape. From the shared responsibility model to the rise of ransomware threats, they explore what it takes to protect mission-critical cloud data today – and what’s coming next.

Want to hear how AWS thinks about S3 backups?

Watch the full episode

As cloud adoption accelerates, so do the risks and realities organizations must navigate. In particular, the role of Amazon S3 has evolved from simple storage to mission-critical infrastructure, bringing new challenges to light.

Why S3 Is More Critical and More Complex Than Ever

Amazon S3 remains the backbone of cloud storage infrastructure. However, the explosive growth of mission-critical data, along with rising complexity and compliance demands, is reshaping how companies approach S3 backups.

“Everything fails all the time, according to our VP and CTO. So, how do we ensure that we are doing what we can to prepare and protect our cloud resources, especially those that are business critical or sensitive?”

– Anthony Fiore, AWS, Senior Storage Specialist

The notion that “everything fails…” is not a pessimistic view. It’s just the reality of operating in the cloud. And with that reality comes an urgent need for smarter, more resilient backup strategies. S3’s impressive 11 nines (99.999999999%) of durability and built-in distribution across at least three Availability Zones give customers invaluable peace of mind, ensuring that their cloud infrastructure is stable and built to last.
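
To put eleven nines in perspective, the arithmetic can be worked through directly. The sketch treats the durability figure as an annual per-object probability and uses exact fractions to avoid floating-point rounding; the object count is just an example.

```python
from fractions import Fraction

# Eleven nines of annual durability, expressed exactly.
DURABILITY = Fraction(99_999_999_999, 100_000_000_000)


def expected_annual_losses(num_objects):
    """Expected number of objects lost per year at the stated durability."""
    return num_objects * (1 - DURABILITY)


def years_per_expected_loss(num_objects):
    """Average number of years between single-object losses."""
    return 1 / expected_annual_losses(num_objects)


print(expected_annual_losses(10_000_000))
print(years_per_expected_loss(10_000_000))
```

For ten million objects, that works out to an expected loss of one object every 10,000 years. The figure describes the storage layer only; it says nothing about deletions or overwrites made with valid credentials.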

However, durability is no longer the only concern – customers are increasingly focused on protecting against accidental deletions, regulatory non-compliance, sophisticated cloud ransomware threats, and operational complexity at scale. And with AI workloads and cross-region architectures multiplying the volume of stored data, S3 is more vital (and more vulnerable) than ever.

“Durability doesn’t mean immunity from threats. This business reality makes proactive data protection essential.”

– Anthony Fiore, AWS, Senior Storage Specialist

But even with S3’s durability and built-in safeguards, companies can’t afford to assume their data is fully protected. Enter the critical concept of shared responsibility – and the gaps it leaves unaddressed.

Who's Actually Responsible for Your Data?

Even with S3’s built-in tools, many companies overlook the gap between infrastructure security and data protection, a division defined by the shared responsibility model. Companies can trust their cloud vendor to manage the security of the cloud, but customers are responsible for the security of what they’ve stored in the cloud.

In other words, AWS ensures secure infrastructure, but it is the customer’s responsibility to manage data access, permissions, and backup integrity – a task that requires exacting granular control over user permissions, monitoring anomalies, and ensuring backups are safely stored and recoverable.

“When it comes to data protection in the cloud, AWS and customers each have a role to play... Customers are responsible for managing access to their data, setting the right permissions, and ensuring compliance with industry regulations.”

– Anthony Fiore, AWS, Senior Storage Specialist

To aid in this effort, AWS offers services like Amazon Macie, which uses machine learning to automatically identify and classify sensitive data like PII and financial records. Macie also detects access anomalies and flags exposure risks before they turn into breaches.

How AWS Built S3 to Be Resilient

At AWS, the philosophy is resilience by design. Key features like S3 Versioning, S3 Object Lock, and Cross-Region Replication (CRR) are all available to help customers protect against threats beyond system failure.

- S3 Versioning: Helps recover accidentally overwritten or deleted objects.

- Object Lock: Enforces Write Once Read Many (WORM), bolstering compliance.

- Cross-Region Replication (CRR): Enhances resilience by duplicating objects across AWS regions.
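
As an example of the customer-side configuration these features require, the sketch below builds a default-retention setting for Object Lock. The dictionary follows the shape accepted by boto3's `put_object_lock_configuration`; the helper name, bucket name, and 30-day period are assumptions.

```python
def default_retention(mode: str, days: int) -> dict:
    """Build an Object Lock configuration with a default retention period."""
    if mode not in ("GOVERNANCE", "COMPLIANCE"):
        raise ValueError(f"unsupported Object Lock mode: {mode}")
    if days < 1:
        raise ValueError("retention must be at least one day")
    return {
        "ObjectLockEnabled": "Enabled",
        "Rule": {"DefaultRetention": {"Mode": mode, "Days": days}},
    }


config = default_retention("COMPLIANCE", 30)
print(config)

# With boto3, this would be applied roughly as:
#   s3.put_object_lock_configuration(
#       Bucket="my-backup-bucket",  # hypothetical bucket name
#       ObjectLockConfiguration=config,
#   )
```

In compliance mode, a locked version cannot be overwritten or deleted by any user until retention expires, so the retention period deserves care before it is applied broadly.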

Alongside services like Amazon Macie, these tools form a security model that is inherently multi-layered, but still reliant on customers to implement the right configurations. And while that potent combination of features and solutions offers the bedrock upon which companies can build their cloud operations, customers are still left responsible and in need of additional guardrails and automation, particularly when it comes to backup and recovery at scale.

Addressing Backup Gaps

AWS lays the groundwork for S3 resilience, but many organizations still face critical gaps when it comes to the customer’s side of the shared responsibility model. That’s where Eon comes in.

AWS provides the foundation, while Eon addresses the practical pain points companies face on their side of that model. In this episode, Gonen outlines three persistent challenges:

- Discovery: Cloud environments are dynamic, and identifying which resources require backup (and when) is a moving target.

- Management: At S3 scale, traditional backup tools can’t efficiently handle billions of objects or exabyte-sized buckets.

- Access & Recovery: In the event of an incident, it’s often difficult or time-consuming to retrieve only the data that matters most.

Eon’s platform is purpose-built to address these challenges. Here’s how:

- Cloud-Native Deployment: Born in and for the cloud, Eon requires no agents or appliances to install. Customers pay only for what they back up, and setup is as simple as connecting read-only APIs.

- Continuous Resource Classification: Eon’s cloud backup platform continuously scans cloud environments for new or modified resources and auto-applies backup policies – eliminating “backup drift” and reducing risk exposure.

- Globally Searchable, Backup-Optimized Storage: Eon Snapshots are globally searchable and support granular restore. Whether recovering a single file or querying a backed-up database, users can access what they need without restoring an entire snapshot.

“The idea behind Eon is driven by customer pain points under the shared responsibility model – as we built Eon, we wanted to provide effective solutions for customers. Eon is the first cloud-born backup autopilot for enterprise cloud infrastructure.”

– Gonen Stein, Co-founder and President, Eon

Together, AWS and Eon form a layered defense strategy that redefines what effective S3 backup looks like in today’s cloud-first world.

Get More from Your S3 Backups

Eon offers S3 users several meaningful upgrades.

- Cloud Backup Posture Management (CBPM): No more manual scheduling or maintenance. Eon monitors and enforces your backup posture automatically.

- Granular Restore: Users can recover individual files, folders, or even database records from any point-in-time backup.

- Massive Scale Support: Eon is optimized for S3-scale data: exabytes of information, millions or billions of objects, and multi-region environments.

- Cost Efficiency: Eon stores only incremental object changes, reducing storage waste. It also eliminates the need for S3 source bucket versioning by providing cross-region backup directly from Eon’s storage layer.

- Ransomware Protection: By separating and re-encrypting data in a logically air-gapped backup vault, Eon provides ransomware-resistant protection, ensuring data remains recoverable even in worst-case scenarios.
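The "incremental object changes" idea in the Cost Efficiency bullet can be pictured as a diff between two bucket listings. The sketch below is illustrative only and does not describe Eon's implementation: it assumes each listing is a mapping from object key to a content fingerprint (an ETag-like string), and the names `ChangeSet` and `incremental_changes` are hypothetical.

```python
from typing import Dict, List, NamedTuple


class ChangeSet(NamedTuple):
    added: List[str]
    modified: List[str]
    deleted: List[str]


def incremental_changes(previous: Dict[str, str], current: Dict[str, str]) -> ChangeSet:
    """Compare two {object_key: fingerprint} listings and report what changed."""
    # Keys that exist now but did not exist in the previous listing.
    added = sorted(k for k in current if k not in previous)
    # Keys present in both listings whose fingerprint differs.
    modified = sorted(k for k in current if k in previous and current[k] != previous[k])
    # Keys that existed before but are gone now.
    deleted = sorted(k for k in previous if k not in current)
    return ChangeSet(added, modified, deleted)


# Hypothetical listings: only b.csv (modified) and d.csv (added) would need
# to be copied into the next backup; a.csv is unchanged.
yesterday = {"a.csv": "etag1", "b.csv": "etag2", "c.csv": "etag3"}
today = {"a.csv": "etag1", "b.csv": "etag9", "d.csv": "etag4"}
changes = incremental_changes(yesterday, today)
```

Under these assumptions, an unchanged object costs nothing extra to protect, which is where the storage savings of an incremental approach come from.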

A Blueprint for Smarter S3 Backups

S3 is one of the most reliable storage services available, but backups must still be protected, managed, and recoverable. Fortunately, between AWS’s robust front-facing infrastructure and Eon’s intelligent automation layer, organizations can now access a full-stack blueprint for S3 backup success.

Whether your team is dealing with accidental deletions, compliance audits, or the looming threat of ransomware, proactive data protection isn’t just a “nice to have”—it’s mission-critical.

Want to hear the whole conversation?

Watch the full episode of Cloud Cuts: S3 Backups with AWS’s Anthony Fiore

Register for the next episode of Cloud Cuts Live: How to Cut Cloud Data Retention Costs

Eon’s Cloud Backup Platform Overview

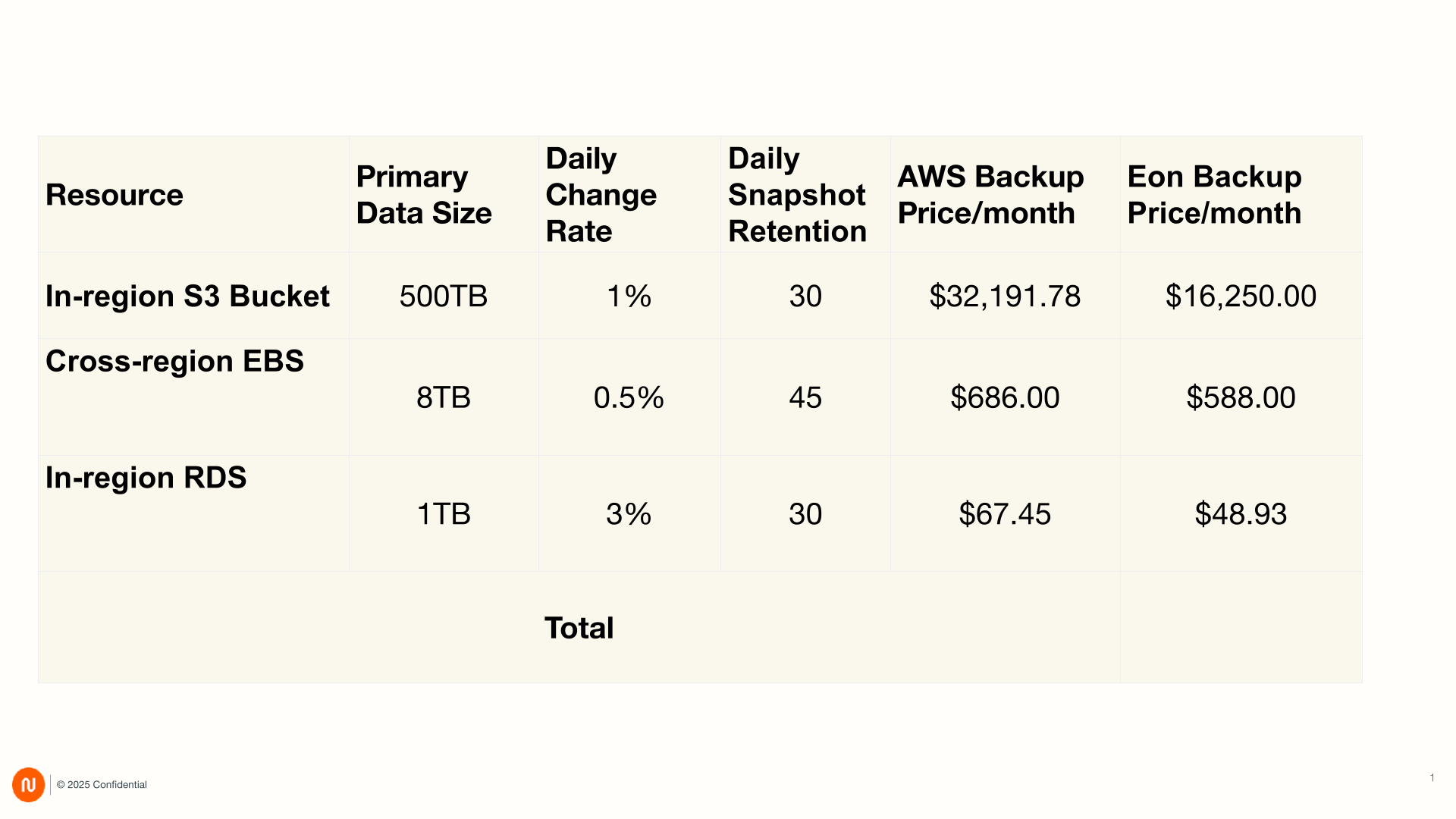

When evaluating backup solutions, Total Cost of Ownership (TCO) is a critical factor for enterprises to consider. TCO extends beyond the upfront costs to include the hidden expenses inherent to legacy data protection strategies.

Why is TCO critical for cloud backup solutions?

Over-backing up data leads to excessive storage costs and resource waste, while under-backing up exposes organizations to severe risk of data loss and non-compliance.

Additionally, traditional restore processes are unwieldy and labor-intensive. They incur high costs in both employee time and cloud compute and storage, because entire volumes or database instances must be restored just to retrieve the specific data sought.

Inadequate backup posture controls ultimately leave organizations exposed to ransomware and data exfiltration, while the lack of global search and granular restore capabilities hinders operations teams’ ability to quickly recover from such events.

A well-optimized backup solution minimizes these hidden costs by ensuring precise data coverage, efficient restore capabilities, and streamlined processes that reduce operational overhead and risk.

Related: Learn how to cut cloud data retention costs in a live session with AWS storage specialists

How the Eon storage tier works

While Eon lowers TCO for cloud customers overall by addressing the hidden costs of data protection, we also improve on the most obvious cost of cloud backups: storage itself. Eon can save customers up to 50% on cloud backup costs just by converting their existing backups into Eon snapshots. We achieve this with a storage tier designed to store backups of cloud infrastructure resources efficiently. Because data is compressed and deduplicated as it is ingested into the storage tier, customers benefit when they switch away from traditional uncompressed cloud snapshots.
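To make compression and deduplication concrete, here is a minimal sketch of a content-addressed chunk store. It is an illustration under stated assumptions, not a description of Eon's storage tier: the `ChunkStore` class, the fixed-size chunking, and the tiny `CHUNK_SIZE` are all hypothetical choices made so the example is easy to follow.

```python
import hashlib
import zlib
from typing import Dict, List

CHUNK_SIZE = 4  # deliberately tiny for readability; real systems use far larger chunks


class ChunkStore:
    """Content-addressed store: identical chunks are kept once, compressed."""

    def __init__(self) -> None:
        self.chunks: Dict[str, bytes] = {}

    def ingest(self, data: bytes) -> List[str]:
        """Split data into fixed-size chunks and return the ordered chunk digests."""
        manifest = []
        for i in range(0, len(data), CHUNK_SIZE):
            chunk = data[i:i + CHUNK_SIZE]
            digest = hashlib.sha256(chunk).hexdigest()
            if digest not in self.chunks:  # deduplication: store each chunk once
                self.chunks[digest] = zlib.compress(chunk)  # compression at ingest
            manifest.append(digest)
        return manifest

    def restore(self, manifest: List[str]) -> bytes:
        """Rebuild the original bytes from an ordered list of chunk digests."""
        return b"".join(zlib.decompress(self.chunks[d]) for d in manifest)


store = ChunkStore()
first = store.ingest(b"AAAABBBBAAAA")   # the two AAAA chunks are stored once
second = store.ingest(b"AAAACCCC")      # only CCCC is new to the store
```

Two backups that share most of their content end up sharing most of their stored chunks, so the second backup adds only what is new.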

The hidden costs of cloud data protection

Let's look at how Eon addresses the hidden costs of cloud data protection, and see how the TCO of Eon stacks up to the competition in a number of dimensions.

Reducing TCO with insight into which workloads should be backed up, and which shouldn’t. According to industry reports, ⅓ or more of IT budgets are wasted.

As the first backup solution to deliver automated discovery, classification, and policy enforcement across multi-cloud environments, Eon significantly lowers the Total Cost of Ownership (TCO) of an enterprise’s backup strategy. By eliminating the need for manual tagging while still allowing existing metadata and tags to be used, customers gain newfound levels of insight into the applications and data they are backing up without the need for any extra configuration or setup.

With this added visibility, Eon’s automated cloud resource discovery helps customers avoid over-backing up cloud resources. Eon provides a comprehensive view of the current backup posture across what can be thousands of cloud resources in many accounts, enabling enterprises to identify inefficiencies and risks quickly. With centralized backup policies that apply consistently across all teams and cloud environments, Eon simplifies management, reduces errors, and ensures compliance, ultimately saving costs while improving data protection.

Reducing operational and administrative toil allows technical teams to focus on what they do best

Eon empowers teams to unlock the full value of their backup solution by providing a single pane of glass for an enterprise's entire cloud environment and related backup posture. As an agentless solution, Eon requires no software installation or additional compute resources running alongside production workloads, ensuring minimal disruption to operations while streamlining backup and recovery processes. This equips our customers with an intuitive console interface and well-documented data protection API that requires minimal operational overhead and investment.

A seamless and unified data-protection platform, Eon also eliminates the need for labor-intensive backup projects for individual teams, reducing administrative overhead and freeing up valuable planning and development resources. Eon’s global search and granular restore capabilities allow users to quickly locate and retrieve specific data without restoring entire environments, saving both operator time and cloud compute/storage costs. This combination of simplicity, efficiency, and accessibility transforms backups from a burdensome necessity into a strategic asset for any enterprise.

Backup posture means being able to respond to and recover from unexpected data breaches and data loss.

Eon enhances these capabilities for every customer, without the need to install any software or purchase any license.

Beyond offering the benefits of a more efficient backup solution, built for the cloud from the ground up, Eon provides additional capabilities that help enterprises improve security and compliance. For instance, our global search and granular restore capabilities eliminate the need to restore entire environments to recover data, allowing enterprises to quickly recover specific files or database records, saving time and reducing cloud compute and storage costs in the process. These capabilities can save cloud teams a significant amount of manual work, allowing them to rise above the operational inefficiencies that plague traditional recovery processes and avoid the high costs associated with these otherwise tedious operations.

Cloud consumption cost savings

Now that we understand the factors that go into TCO analysis of a data protection solution, let’s take a look at a comparison of cloud consumption cost of AWS Backup versus Eon:

The table above compares cloud consumption costs for workloads commonly seen among our customers. Eon improves the cost and operational efficiency of enterprise data in more ways than raw cloud compute and storage costs alone:

Increased visibility leads to savings from over-backup

When enterprises don’t have a good grasp of their current backup posture, it’s easy for cloud consumption waste to get out of control due to over-backup of non-critical workloads. At Eon, we often find that customers realize significant savings as early as the proof-of-concept phase, as they review Eon’s automatic discovery of cloud resources:

When Eon’s discovery automatically inventories an organization’s cloud resources, in addition to running automated data and environment classification, it also determines whether snapshots already exist for those resources.

When one Eon customer reviewed their initial environment classification results, they found a large, non-critical workload with over 10,000 snapshots they were previously unaware of. Deletion of the unnecessary snapshots and the setting of an appropriate retention policy saved this enterprise tens of thousands of dollars right off the bat!
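Setting "an appropriate retention policy" comes down to comparing each snapshot's age with a retention window. The following is a minimal sketch of that comparison, not Eon's logic: the `Snapshot` type, the `expired_snapshots` function, and the 30-day window are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List, NamedTuple


class Snapshot(NamedTuple):
    snapshot_id: str
    created_at: datetime


def expired_snapshots(snapshots: List[Snapshot], retention_days: int, now: datetime) -> List[Snapshot]:
    """Return snapshots created before the retention cutoff, oldest first."""
    cutoff = now - timedelta(days=retention_days)
    return sorted(
        (s for s in snapshots if s.created_at < cutoff),
        key=lambda s: s.created_at,
    )


# Hypothetical inventory for one non-critical workload.
now = datetime(2025, 1, 31)
inventory = [
    Snapshot("snap-old", datetime(2024, 1, 1)),
    Snapshot("snap-recent", datetime(2025, 1, 20)),
    Snapshot("snap-stale", datetime(2024, 12, 1)),
]
to_review = expired_snapshots(inventory, retention_days=30, now=now)
```

A list like `to_review` is a candidate set for deletion, not a command to delete: a real workflow would check legal holds and compliance retention requirements first.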

Limiting exposure to catastrophic data-loss

According to an IBM report, the average cost of a data breach (including ransomware) is $4.88 million. That is a 10% increase over the previous year and the highest average on record. Data has become the single most valuable asset of most businesses.

An insufficient backup posture can be what makes the difference between normal business operations and a critical workload becoming another industry data-loss statistic.

In 2023, a major US casino corporation reported a $100 million loss from an extensive ransomware attack on its operations. Much of the financial loss in such breaches comes not from the initial loss of data but from the hit to reputation and the revenue lost while operations stall and IT teams struggle to recover in a timely manner.

Eon’s global search and granular restore capabilities help enterprises maintain access to backed-up data and restore it in a precise, efficient, and timely manner. In the case of relational database restores, for example, Eon enables customers to query the backup storage tier and securely restore individual tables or rows into live, running databases. In a ransomware recovery scenario, this not only saves precious time for operations teams but also helps prevent the further data loss that can result from exposing potentially compromised workloads to further attack.

What’s next?

Do you think your organization could benefit from a reduced data-protection TCO with Eon? Book a demo to learn more.

Five Ways to Improve Your Cloud Backup Strategy

Cloud backups are increasingly recognized as a foundational part of smooth business operations, yet with the complexity of modern cloud environments, many organizations find their backup strategies lagging. Whether it’s over-backing up data, struggling with manual processes, or facing rising costs, there’s plenty of room for improvement.

Looking for ways to shake things up and get more value from your backups? You’ve come to the right place. Here are five ways enterprises can optimize their cloud backup strategy and ensure their operations remain resilient and efficient.

1) Optimize Storage for Cost and Efficiency

Cloud storage costs can easily spiral out of control. This risk grows even worse when businesses take a "just in case" approach to their cloud backups. Without a savvy backup strategy, companies are liable to over-back up data or retain unneeded data for longer than necessary, leading to inflated costs without adding value.

So, how should teams approach their cloud backup strategy? Start by evaluating what data needs to be backed up and for how long. Data that’s critical to operations or tied to compliance regulations might require longer retention periods, but less critical data can have shorter lifespans. Additionally, eliminating redundant backups and optimizing storage policies can significantly reduce expenses.

By aligning backup retention policies with business needs, organizations not only save money but also make it easier to locate and restore data when necessary. Efficient storage management ensures that every dollar spent on cloud resources contributes directly to operational resilience.


2) Granular Recovery for Rapid Access

When something goes wrong — anything from ransomware threats to data leakage to data center malfunctions — the speed at which a company can recover their data often determines the overall impact on business. Unfortunately, many traditional backup strategies require full-volume restores to access a single file or table — an inefficient and time-consuming triage solution, especially when issues only affect some but not all of a company’s data.

Granular recovery is revolutionizing the way businesses bounce back after a data challenge. With granular recovery capabilities in their tool chest, businesses can pinpoint the exact data they need and recover it without restoring unnecessary files or systems. This saves valuable time, reduces operational disruptions, and allows teams to quickly resume their work.

Imagine a compliance audit that requires the retrieval of specific records from months ago. Instead of sifting through entire databases, granular recovery enables IT teams to locate the relevant information in seconds. The ability to search across backups and recover data at a granular level transforms backups from a static archive into a dynamic tool for operational continuity.

3) Regularly Audit Your Backup Strategy

A "set it and forget it" approach to backups is a recipe for disaster. As cloud environments evolve, backups are ever more likely to drift out of alignment with organizational needs.

Regular audits ensure that backup strategies remain effective and up to date.

These audits should include a full inventory of resources to verify that all critical systems and data are protected. It’s also important to assess whether backup policies are being applied correctly and continue to meet the latest regulatory compliance requirements. Testing restoration processes is another essential step — there’s no better way to ensure your backups are reliable than by simulating a real-world recovery scenario.
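The inventory step of such an audit can be expressed as a set comparison between what exists and what is protected. This is a minimal sketch under stated assumptions: the resource IDs, the `"critical"` label, and the `audit_coverage` function are hypothetical, and a real audit would pull both inputs from cloud provider and backup tool APIs.

```python
from typing import Dict, List, Set


def audit_coverage(inventory: Dict[str, str], protected: Set[str]) -> Dict[str, List[str]]:
    """Compare a resource inventory against the set of resources that have backups.

    inventory maps resource id -> criticality label ("critical" or anything else).
    """
    # Critical resources with no backup: the gaps an audit most needs to surface.
    unprotected_critical = sorted(
        rid for rid, level in inventory.items()
        if level == "critical" and rid not in protected
    )
    # Backups whose source resource no longer exists: candidates for cleanup review.
    orphaned_backups = sorted(rid for rid in protected if rid not in inventory)
    return {
        "unprotected_critical": unprotected_critical,
        "orphaned_backups": orphaned_backups,
    }


# Hypothetical audit inputs.
report = audit_coverage(
    inventory={"db-orders": "critical", "db-users": "critical", "vm-scratch": "non-critical"},
    protected={"db-orders", "vm-retired"},
)
```

Running a comparison like this on a schedule, rather than once a year, is what turns an audit from a scramble into a routine check.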

Frequent audits will help businesses catch potential gaps before they escalate into serious issues, providing peace of mind that data is securely stored but still accessible when needed most.

4) Strengthen Security to Protect Your Backups

Backups are a prime target for cyber threats like ransomware, making security a non-negotiable part of any cloud backup strategy. A multi-layered security approach can safeguard data against both external attacks and internal mishaps.

Key security measures include robust access controls to ensure that only authorized users can access or modify backups. Encryption — for data both at rest and in transit — is also critical for protecting sensitive information. Additionally, anomaly detection systems can alert security teams to unusual activity, such as unexpected spikes in data changes, which may indicate a potential security breach.
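As a rough illustration of how an "unexpected spike in data changes" might be flagged, the sketch below compares the latest daily change volume with recent history using a simple z-score. The function name, the threshold of 3 standard deviations, and the sample numbers are assumptions chosen for the example; production anomaly detection is typically more sophisticated.

```python
from statistics import mean, stdev
from typing import List


def is_change_spike(history: List[float], latest: float, threshold: float = 3.0) -> bool:
    """Return True if `latest` is more than `threshold` standard deviations above the mean of `history`."""
    if len(history) < 2:
        return False  # not enough history to estimate normal variation
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # History is perfectly flat, so any increase is unusual.
        return latest > mu
    return (latest - mu) / sigma > threshold


# Hypothetical daily changed-data volumes in GB.
daily_changed_gb = [10, 12, 11, 9, 10, 11, 10]
normal_day = is_change_spike(daily_changed_gb, latest=12)
suspicious_day = is_change_spike(daily_changed_gb, latest=60)
```

A sudden jump in changed data is only a signal worth investigating, since mass encryption by ransomware and a legitimate bulk migration can look similar at this level.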

Integrating security into cloud backup strategy not only protects a company’s data, but also builds trust with customers and stakeholders. The result is a business that remains trusted, confident, and resilient in the face of evolving threats.

5) Automate to Reduce Complexity

Finally, managing backups manually in a cloud environment is a daunting task, so companies should also keep an eye out for solutions that allow them to automate management of their cloud backup infrastructure. Indeed, the dynamic nature of modern infrastructures — with resources being created, modified, and retired daily — makes manual tagging and configuration nearly impossible to maintain. Automation offers a solution for many of these hurdles.

By automating processes like resource discovery, backup policy enforcement, and real-time monitoring, businesses can eliminate the risk of human error and improve efficiency. For example, automated tools can classify resources and apply the correct backup policies based on predefined criteria, ensuring consistency and freeing up IT teams to spend more time driving innovation.
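Applying "the correct backup policies based on predefined criteria" can be sketched as a lookup from a classification tag to a policy. Everything below is hypothetical: the `data-type` tag key, the policy fields, and the frequency and retention values are placeholders for illustration, not recommendations or regulatory figures.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class BackupPolicy:
    frequency_hours: int
    retention_days: int
    immutable: bool


# Hypothetical policy table keyed by data classification.
POLICIES: Dict[str, BackupPolicy] = {
    "pii": BackupPolicy(frequency_hours=4, retention_days=365, immutable=True),
    "financial": BackupPolicy(frequency_hours=12, retention_days=730, immutable=True),
    "general": BackupPolicy(frequency_hours=24, retention_days=30, immutable=False),
}


def policy_for(tags: Dict[str, str]) -> Optional[BackupPolicy]:
    """Return the policy matching a resource's data-type tag, or None if it has no known tag."""
    return POLICIES.get(tags.get("data-type", "").lower())


tagged = policy_for({"data-type": "PII", "team": "payments"})
untagged = policy_for({"team": "payments"})  # None: surface this as a coverage gap
```

Returning `None` for untagged resources, instead of silently falling back to a default, keeps coverage gaps visible so they can be fixed.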

Automation also offers the benefit of real-time notifications, keeping administrators consistently informed about the health and status of their backup environment. With proactive alerts, potential issues can be addressed before they escalate, ensuring backups remain reliable and secure.

Move Forward with Better Backups

A better, future-proof cloud backup strategy doesn’t have to be overwhelming.

By automating processes, optimizing storage, enabling granular recovery, executing regular audits, and prioritizing security, businesses can transform their backups from a last resort safety net into a reliable and efficient asset that drives value and innovation across the enterprise.

At Eon, we’ve seen firsthand how these strategies can revolutionize backup management — and we’ve built the tools that allow companies to implement them. We’re always here to provide guidance and expertise to help businesses make the most of their cloud environments.

The bottom line? Backups shouldn’t just be an insurance policy — at their best, they can be a strategic tool for success. Make this the year you take your cloud backup strategy to the next level.

Cloud Backup Compliance: What Cloud Ops Teams Need to Know to Stay Audit-Ready and Cut Costs

Why Is Cloud Backup Compliance So Complex?

Backups seem simple—until you're in the cloud, where infrastructure spins up, shifts, and vanishes faster than your tools can keep up.

Compliance is hard, but in the cloud, it’s chaos. You’re backing up against workloads that constantly spin up, scale, and disappear. That’s where it gets tricky.

Each Compliance Framework Has Its Own Backup Requirements

HIPAA governs healthcare, GLBA and PCI DSS cover financial services, and public sector teams must meet frameworks like FedRAMP or CJIS. Then there are industry-agnostic laws like GDPR and CCPA that layer on strict requirements about how—and where—data must be stored.

Most teams can’t see what’s in their environment. As scale increases, they lose track of what’s where and who owns it. Without real-time visibility, they end up over-backing up everything “just in case”—blowing budget, missing gaps, and putting audits at risk.

The core of Cloud Backup Posture Management (CBPM) is knowing what’s protected, proving it, and proactively fixing what’s not.

CBPM means continuous, real-time visibility, enforcement, and control so compliance isn’t a guessing game—it’s verifiable, automated, and audit-ready.

Backups Alone Aren’t Enough to Pass an Audit

Having backups isn’t enough. Compliance audits demand more than stored data: they want evidence of retention, encryption, access controls, and recovery testing. Most teams scramble when audits hit because they can’t readily produce that proof.

Can you show that your backups succeeded last month? Are you sure sensitive workloads are covered? If not, you’re already out of compliance.

Backup Sprawl Happens When No One Owns the Full Picture

In the cloud, change is constant, and no one has complete visibility. New resources appear without tagging, app teams don’t know what’s stored, and security teams can’t guide backup scope.

To avoid missing something critical, teams over-back up. But no single team owns the full picture, so backup policies drift, critical data gets missed, and no one notices until something breaks or an audit lands.

Cross-Region Backup Requirements Drive Up Costs Quickly

Many compliance standards require geographically distributed backups. That means cross-region copies, egress fees, storage overhead, and added complexity.

Without centralized visibility, teams often over- or under-protect data without realizing it. They burn budget without hitting compliance targets.

Solving backup compliance in the cloud is like puzzling with moving pieces.

Why Cloud Backup Strategies Break Down in Practice

Cloud backups get expensive and brittle—not because the tools are broken, but because teams are stuck juggling priorities that rarely align.

Security wants immutable, redundant backups. Compliance wants audit-ready logs and coverage. Finance needs to control storage spend. Meanwhile, cloud infrastructure never stops changing.

Traditional backup tools weren’t built for that. They assume stability and predictability. So teams try to retrofit static policies onto dynamic environments, creating a tangle of exceptions, gaps, and unnecessary spend.

- Manual tagging is slow and error-prone. Even when done correctly at launch, data in an instance can change, like adding PII or HIPAA data later, without updating the tag. That drift breaks the backup scope and often goes unnoticed until audit time.

- Storage costs keep rising. As data grows and retention windows stretch, backup bills can balloon, especially when teams default to over-retaining just to be safe.

- Security and compliance gaps are common. Without safeguards like air-gapping or consistent tagging, backups become a silent failure point, often discovered only during audits or incidents.

Bottom line: Backups are like any other reliability system—you don’t know if they work until you need them. And by then, it’s too late.


Data Compliance Roadblocks

Two companies encountered compliance roadblocks. Here’s what went wrong.

Example 1: Heavy Spending, Still Not Audit-Ready

A global financial firm poured money into multi-region backups to meet GDPR and SOC 2 requirements. But when auditors asked for proof, the team couldn’t confirm whether dynamic workloads had ever been backed up.

Tags were missing, logs were incomplete, and they lacked a centralized inventory to track backup coverage across changing resources. Despite significant spending, they had no clear view of what was protected and what was at risk.

Example 2: Big Bills, Basic Capabilities Missing

A legal claim required a tech giant to restore records from over a year ago, but their backup tool didn’t support filtering or searching. Teams spent weeks digging through data, missing deadlines, burning budget, and still coming up short.

How to Design a Backup Strategy That’s Compliant and Cost-Efficient

You don’t need to reinvent the wheel, but you do need a plan. Ask yourself:

- What kind of data are you working with? Different data types have different rules. Tailor retention accordingly.

- Is your data encrypted, and is your key management solid? Encryption is only useful if the keys are managed, rotated, and restricted properly.

- Who can access your backups? RBAC and scoped IAM policies matter.

- Do you have region-based compliance obligations? Identify what needs to stay local and what can be duplicated.

- Can you prove audit readiness? You’ll need reports showing backup success, policy enforcement, and recoverability.

- Are your backups protected against ransomware? Backups must be immutable, monitored, and isolated from attack paths. Eon enforces immutability by default, so your backup data can’t be altered, deleted, or encrypted—even by compromised credentials.

Learn more in our Cloud Ransomware Guide.

Even with a solid strategy, common pitfalls still derail teams, especially in fast-moving, multi-cloud environments.

Avoid the Seven Common Data Backup Pitfalls

1. Misconfigured or Incomplete Coverage

It’s dangerously easy to miss backup coverage in the cloud, especially with solutions that rely on manual tagging, agent installs, or static scripts. If a new workload isn’t tagged correctly or appropriately onboarded, it won’t be protected, and no one may notice until it’s too late.

In fast-moving environments, change happens faster than manual processes can keep up. Tagging errors, ephemeral workloads, and missed deployments are inevitable unless discovery and policy enforcement are continuous and automated.

2. Over-Retention

Keeping everything “just in case” racks up costs fast, especially across regions. Most solutions create full duplicate copies, which means twice the storage and twice the cost, with no intelligence about what really needs that level of redundancy.

Without a way to manage retention and scope regionally, teams either overspend or risk under-protecting critical data. Striking the right balance takes planning—and the right tooling.

3. Underestimating Compliance Requirements

Some teams assume having backups is enough. But auditors want more: proof of retention policies, encryption, access controls, backup logs, and sometimes even evidence that restores actually work.

Preparing for that can mean days or weeks of gathering reports, configuring restore tests, and hoping nothing breaks.

Most traditional solutions make recovery slow and complex, so teams avoid testing until they’re forced to. But audits demand more than data. They require confidence that your restores will work.

4. Vendor Lock-In and Multi-Cloud Complexity

Cloud-native backup tools work fine until you need to migrate, integrate, or audit across clouds. That’s when you realize how tightly you’re locked in.

And if you’re operating in—or planning for—a multi-cloud environment, your compliance burden doesn’t just double. It fragments. Each cloud handles retention and visibility differently, complicating enforcement.

A cloud-agnostic strategy that uses open standards and flexible APIs can reduce friction and ensure backups scale with your architecture.

5. Poor Search and Recovery

Having backups is one thing. Finding what you need is another. Most tools make it harder, not easier: pick a resource, pick a point in time, then search (if supported). And few provide true cross-cloud visibility or metadata-level search across backups. That slows everything down, especially during audits, legal holds, or compliance investigations.

It gets worse with databases: many solutions require a full restore before you can even see what’s inside. That means waiting hours (or days) to retrieve a specific record.

Without fast, cross-cloud search and instant backup visibility, proving data integrity becomes time-consuming, costly, and risky.

Suggested Article: How StructuredWeb cut restore time by 98% and eliminated the need to restore full databases just to query critical data.

6. Inconsistent Tagging

Tagging is the unsung hero of backup visibility. Without consistent tagging, tracking what’s being backed up, what’s missing, and whether the right policies are being applied is nearly impossible.

As environments scale, new resources often lack tags and fall outside backup coverage. This represents a silent compliance and security risk; without enforcement, it only worsens over time.

7. Maintenance

A misaligned IAM policy can break backups, and fixing it often sparks a scramble across teams.

All these issues—retention, tagging, security gaps, audit prep—come down to backup posture: do you know what’s protected, and can you prove it?

Enter Cloud Backup Posture Management (CBPM)

CBPM flips backup from reactive to proactive. It gives you:

- Real-time discovery and classification of cloud resources so even fast-moving, dynamic environments stay in scope.

- Automated policy enforcement to ensure backup coverage, retention, and encryption stay aligned with compliance requirements.

- Centralized visibility and cost control across accounts, clouds, and teams.

- Audit-grade reporting that proves what’s protected, what’s not, and where action is needed.

And that’s exactly what Eon was built for.

How Eon Makes Backup Compliance Simple and Scalable

Eon is designed for the real cloud, where change is constant and compliance can’t be bolted on later. Our CBPM platform removes manual overhead and gives you:

- Self-driving posture management: We discover and classify resources automatically, map them to compliance standards, and enforce policies in real time.

- Proactive remediation: We identify misconfigurations, such as missing retention or encryption, and recommend precise fixes before they become issues.

- Real-time visibility: See what’s protected, what’s not, and where your biggest risks or cost drains live. Stay ahead of audits with auto-generated, always-on reports.

Take the First Step

Cloud backup compliance shouldn’t be this hard. Let’s fix it. With the right tools, you can simplify backup, cut costs, and stay audit-ready.

Let’s walk through it together.

FAQ: What Should Cloud Ops Teams Know About Backup Compliance?

What are the most common cloud backup pitfalls?

Missing coverage from manual tagging, over-retention that drives up costs, backups that fail silently due to IAM issues, and poor restore readiness.

Do backups need to be immutable for compliance?

Yes—immutability protects against ransomware and insider threats. If backups can be altered or deleted, they’re not compliant or reliable.

Is having backups enough to pass an audit?

No. Auditors want proof of backup success, retention policies, encryption, access controls, and restore testing. Data alone isn’t enough.

Why is backup compliance so expensive in the cloud?

Cross-region requirements, over-retention, lack of visibility, and duplicated tools all contribute. Cost grows fast without centralized control.

How do I simplify compliance across AWS, Azure, and GCP?

Use a platform that centralizes backup visibility, automates enforcement, and provides audit-grade reporting across clouds.
